This article is part of Demystifying AI, a series of posts that (try) to disambiguate the jargon and myths surrounding AI.

Moments of epiphany tend to come in the unlikeliest of circumstances. For Ian Goodfellow, PhD in machine learning, it came while discussing artificial intelligence with friends at a Montreal pub one late night in 2014. What came out of that fateful meeting was the “generative adversarial network” (GAN), an innovation that AI experts have described as the “coolest idea in deep learning in the last 20 years.”

Goodfellow’s friends were discussing how to use AI to create photos that looked realistic. The problem they faced was that current AI techniques and architectures, such as deep learning algorithms and deep neural networks, are good at classifying images but not very good at creating new ones.

Goodfellow came up with the idea of a new technique in which two neural networks challenge each other, learning to create and improve new content through an iterative process. That same night, he coded and tested his idea, and it worked. With the help of fellow scholars and alums from his alma mater, Université de Montréal, Goodfellow later completed and compiled his work into a famous and highly cited paper titled “Generative Adversarial Nets.”

Since then, GAN has sparked many new innovations in the domain of artificial intelligence. It has also landed the now 33-year-old Ian Goodfellow a job at Google Research and a stint at OpenAI, and it has turned him into one of the few, highly coveted AI geniuses.


Deep learning’s imagination problem

GAN addresses the lack of imagination haunting deep neural networks (DNNs), the popular AI structure that roughly mimics how the human brain works. DNNs rely on large sets of labeled data to perform their functions. This means that a human must explicitly define what each data sample represents for DNNs to be able to use it.

For instance, give a neural network enough pictures of cats and it will glean the patterns that define the general characteristics of cats. It will then be able to find cats in pictures it has never seen before. The same logic is behind facial recognition and cancer diagnosis algorithms. This is how self-driving cars can determine whether they’re rolling on a clear road or running into a car, bike, child, or another obstacle.
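To make “gleaning patterns” from labeled examples a little more concrete, here is a minimal sketch in Python. It is not a deep neural network but a single logistic-regression unit (essentially a one-neuron network) trained by gradient descent, and the two-number “features” and their cat/not-cat labels are made up for illustration. A real image classifier learns from millions of pixels across many layers, but the principle of adjusting weights until predictions match human-supplied labels is the same.

```python
import math

def sigmoid(t):
    # Numerically stable logistic function: maps any number into (0, 1).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def predict(w, b, x):
    # Probability that sample x belongs to the "cat" class.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_classifier(samples, labels, lr=0.5, epochs=500):
    """Fit weights w and bias b so that predict() matches the human-supplied labels."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = predict(w, b, x) - y  # gradient of the log-loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

# Hypothetical toy features, each labeled by a human: 1 = "cat", 0 = "not cat".
samples = [(0.9, 0.8), (0.8, 0.9), (0.95, 0.7), (0.1, 0.2), (0.2, 0.1), (0.15, 0.3)]
labels = [1, 1, 1, 0, 0, 0]

w, b = train_classifier(samples, labels)
print(predict(w, b, (0.85, 0.85)) > 0.5)  # a sample it has never seen; prints True
```

Note that the model only ever learns to map inputs to labels; nothing in it knows how to produce a new sample, which is the gap the rest of this article is about.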

But deep neural networks suffer from severe limitations. Prominent among them is the heavy reliance on quality data. The training data of a deep learning application often determines the scope and limit of its functionality.

The problem is that in many cases, such as image classification, you need human operators to label the training data, which is time-consuming and expensive. In other areas, such as training self-driving cars, generating the necessary data takes a lot of time. And in domains such as health care, the data required for training algorithms has legal and ethical implications because it is sensitive personal information.

The real limits of neural networks manifest themselves when you use them to generate new data. Deep learning is very efficient at classifying things but not so good at creating them. This is because what DNNs learn from the data they ingest does not directly translate into the ability to generate similar data. That’s why, for instance, when you use deep learning to draw a picture, the results usually look very weird (if fascinating).

This is where GANs come into play.

How does GAN work?

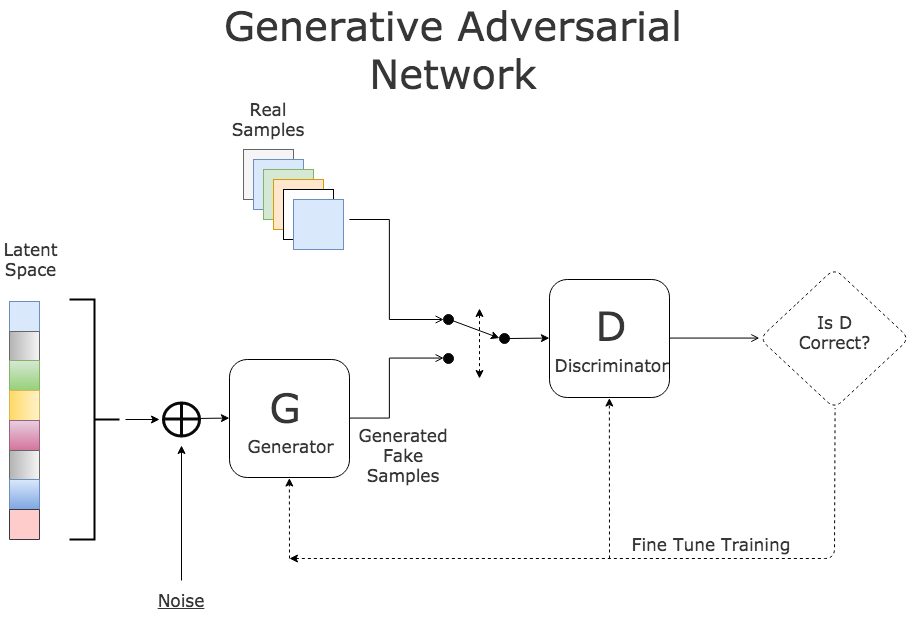

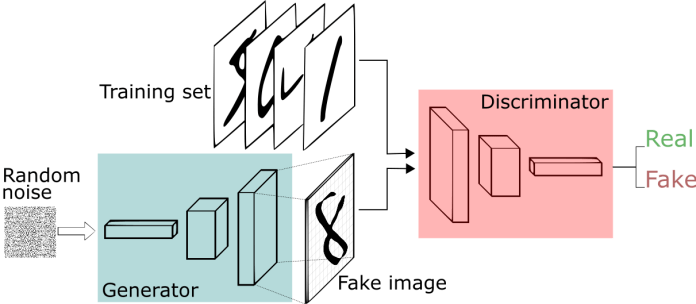

Ian Goodfellow’s Generative Adversarial Network technique proposes that you use two neural networks to create and refine new data. The first network, the generator, generates new data. The process is, simply put, the reverse of neural networks’ classification function. Instead of taking raw data and mapping it to determined outputs in the model, the generator traces back from the output and tries to generate the input data that would map to that output. For instance, a GAN generator network can start from random noise and learn to turn it into an image that a classifier would label as a cat.
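The reversal can be seen in a few lines of Python. In this minimal sketch (a hypothetical one-layer generator, not the architecture from Goodfellow’s paper), a random noise vector goes in and four pixel values, a tiny 2×2 “image” with values between -1 and 1, come out. A classifier runs in the opposite direction, from pixels to a label. The weights here are random and untrained, so the output is still meaningless; training is what would gradually shape it into something that resembles real data.

```python
import math
import random

def make_generator(noise_dim, num_pixels, seed=0):
    """Build a hypothetical one-layer generator: noise vector in, pixel values out."""
    rng = random.Random(seed)
    # Untrained weights and zero biases; GAN training would tune these.
    weights = [[rng.gauss(0.0, 0.5) for _ in range(noise_dim)] for _ in range(num_pixels)]
    biases = [0.0] * num_pixels

    def generate(z):
        # Each pixel is a weighted sum of the noise values, squashed into (-1, 1) by tanh.
        return [math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
                for row, b in zip(weights, biases)]

    return generate

rng = random.Random(42)
generator = make_generator(noise_dim=3, num_pixels=4)
z = [rng.gauss(0.0, 1.0) for _ in range(3)]  # random noise input
image = generator(z)                         # four pixel values forming a 2x2 "image"
print([round(p, 3) for p in image])
```

Feeding in a different noise vector yields a different image, which is how a trained generator can produce endless variations rather than a single output.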

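The second network, the discriminator, is a classifier DNN. It is trained on both real data and the generator’s output, and it rates each sample on a scale of 0 to 1 according to how likely it is to be real. If the generator’s results score too low, the generator adjusts its parameters and produces new data for the discriminator to judge, while the discriminator keeps learning to tell real and generated data apart. The GAN repeats this cycle in rapid succession until the generator can create data that the discriminator scores highly.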
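The cycle described above can be sketched as a toy GAN in plain Python. Everything here is an illustrative assumption rather than Goodfellow’s setup: the “real” data are single numbers drawn from a bell curve centered on 2.0, the generator is just G(z) = a·z + b applied to random noise z, and the discriminator is a tiny logistic model with a squared term so it can notice both where samples sit and how spread out they are. Each round first trains the discriminator on a batch of real and generated samples, then updates the generator with the “non-saturating” loss suggested in the original paper, which rewards it for getting its samples scored as real.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, batch=32, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0          # generator G(z) = a*z + b, with z ~ N(0, 1)
    w, v, c = 0.0, 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + v*x*x + c)

    def D(x):
        # Discriminator's estimated probability that x is real.
        return sigmoid(w * x + v * x * x + c)

    for _ in range(steps):
        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        gw = gv = gc = 0.0
        for _ in range(batch):
            x_real = rng.gauss(2.0, 0.5)             # hypothetical "real" data
            x_fake = a * rng.gauss(0.0, 1.0) + b     # generator output
            for x, target in ((x_real, 1.0), (x_fake, 0.0)):
                err = D(x) - target                  # d(log-loss)/d(logit)
                gw += err * x
                gv += err * x * x
                gc += err
        w -= lr * gw / batch
        v -= lr * gv / batch
        c -= lr * gc / batch

        # Generator step: minimize -log D(G(z)), i.e. try to be scored as real.
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            x = a * z + b
            dlogit_dx = w + 2.0 * v * x              # how D's logit changes with x
            err = D(x) - 1.0                         # d(-log D)/d(logit)
            ga += err * dlogit_dx * z
            gb += err * dlogit_dx
        a -= lr * ga / batch
        b -= lr * gb / batch

    return a, b, D

a, b, D = train_toy_gan()
print(f"generator mean ~ {b:.2f}, spread ~ {abs(a):.2f} (real data: 2.00, 0.50)")
print(f"D(2.0) = {D(2.0):.2f}")
```

Toy runs like this do not always settle neatly: the generator’s parameters can drift or oscillate as the two models chase each other, a small-scale version of the balancing problem GANs are known for.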

The GAN training process is comparable to a cat-and-mouse game, in which the generator tries to slip past the discriminator by fooling it into accepting the generator’s output as authentic.
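In Goodfellow’s 2014 paper, this game is written as a single minimax objective. Here D(x) is the discriminator’s estimate of the probability that x is real, G(z) is the generator’s output for a noise input z, p_data is the distribution of real data, and p_z is the noise distribution:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make V as large as possible by scoring real data near 1 and generated data near 0, while the generator tries to make it as small as possible by pushing D(G(z)) toward 1. The paper shows that for a fixed generator the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), where p_g is the generator’s output distribution, so once p_g matches p_data the discriminator can do no better than answering 0.5 everywhere.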

Generative adversarial networks are perhaps best represented in a video from Nvidia that shows its GANs in action, creating photos of non-existent celebrities. Not all the photos the AI creates are perfect, but some of them look impressively real.


The applications of GAN

Generative adversarial networks have already shown their worth in creating and modifying imagery. Nvidia (which has certainly taken a keen interest in this new AI technique) recently unveiled a research project that uses GANs to correct images and reconstruct obscured parts.

There are many practical applications for GAN. For instance, it can be used to create random interior designs to give decorators fresh ideas. It can also be used in the music industry, where artificial intelligence has already made inroads, by creating new compositions in various styles, which musicians can later adjust and perfect.


But the applications of GAN stretch beyond creating realistic-looking photos, videos, and works of art. It can help speed up research and progress in several areas where AI is involved. It will also be a key component of unsupervised learning, the branch of machine learning in which AI learns from unlabeled data and discovers patterns and rules on its own.

GAN can be crucial in areas where access to quality data is difficult or expensive. For instance, self-driving cars might use GANs in the future to train without having to drive millions of miles on real roads. After accumulating enough training data, they can then use the technique to create their own imaginary road conditions and scenarios and learn to handle them. In the same manner, a robot designed to navigate a factory floor can use GANs to create and navigate through imaginary work conditions without actually moving across the factory floor and running into real obstacles.

In this regard, GANs might prove to be an important step toward inventing a form of general AI, artificial intelligence that can mimic human behavior and make decisions and perform functions without having a lot of data. (On a side note, my opinion is that instead of chasing general AI, we should focus on enhancing our current weak AI algorithms. GANs are perfect for the task, as it happens.)

There are also applications for GAN in medicine, where it can help produce training data for AI algorithms without the need to collect personally identifiable information (PII) from patients. This can be a boon to areas such as drug research and discovery, which rely heavily on data that is sensitive, expensive, and hard to obtain. It can also be key to continuing AI innovation as new privacy and data protection rules put severe restrictions on how companies can collect and use data from customers and patients.

This will not only be important in health care, but also in other domains that require personal data, such as online shopping, streaming, and social media.

The limitations of GAN

Although generative adversarial networks have proven to be a brilliant idea, they’re not without their limits. First, GANs show a form of pseudo-imagination. Depending on the task they’re performing, GANs still need a wealth of training data to get started. For instance, without enough pictures of human faces, the celebrity-generating GAN won’t be able to come up with new faces. This means that GANs can’t be used in areas where such data is unavailable.

GANs can’t invent totally new things. You can only expect them to combine what they already know in new ways.

Also, at this stage, handling GANs is still complicated. If there’s no balance between the generator and the discriminator, results can quickly get weird. For instance, if the discriminator is too weak, it will accept anything the generator produces, even if it’s a dog with two heads or three eyes. On the other hand, if the discriminator is much stronger than the generator, it will constantly reject the results, resulting in an endless loop of disappointing data. And if the network is not tuned correctly, it will end up producing results that are too similar to each other, a problem known as mode collapse. Engineers must constantly optimize the generator and discriminator networks sequentially to avoid these effects.
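One way to watch for the imbalances described above is to log a few simple statistics during training. The sketch below is a hypothetical monitor, not a standard tool: the function name `diagnose_gan`, the 0.9/0.8/0.2 thresholds, and the use of sample spread as a stand-in for diversity are all illustrative assumptions. It looks at the discriminator’s average scores on real and generated samples, and compares how varied the generated samples are with how varied the real ones are.

```python
import statistics

def diagnose_gan(d_real, d_fake, real_samples, fake_samples,
                 strong_gap=0.9, weak_fake_score=0.8, min_diversity=0.2):
    """Flag the three imbalance symptoms; all thresholds are hypothetical rules of thumb.

    d_real / d_fake: discriminator scores (0 to 1) on a batch of real and generated samples.
    real_samples / fake_samples: the samples themselves (at least two of each).
    """
    issues = []
    mean_real, mean_fake = statistics.mean(d_real), statistics.mean(d_fake)
    if mean_real - mean_fake > strong_gap:
        issues.append("discriminator too strong: generated samples are almost always rejected")
    if mean_fake > weak_fake_score:
        issues.append("discriminator too weak: generated samples are almost always accepted")
    real_spread = statistics.stdev(real_samples)
    if real_spread > 0 and statistics.stdev(fake_samples) / real_spread < min_diversity:
        issues.append("possible mode collapse: generated samples are much less varied than real data")
    return issues

real = [1.0, 2.5, 3.1, 4.8, 0.2, 2.2]
collapsed = [2.01, 2.02, 1.99, 2.00, 2.01, 2.00]  # nearly identical outputs
for issue in diagnose_gan([0.99, 0.98, 0.99], [0.02, 0.01, 0.03], real, collapsed):
    print(issue)
```

In this made-up batch, the discriminator rejects almost everything and the generator keeps producing nearly the same value, so both the “too strong” and “mode collapse” warnings fire.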

The potentially negative uses of GANs

As with all breakthrough technologies, generative adversarial networks can serve evil purposes too. The technique is still too complicated and unwieldy to become attractive to malicious actors, but it’s only a matter of time before that happens. We’ve already seen this happen to deep learning. Widely available, easy-to-use deep learning applications that synthesize images and videos recently triggered a wave of AI-doctored photos and videos, which raised concerns over how criminals can use the technology for scams, fraud, and fake news.

GANs had no part in that episode, but it is easy to imagine how they could contribute to the practice by helping scammers generate the images they need to enhance their AI algorithms without having to obtain many pictures of the victim. GANs can also be used to find weaknesses in other AI algorithms. For instance, if a security solution uses AI to detect cybersecurity threats and malicious activities, a GAN can help find the patterns that slip past its defenses.

GANs can also inflict real harm in areas where AI interacts with the physical world. In the same way that the technique can train the AI algorithms that enable self-driving cars to analyze their surroundings, it can ferret out and exploit their weaknesses. For instance, it can help find patterns that will fool self-driving cars into missing obstacles or misreading street signs.
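The idea of “patterns that fool” a model can be shown without a GAN at all. The sketch below uses a simpler, related technique, a gradient-sign perturbation (the “fast gradient sign method” described by Goodfellow and colleagues in research on adversarial examples), against a made-up linear “stop sign” detector. The weights, the four input features, and the 0.25 step size are all hypothetical; the point is only that small, targeted nudges to every input can flip a model’s decision.

```python
import math

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def score(weights, bias, x):
    # Hypothetical detector's probability that x is a stop sign.
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def gradient_sign_attack(weights, bias, x, true_label, epsilon):
    """Nudge every feature by epsilon in the direction that increases the model's loss."""
    p = score(weights, bias, x)
    # For a logistic model, d(log-loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]

# Invented weights standing in for a trained detector, and a genuine stop sign's features.
weights = [1.5, -2.0, 1.0, 0.5]
bias = -0.5
x = [0.6, 0.1, 0.5, 0.4]

x_adv = gradient_sign_attack(weights, bias, x, true_label=1, epsilon=0.25)
print(round(score(weights, bias, x), 3), round(score(weights, bias, x_adv), 3))  # 0.711 0.413
```

Each feature moves by at most 0.25, yet the score falls below 0.5, so the detector no longer reports a stop sign. With real images the same budget is spread across thousands of pixels, which is why such changes can be nearly invisible to people; GAN-based attacks in the research literature instead train a generator to produce fooling inputs like these.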

In fact, Goodfellow, who is now a scientist at Google Research, is well aware of the risks that his invention poses and is now heading a team of researchers whose task is to find ways to make machine learning and deep learning more secure. In an interview with MIT Technology Review, Goodfellow warned that AI might follow in the footsteps of previous waves of innovation, in which security, privacy, and other risks were not given serious consideration and resulted in disastrous situations.

“Clearly, we’re already beyond the start,” he told Tech Review, “but hopefully we can make significant advances in security before we’re too far in.”

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.

Published June 29, 2020 — 14:03 UTC