For a couple of thousand dollars a month, you can now reserve the capacity of a single Nvidia HGX H100 GPU through a company called CoreWeave. The H100 is the successor to the A100, the GPU that was instrumental in training the large language models (LLMs) behind ChatGPT. Prices start at $2.33 per hour, which works out to about $56 per day or roughly $20,000 a year; by comparison, a single HGX H100 costs about $28,000 on the open market (NVH100TCGPU-KIT), and less wholesale.
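As a rough check of those figures, the short sketch below converts the hourly rate into monthly, daily and yearly costs and estimates how long you would have to rent before matching the purchase price. It assumes round-the-clock usage at the $2.33 starting rate and a 30-day month, and it ignores storage, networking and taxes.

```python
# Figures quoted in the article
HOURLY_RATE_USD = 2.33       # CoreWeave HGX H100 starting price per hour
PURCHASE_PRICE_USD = 28_000  # approximate open-market price of one HGX H100


def rental_cost(hourly_rate: float, hours_per_day: int = 24, days: int = 1) -> float:
    """Cost of renting continuously for the given number of days."""
    return hourly_rate * hours_per_day * days


daily = rental_cost(HOURLY_RATE_USD)
monthly = rental_cost(HOURLY_RATE_USD, days=30)   # assumes a 30-day month
yearly = rental_cost(HOURLY_RATE_USD, days=365)
breakeven_days = PURCHASE_PRICE_USD / daily       # days of rental that equal the purchase price

print(f"Per day:   ${daily:,.2f}")
print(f"Per month: ${monthly:,.2f}")
print(f"Per year:  ${yearly:,.2f}")
print(f"Days of rental to match the purchase price: {breakeven_days:.0f}")
```

At full utilization the rental overtakes the card's sticker price in well under two years, before counting the power, cooling and hosting that ownership would add.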

You will pay more at spot prices ($4.76 per hour), and while a cheaper SKU (the HGX H100 PCIe, as opposed to the NVLink model) is listed, it cannot be ordered yet. A valid GPU instance configuration must include at least one GPU, at least one vCPU and at least 2GB of RAM. When deploying a Virtual Private Server (VPS), the configuration must also include at least 40GB of NVMe-tier root disk storage.
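Those minimum requirements can be expressed as a simple validation check. This is a hypothetical sketch: `InstanceConfig` and `validate` are illustrative names, not part of any CoreWeave API; only the thresholds come from the text above.

```python
from dataclasses import dataclass


@dataclass
class InstanceConfig:
    """Hypothetical description of a GPU instance request (not a real CoreWeave type)."""
    gpus: int
    vcpus: int
    ram_gb: int
    is_vps: bool = False
    root_disk_nvme_gb: int = 0


def validate(config: InstanceConfig) -> list[str]:
    """Return a list of violated minimums; an empty list means the config is valid."""
    errors = []
    if config.gpus < 1:
        errors.append("at least one GPU is required")
    if config.vcpus < 1:
        errors.append("at least one vCPU is required")
    if config.ram_gb < 2:
        errors.append("at least 2GB of RAM is required")
    # The 40GB NVMe root disk minimum only applies to VPS deployments
    if config.is_vps and config.root_disk_nvme_gb < 40:
        errors.append("a VPS needs at least 40GB of NVMe root disk")
    return errors


print(validate(InstanceConfig(gpus=1, vcpus=8, ram_gb=64, is_vps=True, root_disk_nvme_gb=40)))
print(validate(InstanceConfig(gpus=1, vcpus=8, ram_gb=64, is_vps=True)))
```

Returning every violation at once, rather than stopping at the first, makes it easier to fix a rejected configuration in one pass.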

The news comes after a flurry of announcements at Nvidia’s GTC 2023, where generative AI was front and center. The technology relies on trained large language models that enable creative work, including writing scholarly papers, a stand-up comedy routine or a sonnet; designing artwork from a block of text; and, in the case of NovelAI, one of CoreWeave’s first clients, composing literature.

Alternatively, you can follow what our peers at Tom’s Hardware have done and run a ChatGPT alternative on your local PC.

Nvidia Monopoly

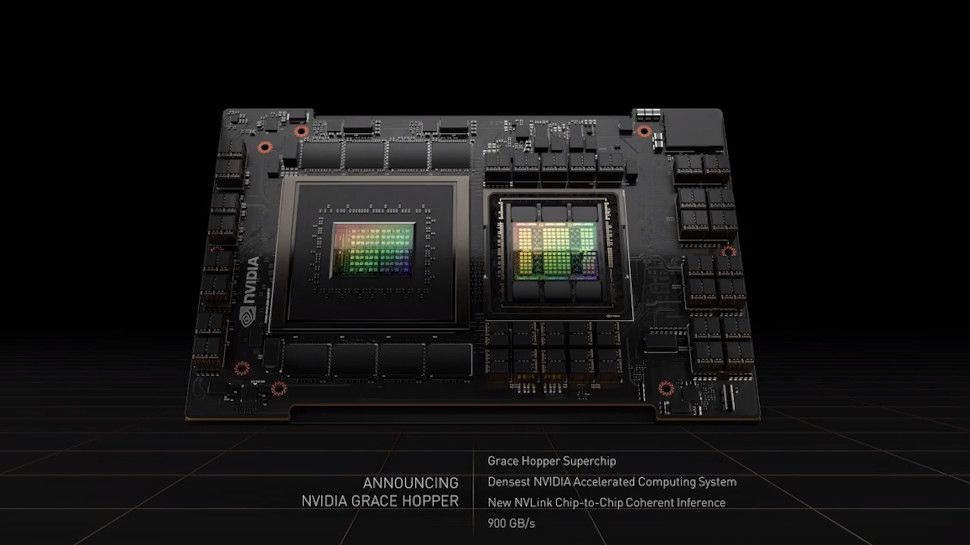

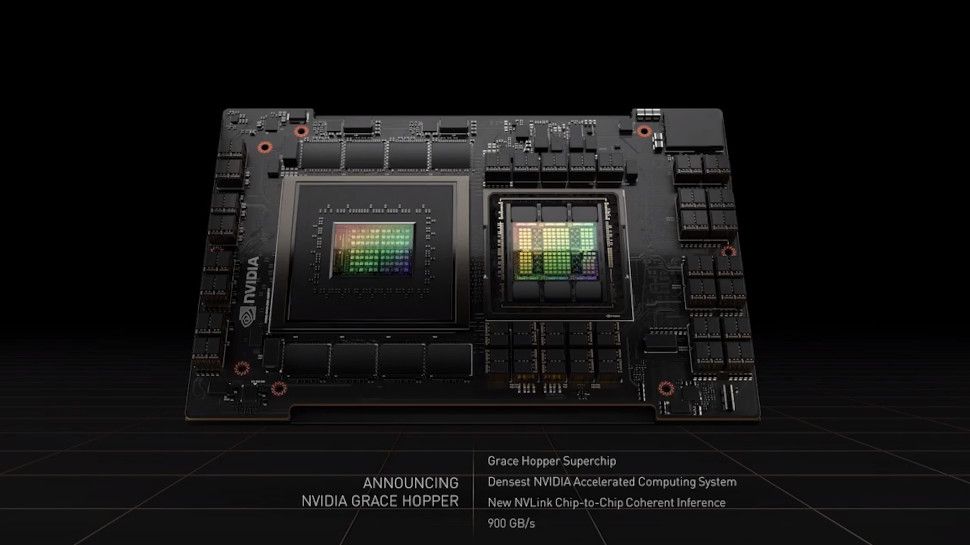

Jensen Huang, founder and CEO of Nvidia, oversaw the launch of several GPUs targeting specific segments of the rapidly expanding AI content market: the L4 for AI video, the L40 for image generation and the H100 NVL (essentially two H100s linked in an SLI-esque setup). Nvidia, which turns 30 in April 2023, wants to capture as much of the market as possible by offering both hardware and software, with an eye on delivering its own hardware-as-a-service.

Nvidia has also unveiled a cloud version of its DGX H100 server, which packs eight H100 GPUs and can be rented for just under $37,000 a month from Oracle, with Microsoft and Google to follow. Although that sounds expensive, bear in mind that the DGX H100 costs more than $500,000 from enterprise vendor Insight, and that excludes the cost of actually running the device (maintenance, colocation, utilities, etc.).
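To put the rent-versus-buy comparison in numbers, here is a minimal break-even sketch. It assumes the rental price is charged per month and uses the two rounded figures quoted above; because it leaves out maintenance, colocation and utilities, the real break-even point for ownership would come later.

```python
import math

DGX_CLOUD_MONTHLY_USD = 37_000  # "just under $37,000", assumed to be a monthly price
DGX_PURCHASE_USD = 500_000      # "more than $500,000" from Insight (hardware only)

# Months of rental that add up to the purchase price, ignoring running costs
months = DGX_PURCHASE_USD / DGX_CLOUD_MONTHLY_USD

print(f"Break-even after about {months:.1f} months ({math.ceil(months)} full months of rent)")
```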

Nvidia’s sudden interest in becoming a service provider in its own right is likely to make its partners slightly uncomfortable. The chair of the TIEA (Taiwan Internet and E-Commerce Association), which brings together some of the biggest names in the tech hardware industry, was candid enough to say yesterday that the move would “incur coopetition” with leading cloud service providers (CSPs) and would likely accelerate their search for an alternative to Nvidia in order to restore some balance.

All eyes are on AMD (with its Instinct MI300 GPU) and Intel, but lurking in the shadows is a roster of challengers (Graphcore, Cerebras, Kneron, IBM and others) that will want a piece of a growing pie.
