What is the master algorithm that allows humans to be so efficient at learning things? That question has perplexed artificial intelligence scientists and researchers who, for decades, have tried to replicate the thinking and problem-solving capabilities of the human brain. The dream of creating thinking machines has spurred many innovations in the field of AI and, most recently, contributed to the rise of deep learning, a family of AI algorithms that roughly mimic the learning functions of the brain.

But as some scientists argue, brute-force learning is not what gives humans and animals the ability to interact with the world shortly after birth. The key is the structure and innate capabilities of the organic brain, an argument that is mostly dismissed in today’s AI community, which is dominated by artificial neural networks.

In a paper published in the peer-reviewed journal Nature Communications, Anthony Zador, Professor of Neuroscience at Cold Spring Harbor Laboratory, argues that a highly structured brain is what allows animals to become very efficient learners. Titled “A critique of pure learning and what artificial neural networks can learn from animal brains,” Zador’s paper explains why scaling up the data-processing capabilities of current AI algorithms will not help reach the intelligence of dogs, let alone humans. What we need, Zador explains, is not AI that learns everything from scratch, but algorithms that, like organic beings, have intrinsic capabilities that can be complemented by learning from experience.

Artificial vs natural learning

Throughout the history of artificial intelligence, scientists have used nature as a guide to developing technologies that can manifest smart behavior. Symbolic artificial intelligence and artificial neural networks have been the two main approaches to developing AI systems since the field’s early days.

“Symbolic AI can be seen as the psychologist’s approach—it draws inspiration from the human cognitive processing, without attempting to crack open the black box—whereas ANNs, which use neuron-like elements, take their inspiration from neuroscience,” writes Zador.

While symbolic systems, in which programmers explicitly define the rules, dominated the first few decades of AI history, today neural networks drive most developments in artificial intelligence.

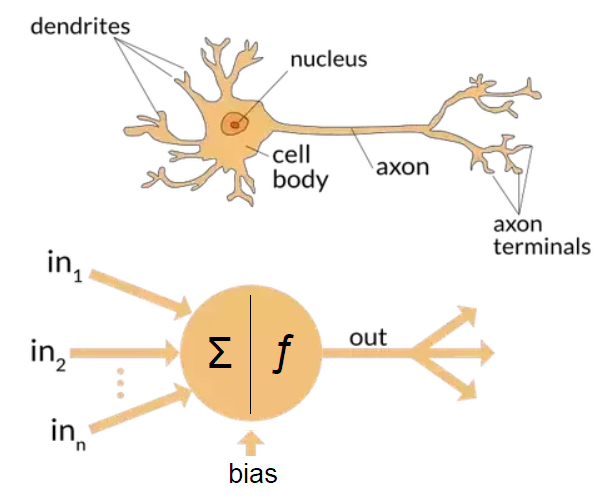

Artificial neural networks are inspired by their biological counterparts and try to emulate the learning behavior of organic brains. But as Zador explains, learning in ANNs is very different from what happens in the brain.

“In ANNs, learning refers to the process of extracting structure—statistical regularities—from input data, and encoding that structure into the parameters of the network,” he writes.

For instance, when you develop a convolutional neural network, you start with a blank slate: an architecture of layers upon layers of artificial neurons connected by random weights. As you train the network on images and their associated labels, it gradually tunes its millions of parameters until it can place each image in its correct category. And the past few years have shown that the performance of neural networks improves as more layers, parameters, and data are added. (In reality, there are many other intricacies involved too, such as tuning hyperparameters, but that would be the topic of another post.)
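The blank-slate training loop described above can be sketched in miniature. This is a simplified stand-in rather than a convolutional network: a single artificial neuron (logistic regression) whose weights start out random and are tuned by gradient descent on a handful of labeled examples. The toy dataset and the helper names `train_neuron` and `predict` are hypothetical, chosen only for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability the neuron assigns to class 1 for input x."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_neuron(data, epochs=200, lr=0.1, seed=0):
    """Start from random weights (the 'blank slate') and tune them on labeled examples."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(len(data[0][0]))]
    bias = rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, label in data:
            # For log-loss, the gradient w.r.t. the pre-activation is (prediction - label).
            error = predict(weights, bias, x) - label
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Toy labeled data: the label is 1 when the first feature exceeds the second.
labeled = [([2.0, 1.0], 1), ([3.0, 0.5], 1), ([1.0, 2.0], 0), ([0.5, 3.0], 0)]
w, b = train_neuron(labeled)
accuracy = sum((predict(w, b, x) > 0.5) == (y == 1) for x, y in labeled) / len(labeled)
print(f"training accuracy: {accuracy:.2f}")
```

A real CNN follows the same pattern at vastly larger scale: random initialization, labeled data, and many small parameter updates.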

There are some similarities between artificial and biological neural networks, such as the way ANNs extract low- and high-level features from images.
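To illustrate what a “low-level feature” means, the sketch below applies a small convolution kernel to a tiny image and produces a feature map that responds where a vertical edge sits. The kernel is hand-picked for clarity, which is an assumption of this example; in a trained CNN, kernels like this are learned from data, and deeper layers combine such responses into higher-level features.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (no padding, stride 1), the core operation of a conv layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x5 image: dark on the left, bright on the right, i.e. one vertical edge.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-picked vertical-edge detector; a trained CNN would learn its kernels instead.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The non-zero column of the feature map marks where brightness jumps from left to right; everywhere else the response is zero.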

But when it comes to humans and animals, learning finds a different meaning. “The term ‘learning’ in neuroscience (and in psychology) refers to a long-lasting change in behavior that is the result of experience,” Zador writes.

The differences between artificial and natural learning are not limited to definition. In supervised learning, in which neural nets are trained on hand-labeled data (such as the example mentioned above), these differences become more acute.

“Although the final result of this training is an ANN with a capability that, superficially at least, mimics the human ability to categorize images, the process by which the artificial system learns bears little resemblance to that by which a newborn learns,” Zador observes.

Children mostly learn by exploring their world on their own, without the need for much instruction, while supervised algorithms, which remain the dominant form of deep learning, require millions of labeled images. “Clearly, children do not rely mainly on supervised algorithms to learn to categorize objects,” Zador writes.

There’s ongoing research on unsupervised and self-supervised AI algorithms that can learn representations with little or no guidance from humans. But the results are still rudimentary and fall short of what supervised learning has achieved.

Why unsupervised learning is not enough

“It is conceivable that unsupervised learning, exploiting algorithms more powerful than any yet discovered, may play a role establishing sensory representations and driving behavior. But even such a hypothetical unsupervised learning algorithm is unlikely to be the whole story,” Zador writes.

For instance, most newborn animals learn their key skills (walking, running, jumping) so quickly (within weeks, days, or even hours) that it would be impossible through pure unsupervised learning on a blank-slate neural network. “A large component of an animal’s behavioral repertoire is not the result of clever learning algorithms—supervised or unsupervised—but rather of behavior programs already present at birth,” Zador writes.

At the same time, innate abilities alone do not enable animals to adapt to their ever-changing environments. That’s why animals also have the capacity to learn.

And there’s a tradeoff between the two. Too much innateness and too little learning will get you on your feet faster and help you go about your evolutionary duties of surviving in your environment and passing on your genes to the next generation. But it will deprive you of the flexibility to adapt to changes in your environment (weather, natural disasters, disease, etc.). On the other hand, a good learner with few innate capabilities will spend most of its early life in a state of total vulnerability, but will end up being smarter and much more resourceful than other beings. This explains why human babies need about a full year before taking their first steps, while kittens learn to walk a month after birth.

Innate and learning abilities complement each other. For instance, the brains of human children are wired to distinguish faces from other things. Then, throughout their lives, they learn to associate specific faces with people they know. Squirrels, on the other hand, have an innate skill for remembering where they have buried things, and according to some studies, can memorize the exact locations of thousands of nut caches.

These innate mechanisms and the predisposition to learn specific things are what Zador calls “Nature’s secret sauce,” encoded in the genome.

“Specifically, the genome encodes blueprints for wiring up their nervous system,” Zador writes in his paper. “These blueprints have been selected by evolution over hundreds of millions of years, operating on countless quadrillions of individuals. The circuits specified by these blueprints provide the scaffolding for innate behaviors, as well as for any learning that occurs during an animal’s lifetime.”

So, what exactly does the genome contain? The answer varies across organisms. In very simple organisms such as worms, the genome specifies the minute details of the entire neural wiring. But for a complex system such as the human brain, which has approximately 100 billion neurons and 100 trillion synapses, there’s no way to encode everything in the genome, which holds only about 1 gigabyte of information.
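A back-of-the-envelope calculation shows why an explicit wiring diagram cannot fit. It uses the figures from the paragraph above and assumes, as a deliberate simplification, that each synapse only needs to record which neuron it connects to, ignoring synaptic strengths entirely.

```python
import math

# Figures quoted in the text (orders of magnitude only).
NEURONS = 100e9        # ~100 billion neurons
SYNAPSES = 100e12      # ~100 trillion synapses
GENOME_BYTES = 1e9     # ~1 gigabyte of genomic information

# Simplifying assumption: a wiring diagram only needs to name the target neuron of
# each synapse, which takes log2(NEURONS) bits per synapse.
bits_per_synapse = math.log2(NEURONS)
wiring_bytes = SYNAPSES * bits_per_synapse / 8

print(f"bits to name one neuron: {bits_per_synapse:.1f}")
print(f"explicit wiring diagram: about {wiring_bytes / 1e12:,.0f} TB")
print(f"that is about {wiring_bytes / GENOME_BYTES:,.0f} times the genome's capacity")
```

Even under this generous assumption, the wiring diagram comes out to hundreds of terabytes, several hundred thousand times more than the genome can hold.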

“In most brains, the genome cannot specify the explicit wiring diagram, but must instead specify a set of rules for wiring up the brain during development,” Zador says.
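The difference between specifying a wiring diagram and specifying a wiring rule can be sketched with a toy rule. The ring layout and the `radius` parameter below are hypothetical and not a model of any real nervous system; the point is only that a fixed, compact rule can generate connectivity for circuits of any size.

```python
def wire_by_rule(n_neurons, radius):
    """Generate connections from a compact rule: neurons sit on a ring, and each one
    connects to every neuron within `radius` positions on either side.
    Assumes 2 * radius < n_neurons so no connection is listed twice."""
    connections = []
    for i in range(n_neurons):
        for offset in range(1, radius + 1):
            connections.append((i, (i + offset) % n_neurons))
            connections.append((i, (i - offset) % n_neurons))
    return connections


genome = {"radius": 3}  # the entire "genome" for this toy circuit is one number
for n in (10, 1_000, 10_000):
    wiring = wire_by_rule(n, **genome)
    print(f"{n:>6} neurons -> {len(wiring):>6} connections, specified by {len(genome)} parameter")
```

The number of connections grows with the size of the circuit, while the description that generates them stays the same size.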

Evolution vs learning

So, biological brains have two mechanisms for optimizing behavior. On the one hand, they have the capacity to learn, which enables each individual of a species to develop its own specific behavior and fine-tune it based on its lifetime experiences. On the other hand, all individuals of a species share a rich set of innate abilities that are ingrained in their genome. “The genome doesn’t encode representations or behaviors directly; it encodes wiring rules and connection motifs,” Zador writes.

The genome itself is not constant either. It undergoes small changes and mutations as it is passed from one generation to the next. Across thousands and millions of generations, natural selection ensures that beneficial changes survive and harmful ones are eliminated. In this respect, it’s fair to say that the genome, too, goes through optimization and enhancement, though on a much longer timescale than the life of an individual.

Zador visualizes these two optimization mechanisms as two concentric loops: the outer evolution loop and the inner learning loop.
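The two nested loops can be sketched as a toy simulation. This is an illustration under heavy simplifying assumptions, not Zador’s model: the “genome” is a single innate starting weight, a “lifetime” is three gradient steps toward an environment-specific target, and fitness is how well an individual performs after learning. Unlike a real genome, which encodes wiring rules rather than behavior, this genome encodes the starting weight directly. All names and numbers are hypothetical.

```python
import random


def lifetime_learning(innate_weight, target, steps=3, lr=0.2):
    """Inner loop: start from the innate weight, learn for a short lifetime,
    and return the squared error that remains at the end."""
    w = innate_weight
    for _ in range(steps):
        w -= lr * 2 * (w - target)  # gradient step on (w - target) ** 2
    return (w - target) ** 2


def evolve(generations=50, population=20, seed=0):
    """Outer loop: selection and mutation act on the innate weight across generations.
    Returns the population's mean innate weight at the end."""
    rng = random.Random(seed)
    genomes = [rng.uniform(-10, 10) for _ in range(population)]
    for _ in range(generations):
        target = rng.gauss(3.0, 0.2)  # each generation's environment differs slightly
        # Fitness is judged after lifetime learning, not on the innate weight alone.
        ranked = sorted(genomes, key=lambda g: lifetime_learning(g, target))
        parents = ranked[: population // 4]  # the best-adapted quarter reproduces
        genomes = [rng.choice(parents) + rng.gauss(0, 0.3) for _ in range(population)]  # mutation
    return sum(genomes) / len(genomes)


mean_innate = evolve()
print(f"mean innate weight after evolution: {mean_innate:.2f}")
print(f"post-learning error from a blank slate (w = 0): {lifetime_learning(0.0, 3.0):.3f}")
print(f"post-learning error from the evolved start:     {lifetime_learning(mean_innate, 3.0):.3f}")
```

Evolution gradually moves the innate starting point close to the targets that environments typically demand, so each individual’s short lifetime of learning only has to close a small remaining gap.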

Artificial neural networks, on the other hand, have a single optimization mechanism. They start with a blank slate and must learn everything from scratch, which is why they need huge amounts of training time and examples to learn even the simplest things.

“ANNs are engaged in an optimization process that must mimic both what is learned during evolution and the process of learning within a lifetime, whereas for animals learning only refers to within lifetime changes,” Zador observes. “In this view, supervised learning in ANNs should not be viewed as the analog of learning in animals.”

How artificial neural networks must evolve

“The importance of innate mechanisms suggests that an ANN solving a new problem should attempt as much as possible to build on the solutions to previous related problems,” Zador writes.

AI researchers have already devised transfer learning techniques, in which the parameters (weights) of one trained neural network are transferred to another. Transfer learning helps reduce the training time and the number of new data samples required to train a neural network for a new task.
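Transfer learning can be illustrated in its simplest possible setting: a linear model trained by gradient descent. The sketch trains on one task, then uses those weights as the starting point for a related task and compares the number of steps needed against training from zero. The tasks, tolerance, and function names are hypothetical choices for illustration.

```python
def train_linear(data, init, lr=0.05, tol=1e-3, max_steps=10_000):
    """Full-batch gradient descent on mean squared error.
    Returns the learned weights and the number of steps taken to reach loss < tol."""
    w = list(init)
    n = len(data)
    for step in range(max_steps):
        loss = 0.0
        grads = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            loss += err * err / n
            for i, xi in enumerate(x):
                grads[i] += 2 * err * xi / n
        if loss < tol:
            return w, step
        w = [wi - lr * g for wi, g in zip(w, grads)]
    return w, max_steps


def make_task(true_weights):
    """Hypothetical task: labels come from a linear rule with the given weights."""
    inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
    return [(x, sum(a * b for a, b in zip(true_weights, x))) for x in inputs]


task_a = make_task([2.0, -1.0])  # a task the network has already learned
task_b = make_task([2.2, -0.9])  # a related new task

weights_a, _ = train_linear(task_a, init=[0.0, 0.0])
_, steps_scratch = train_linear(task_b, init=[0.0, 0.0])   # blank slate
_, steps_transfer = train_linear(task_b, init=weights_a)   # transfer: reuse task A's weights
print(f"from scratch: {steps_scratch} steps; with transfer: {steps_transfer} steps")
```

Because task A’s solution already sits close to task B’s, the transferred starting point has less distance to cover. The benefit shrinks as the two tasks become less related.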

But transfer learning between artificial neural networks is not analogous to the kind of information passed between animals and humans through genes. “Whereas in transfer learning the ANN’s entire connection matrix (or a significant fraction of it) is typically used as a starting point, in animal brains the amount of information ‘transferred’ from generation to generation is smaller, because it must pass through the bottleneck of the genome,” Zador writes.
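One way to get a feel for the genomic bottleneck is to treat it as lossy compression. The sketch below is an analogy, not the mechanism Zador describes: instead of copying a full 50×50 weight matrix, it keeps only a rank-1 factorization computed by power iteration, transferring 101 numbers instead of 2,500. The example matrix is made up; the point is that squeezing weights through a narrow channel keeps only their dominant structure.

```python
import math


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def rank1_compress(w, iters=100):
    """Keep only the leading singular triplet (s, u, v) of w, found by power iteration,
    so w is approximated by s * outer(u, v)."""
    wt = transpose(w)
    v = [1.0] * len(w[0])
    for _ in range(iters):
        v = matvec(wt, matvec(w, v))  # multiply by W^T W
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    wv = matvec(w, v)
    s = math.sqrt(sum(x * x for x in wv))  # leading singular value
    u = [x / s for x in wv]
    return s, u, v


def decompress(s, u, v):
    return [[s * ui * vj for vj in v] for ui in u]


# A made-up 50x50 "trained" weight matrix: strong shared structure plus small idiosyncratic detail.
n = 50
weights = [[math.sin(i / 10) * math.cos(j / 10) + 0.01 * math.sin(i * j) for j in range(n)] for i in range(n)]

s, u, v = rank1_compress(weights)  # what passes through the "bottleneck"
approx = decompress(s, u, v)

err = math.sqrt(sum((a - b) ** 2 for ra, rb in zip(weights, approx) for a, b in zip(ra, rb)))
total = math.sqrt(sum(a * a for row in weights for a in row))
relative_error = err / total
print(f"full transfer: {n * n} numbers; bottlenecked transfer: {len(u) + len(v) + 1} numbers")
print(f"relative reconstruction error: {relative_error:.3f}")
```

The small idiosyncratic detail is discarded while the shared structure survives, loosely echoing the idea that a bottleneck can act as a regularizer.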

Another thing currently missing from artificial neural networks is architectural optimization. Through blind evolutionary mechanisms, genomes learn to optimize the structure and wiring rules of the brain to better solve the specific problems of each species. ANNs, on the other hand, are limited to optimizing their parameters. They have no recursive self-improvement mechanism that would enable them to create better algorithms. Changes to their architecture must come from outside (or from limited automated tuning techniques such as grid search and AutoML).
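Grid search, mentioned above, exhaustively evaluates every combination from a hand-specified set of hyperparameter values and keeps the best one. A person still has to choose the search space in advance, which is the kind of outside intervention the paragraph describes. The toy objective inside `train_and_score` is a hypothetical stand-in for training a real network.

```python
import itertools


def train_and_score(lr, steps):
    """Hypothetical stand-in for training a model: run gradient descent on the
    loss (w - 3)^2 and return the final loss (lower is better)."""
    w = 0.0
    for _ in range(steps):
        w -= lr * 2 * (w - 3.0)
    return (w - 3.0) ** 2


# The search space is fixed in advance by a person.
grid = {"lr": [0.001, 0.01, 0.1, 1.1], "steps": [10, 100]}

results = {
    (lr, steps): train_and_score(lr, steps)
    for lr, steps in itertools.product(grid["lr"], grid["steps"])
}
best = min(results, key=results.get)
print(f"best hyperparameters: lr={best[0]}, steps={best[1]}")
```

Here a learning rate of 1.1 makes training diverge, and the search simply ranks it last; grid search cannot propose anything outside the grid, such as a different model architecture.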

The one thing that might resemble the evolutionary optimization of the genome is the invention of different ANN architectures such as convnets, recurrent neural networks, long short-term memory networks, capsule networks, Transformers, and others. These architectures have helped create networks that efficiently solve different problems. But they’re not exactly what the genome does.

“All these new architectures are impressive, but I’m not sure how analogous they are to the architectures that emerge through evolution,” Zador told TechTalks in written comments. “The key in evolution is that architectures need to be compressed into a genome. This ‘genomic bottleneck’ acts as a regularizer and forces the system to capture the essential elements of any architecture.”

Other scientists have suggested combining neural networks with other AI techniques, such as symbolic reasoning systems. This hybrid AI approach has proven to be much more data-efficient than pure neural networks and is currently the focus of several research groups, such as the MIT-IBM Watson AI Lab.

Zador, however, is skeptical about this approach and believes that artificial neural networks stand a better chance of achieving artificial intelligence. “Although the processing elements of ANNs are simpler than real neurons—e.g., they lack dendrites—I think they’re probably close enough. The fact that ANNs are universal approximators is suggestive,” he said in his comments.
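Universal approximation means that a network with a single hidden layer can approximate any continuous function on a bounded interval as closely as desired, given enough hidden units. The sketch below illustrates this constructively for one input dimension with ReLU units: it sets the weights by hand so the network linearly interpolates the target function, rather than training them. The helper names and the choice of `sin` as the target are assumptions of this example.

```python
import math


def relu(z):
    return max(0.0, z)


def build_relu_net(f, a, b, hidden_units):
    """Construct (not train) a one-hidden-layer ReLU network that linearly interpolates f
    at hidden_units + 1 evenly spaced knots on [a, b]."""
    knots = [a + (b - a) * k / hidden_units for k in range(hidden_units + 1)]
    slopes = [(f(knots[k + 1]) - f(knots[k])) / (knots[k + 1] - knots[k]) for k in range(hidden_units)]
    # Hidden unit k switches on at knot k; its output weight is the change in slope there.
    out_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, hidden_units)]
    bias = f(a)

    def net(x):
        return bias + sum(c * relu(x - t) for c, t in zip(out_weights, knots[:-1]))

    return net


errors = {}
xs = [math.pi * i / 1000 for i in range(1001)]
for units in (5, 20, 80):
    net = build_relu_net(math.sin, 0.0, math.pi, units)
    errors[units] = max(abs(net(x) - math.sin(x)) for x in xs)
    print(f"{units:>2} hidden units -> max error {errors[units]:.5f}")
```

The maximum error shrinks as hidden units are added. Note that the theorem only guarantees that suitable weights exist; it says nothing about whether training will find them.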

He does, however, close his paper by reminding us that, at the end of the day, the study of animal brains might not be the entire answer to the AI question.

“What is sometimes misleadingly called ‘artificial general intelligence’ is not general at all; it is highly constrained to match human capacities so tightly that only a machine structured similarly to a brain can achieve it,” he writes.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for.
