
You won’t need to wait long for the ChatGPT Mac Desktop app to get all of these new features, including more support for “Working with Apps” and the arrival of “Advanced Voice Mode,” as it’s being pushed to the app now. Just be sure your Mac ChatGPT app is up to date.

It’ll be coming to the Windows app soon, but no exact time frame was given.

That concludes Day 11 of 12 Days of OpenAI in terms of news, but Kevin did tease some big news for tomorrow. And rather than closing out day eleven with a joke, we got a saxophone solo.

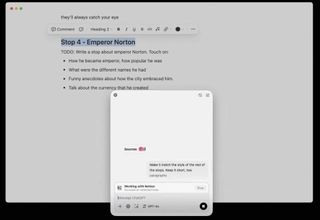

When it comes to using ChatGPT for writing, OpenAI announced support today for Apple Notes, Quip, and Notion. That functionality should roll out today, and in a live demo during day 11, the team walked through a Notion document in which they were creating a guide for a walking tour.

With the new functionality, ChatGPT works directly with Notion. The live demo focused on selected text within the document, with the task to “fill out these talking points.” You can also turn on ChatGPT’s Search functionality when generating the response (in this case, talking points on Emperor Norton) so that it provides citations to its sources for the generated text. It’s basically showing its work.

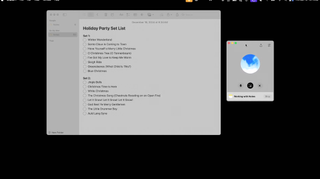

Beyond just selecting text, copying it in, and then copying the result back out with a final paste, ChatGPT is debuting support for Advanced Voice Mode here. In this demo, the team opened a “Holiday Party Set List” in Apple Notes and asked Santa, via ChatGPT, for feedback on the proposed songs. It can even tell you if you did something wrong, in this case writing “Freezy the Snowman” instead of “Frosty the Snowman.”

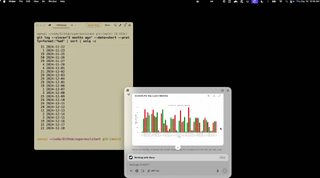

Beyond viewing and understanding the app, it’s still ChatGPT and can use the various functions and features the AI and its model can perform. One example within Warp is that ChatGPT can see what you see on screen and also view the rest of the app’s contents, so it can read a long list of code without you needing to scroll through it. Neat.

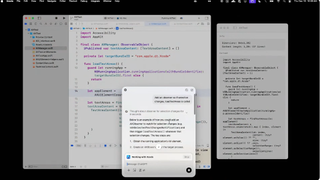

Unlike the Windows Recall feature, which records your activity and builds a library you can look back through, ChatGPT is about working with apps. In another demo, the OpenAI team used ChatGPT with Xcode, letting it work within Apple’s development application. Here you can ask it to help generate code or solve a problem.

In this demo, OpenAI asked ChatGPT for help with code within Xcode and then pasted the code it delivered into Xcode. Another new feature, approved on the spot, is the ability for ChatGPT to put the code it creates directly into Xcode, which could end up streamlining some workflows.

Shortly after that new feature was greenlit, ChatGPT’s code failed twice during the live demo. On the third try, the OpenAI team did get the code to run successfully.

It’s day 11, and Kevin, OpenAI’s chief product lead, is kicking things off by discussing desktop apps with two colleagues dressed to the nines in Christmas-themed blazers. But the bigger idea is a shift toward ‘agentic’ AI, meaning ChatGPT can do a lot more for you and on your behalf.

For instance, with the ChatGPT desktop app on the Mac, you can easily access it as a floating window layered on your desktop. Desktop apps will likely be the focus of day 11, with hints of ‘Agentic.’

In this demo of the ChatGPT app for the Mac, you can let the AI view and get context for one of your apps. When you select it through ‘Working with Apps,’ it will be able to view it and assist. What is new today is that Warp is working within this flow.

Day 11: Time for an update to image generation?

Yesterday we had a really intriguing announcement from OpenAI: you can now text ChatGPT through WhatsApp, which is a pretty convenient way of using it. This was quite a surprise, since WhatsApp is owned by Meta, which has its own Meta AI.

Since yesterday’s announcement was about ChatGPT, we’re guessing that today’s will be about something else entirely. However, there still haven’t been any upgrades to the DALL-E 3 image generator that is part of ChatGPT, so our money is on something image-related today.

Welcome to day 11 of ’12 Days of OpenAI’

Welcome to the penultimate day of ’12 Days of OpenAI’! It’s starting to feel like OpenAI’s product announcements have been going on for most of December at this point, but we’re getting very close to the end now and the big announcement on day 12. What will it be? We still have one more day to go before we get there, and we’re still wondering what we’ll get today, so don’t forget to come back at 10am PT for another exciting announcement from OpenAI.

ChatGPT text calls will work with WhatsApp

Basically, you can chat with ChatGPT through your WhatsApp account. Scanning the QR code shown during the stream takes you directly to the ChatGPT experience inside WhatsApp.

These conversations are text-based only, and you do not need a ChatGPT account to make it work.

It’s worth noting that the voice Call ChatGPT feature is for the US only.

The text-based WhatsApp option is how international users will use Call ChatGPT.

Any phone will work

While the demo started with an iPhone, the small group of OpenAI engineers soon showed how you can call ChatGPT with any phone, including a classic flip phone and then an old-school rotary phone (ask your grandparents).

The actual toll-free number, by the way, is 1-800-242-8478.

Anyone can get 15 minutes of free ChatGPT calls. For more time, you’ll need to create a ChatGPT account.

Call ChatGPT today

OpenAI is bringing ChatGPT to your telephone. The team suggested adding ChatGPT to your contacts so you can, yes, call ChatGPT. This is quite a change for the AI company; it’s very old school. You can call ChatGPT toll-free and chat with it by voice.

The number, by the way, if you want to play along, is 1-800-CHATGPT.
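If you’re wondering how 1-800-CHATGPT lines up with the toll-free number 1-800-242-8478, the letters simply map to digits on a standard phone keypad. Here’s a minimal sketch, assuming the usual ITU E.161 letter layout (2 = ABC through 9 = WXYZ); the function name is our own, for illustration only.

```python
# Standard phone keypad letter groups (ITU E.161 layout)
KEYPAD = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
}

# Invert the table so each letter points at its keypad digit
LETTER_TO_DIGIT = {letter: digit for digit, letters in KEYPAD.items() for letter in letters}


def vanity_to_digits(number: str) -> str:
    """Hypothetical helper: swap letters for keypad digits, leaving digits and dashes as-is."""
    return "".join(LETTER_TO_DIGIT.get(ch.upper(), ch) for ch in number)


print(vanity_to_digits("1-800-chatgpt"))  # prints 1-800-2428478
```

C, H, A, T, G, P, T come out as 2, 4, 2, 8, 4, 7, 8, which is the same 242-8478 you’d dial.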

Get using ChatGPT Projects

Back on day six of 12 Days of OpenAI, the company announced ChatGPT Projects, and if you’re the sort of person who loves to stay organized, you’re going to love using it. Luckily for you, TechRadar writer Eric Hal Schwartz has whipped up a handy guide to using ChatGPT Projects. Enjoy!

Welcome to day 10!

Welcome to day 10 of ’12 Days of OpenAI’! After yesterday’s developer-heavy announcements we’re expecting something a bit more consumer-friendly today. What will it be? Tune in at 10am PT to find out.

Next up is fine-tuning, and the API now supports preference fine-tuning alongside supervised fine-tuning. So, for day nine, OpenAI ushered in the o1 model in the API, as well as Realtime API enhancements and fine-tuning updates.
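The difference between the two fine-tuning types is easiest to see in the training data. With supervised fine-tuning you show the model one ideal reply to imitate; with preference fine-tuning you show it a better and a worse reply to the same prompt. The sketch below builds one record of each, assuming the JSON Lines record shapes described in OpenAI’s fine-tuning guide at the time of writing (`messages` for supervised; `input`, `preferred_output` and `non_preferred_output` for preference). The helper names and sample text are hypothetical, so check OpenAI’s current documentation before relying on the field names.

```python
import json


def supervised_example(prompt: str, answer: str) -> dict:
    """Hypothetical helper: supervised record with one ideal reply to imitate."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    }


def preference_example(prompt: str, preferred: str, rejected: str) -> dict:
    """Hypothetical helper: preference record pairing a better and a worse reply."""
    return {
        "input": {"messages": [{"role": "user", "content": prompt}]},
        "preferred_output": [{"role": "assistant", "content": preferred}],
        "non_preferred_output": [{"role": "assistant", "content": rejected}],
    }


record = preference_example(
    "Suggest a name for a holiday playlist.",
    "Sleigh Bells and Saxophones",
    "Playlist 1",
)

# Training files are JSON Lines: one JSON object per line
jsonl_line = json.dumps(record)
print(jsonl_line)
```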

A few more minor things shipped today as well: new Go and Java SDKs, a refreshed flow for creating an API key, and OpenAI dev talks published on YouTube. OpenAI is also hosting an AMA on the OpenAI developer forum, starting now and running for the next hour.

And day nine is wrapped. It was still quick, though a bit longer than day eight, but we’ll have to see what day ten brings tomorrow. OpenAI didn’t tease what’s to come, which means anything is on the table.

One piece of good news is that o1 uses 60% fewer tokens than o1-preview, which should mean you get even more value out of the o1 release. It’s rolling out today, though it will take a few weeks to reach all users.

That’s not all, though. OpenAI then turned to the Realtime API, which is all about building voice experiences. It already supports WebSockets, and now WebRTC support, which is built for the internet, is here too.

Rather than an Elf on the Shelf, OpenAI brought out a Fawn on the Lawn with a microcontroller inside it. When the Fawn was plugged into the MacBook Pro, it started with “Merry Christmas, what are you talking about?”, responded that WebRTC sounded a little complicated, and then switched the conversation to giving gifts at Christmas. The takeaway here is that you can create custom voice assistants, which is pretty neat.

It’s time for day nine of the 12 Days of OpenAI, and as teased during day eight, we’re fully expecting a mini developer day of sorts. Right away, it’s confirmed that it’s all about how developers and startups can build on the OpenAI API.

For starters, o1 is launching out of preview in the API today, and based on feedback, function calling, developer messages, and structured outputs are arriving with the model. OpenAI is also launching a “reasoning effort” setting for o1, which lets you tell the AI how long to spend on a task.
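For developers, here’s a rough sketch of what a request using a developer message and the reasoning effort setting might look like. It assumes the Chat Completions request body with a `developer` message role and a `reasoning_effort` field taking `low`, `medium` or `high`; the helper name and prompt text are hypothetical. The sketch only builds the JSON body rather than sending it, so nothing here calls OpenAI’s servers. Check OpenAI’s API reference for the current field names.

```python
import json

# Assumed allowed values for o1's reasoning effort setting
REASONING_EFFORTS = {"low", "medium", "high"}


def build_o1_request(developer_instructions: str, user_prompt: str, effort: str = "medium") -> dict:
    """Hypothetical helper: build (but do not send) a Chat Completions body for o1."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"effort must be one of {sorted(REASONING_EFFORTS)}")
    return {
        "model": "o1",
        "messages": [
            # The developer message carries instructions on how the model should behave
            {"role": "developer", "content": developer_instructions},
            {"role": "user", "content": user_prompt},
        ],
        # How much reasoning the model should spend on the task
        "reasoning_effort": effort,
    }


body = build_o1_request(
    "You review tax forms and list any errors you find.",
    "Check this form for mistakes.",
    effort="high",
)
print(json.dumps(body, indent=2))
```

Validating `effort` locally is just a design choice for the sketch; the API would reject an unsupported value on its own.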

Lastly, o1 has a vision component: in the example, it looks at a tax form and helps detect errors in it. To do this, OpenAI opened up its developer program, and through a “developer message” you can provide instructions on how the model should work. The instructions were written out, the tax forms were uploaded, and o1 analyzed the uploaded images, marked the errors, and provided written answers.

ChatGPT search vs Perplexity

With OpenAI dropping some updates to the ChatGPT search function yesterday, our Senior AI Editor, Graham, thought it would be a good time to compare what ChatGPT’s AI search function can do against Perplexity, which has been doing AI search for a while now. He was quite surprised by the results. Have a read and see what you think.

Today’s more developer-focused announcement will be happening at 10am PT.

Operator agent

With many expecting AI agents (autonomous AI bots that do your bidding) to be a key feature of 2025, we’re still wondering if we’ll see OpenAI release Operator this week. The smart money is on it closing the show on Friday.

Operator would be able to control your browser, which means it could do any task that you currently use your browser for, which, when you think about it, is probably about 90% of what you use your computer for.

Operator is already tipped to land in January, so it’s not out of the bounds of possibility that OpenAI has brought the release forward a few weeks.

Welcome to day nine!

Welcome to day nine of 12 Days of OpenAI! Yesterday was a true Christmas cracker, since we got ChatGPT search released to all ChatGPT users, including those on the free tier. The OpenAI team hinted that today’s announcement would be more developer focussed, but check back at 10am PT and we’ll have the news for you as it happens.

Just as quickly as day 8 of 12 Days of OpenAI began, it’s already come to a close. ChatGPT Search was the focus, with some significant enhancements and a much larger rollout for logged-in free users globally, where ChatGPT is available. Much like Canvas, you’ll need a free account to use ChatGPT search and get high rate limits.

Kevin also teased that tomorrow, Day 9, will be a mini developer day, so expect the focus to be less on consumer features and more on developer tools.

Beyond rolling out ChatGPT search to even more users, OpenAI is also integrating the feature more seamlessly into its Android and iOS mobile apps. When you ask a question, say, about restaurants in a specific area, as the OpenAI team demoed during the reveal, it lists the results inline. You can also have a more natural conversation about the results to find what you’re truly after. It’s pretty neat.

Once you find a restaurant in the ChatGPT app for iOS, you can also get directions, since Apple Maps is integrated.

Also, within the mobile app, you can talk with ChatGPT using voice mode, and it will weave search results and broader web information into its responses. For instance, if you’re asking about a Christmas market, you can get more specific and ask about its hours and days of operation.

It’s all about ChatGPT Search for Day 8

And away we go! OpenAI is kicking off day 8 of its 12 Days of announcements. Kevin, OpenAI’s product lead, is kicking things off and quickly shared that the focus for today is ChatGPT Search.

First, it’s arriving for everyone, globally, on every platform where ChatGPT is available, starting today. OpenAI also says it has broadly improved ChatGPT Search and is rolling out the ability to search while you’re talking with ChatGPT in Advanced Voice Mode.

We asked ChatGPT what announcement we’ll get today

We asked ChatGPT what it thought that OpenAI would be releasing today because, well, if anybody should know it should be ChatGPT, right? It came back with:

“Given that image generation updates have been notably absent so far, many speculate that a DALL-E update could be coming today. The announcement is scheduled for 10 a.m. PT, so keep an eye out for news regarding potential advancements in OpenAI’s creative tools and accessibility features.”

To be honest, we think it’s right, but it also sounds a lot like ChatGPT has been reading our own blog post on the subject of today’s release (see down below), so, er, thanks for nothing ChatGPT…

Time for an AI podcast generator?

Something we haven’t seen from OpenAI so far is an AI podcast generator. While Google has been having a lot of success with NotebookLM, its research tool that will generate a fantastically real podcast between two AI hosts from whatever text, video or PDF sources you feed it, we haven’t seen anything from OpenAI on this front so far.

Google is rolling out a new feature to NotebookLM that lets you join in the conversation with the AI hosts too.

NotebookLM has been so popular that we’re starting to wonder if this week we’ll see OpenAI step into the AI podcast game with one of its ’12 Days of OpenAI’ releases? Time will tell.

Let’s talk about AI images

Could we see a major update to DALL-E in today’s announcement? I highly doubt it, but you never know.

Today, TechRadar’s Senior AI Writer, John-Anthony Disotto, has been testing Grok, a competitor to DALL-E from xAI, Elon Musk’s AI company. Grok 2 is now free to all users on X (formerly Twitter), and it’s capable of some crazy unrestricted image generation results.

In his piece, titled “I used Grok’s new free tier on X but I can’t show you the results because it could infringe Nintendo’s copyright”, he talks about how OpenAI’s AI image generator won’t create images of copyrighted characters or public figures, while Grok will do whatever you ask it to. Despite the limitations, DALL-E 3 as part of ChatGPT remains one of our picks for best AI image generator, but could it get a whole lot better in today’s announcement? Time will tell.

My favorite announcement from last week?

Today is a good day to reflect on the goodies that OpenAI announced last week, and while Sora was the highlight, without a doubt, it was another of its announcements that I found the most useful…

I’m talking about the Canvas feature. As I wrote at the time, it has completely changed the way I use ChatGPT. The new writing tools are really useful, and I love the way you can keep refining the same piece of text over and over, without having to keep generating reams of text each time you want to change just one element of it.

If you haven’t had a play with Canvas yet I’d recommend you give it a go. It’s free!

Welcome to day eight of ’12 Days of OpenAI’! We’ve had the weekend to think about all the good stuff OpenAI released last week (Sora, ChatGPT Canvas and Projects, plus ChatGPT o1) and that’s got us wondering what we can expect from the AI giant this week?

There’s still been nothing announced on the AI image generation front, so could we see a new DALL-E release today?

We’re here from today until Friday this week (the 20th) when we’ll get our final day of OpenAI releases. Today’s announcement will kick off at 10am PT, so don’t miss it.
