
Tell us everything

As I mentioned, this event was chock-a-block with announcements. There were so many that it can be hard to tell what mattered, unless you read Global Editor in Chief Marc McLaren’s round-up of the 13 biggest reveals.

Android XR Glasses hands-on coming in…

Intrepid tech reporters Jake Krol and Phil Berne are currently queued up in an hours-long line to touch and try out the remarkable new Google Android XR Glasses. They look like quite an upgrade from Google’s last glasses attempt.

Keep an eye on TechRadar for updates on their quest.

Can search survive AI?

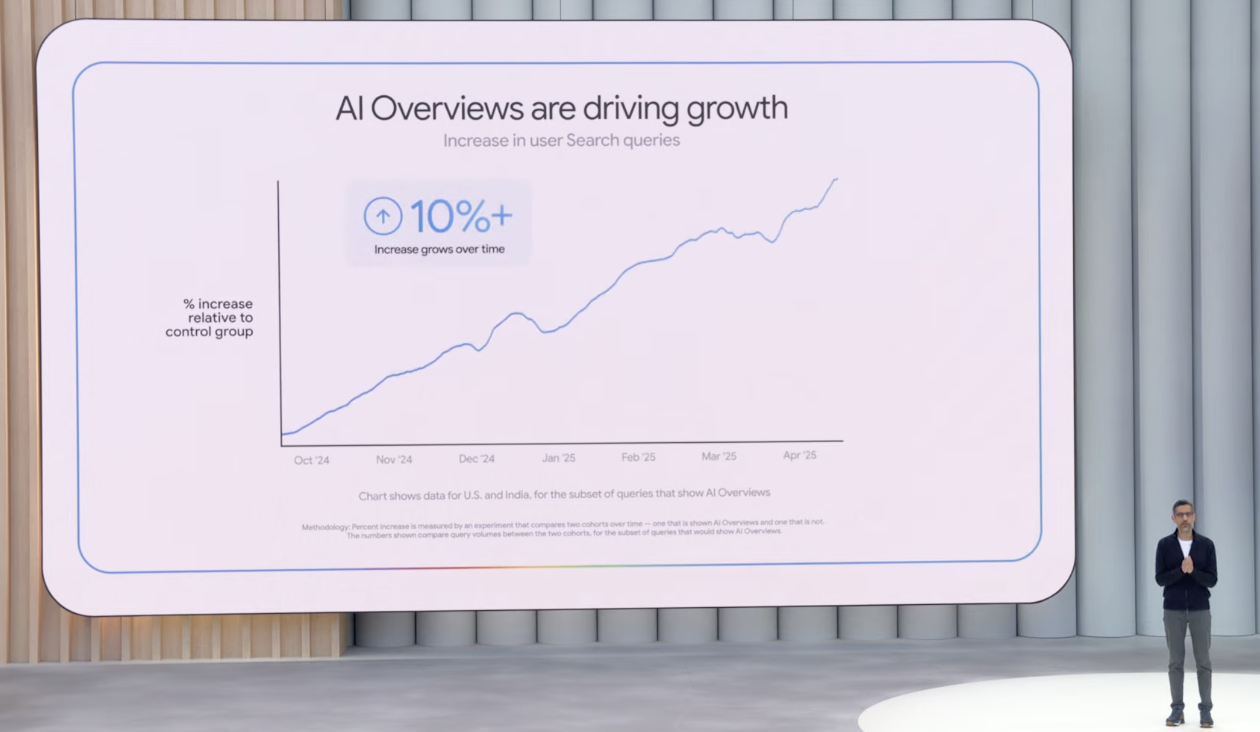

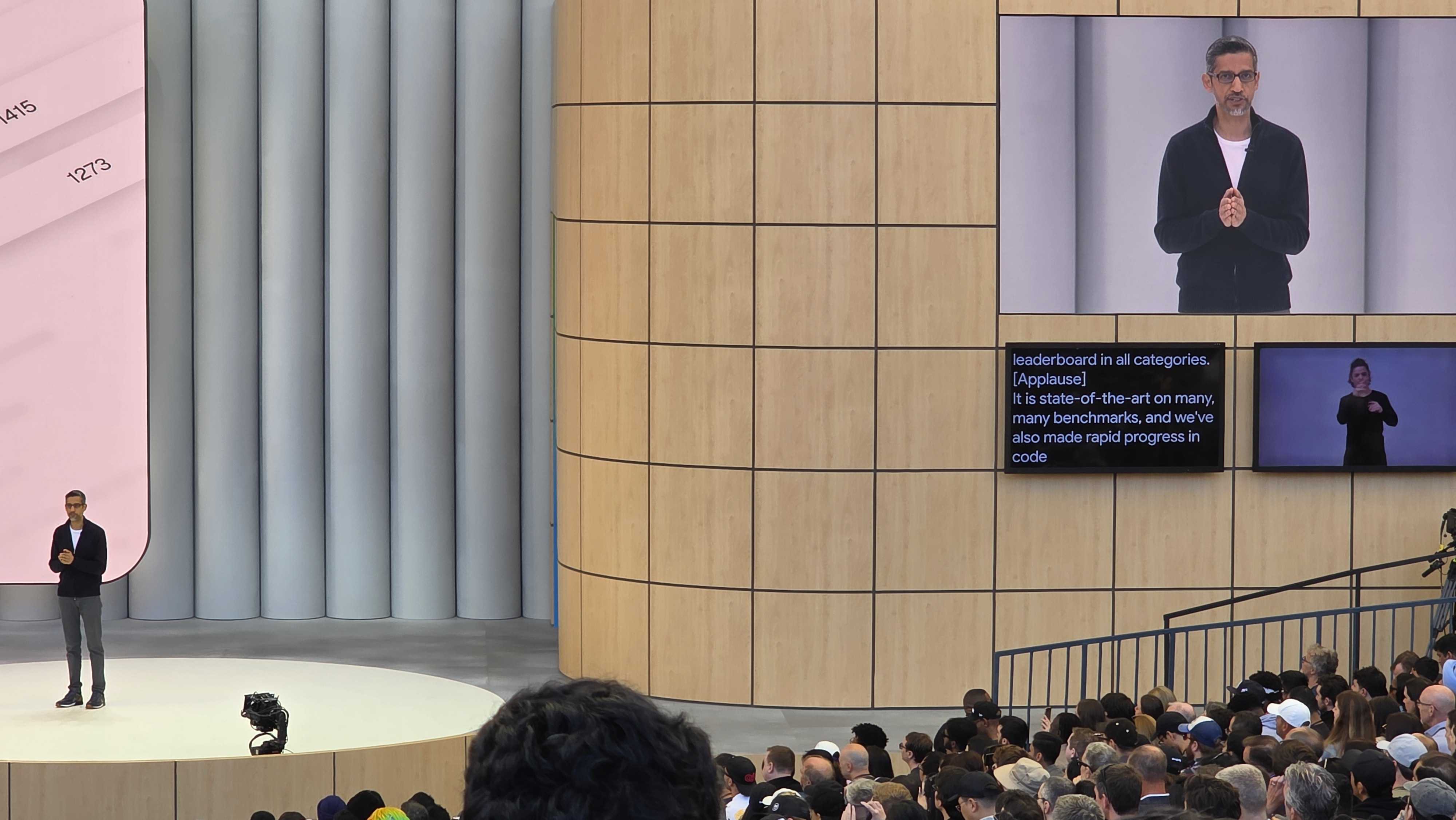

Google CEO Sundar Pichai sees AI as a net positive for Search, adding that this is not a “zero-sum moment” for Search and AI. More in my story below.

Be there without being there

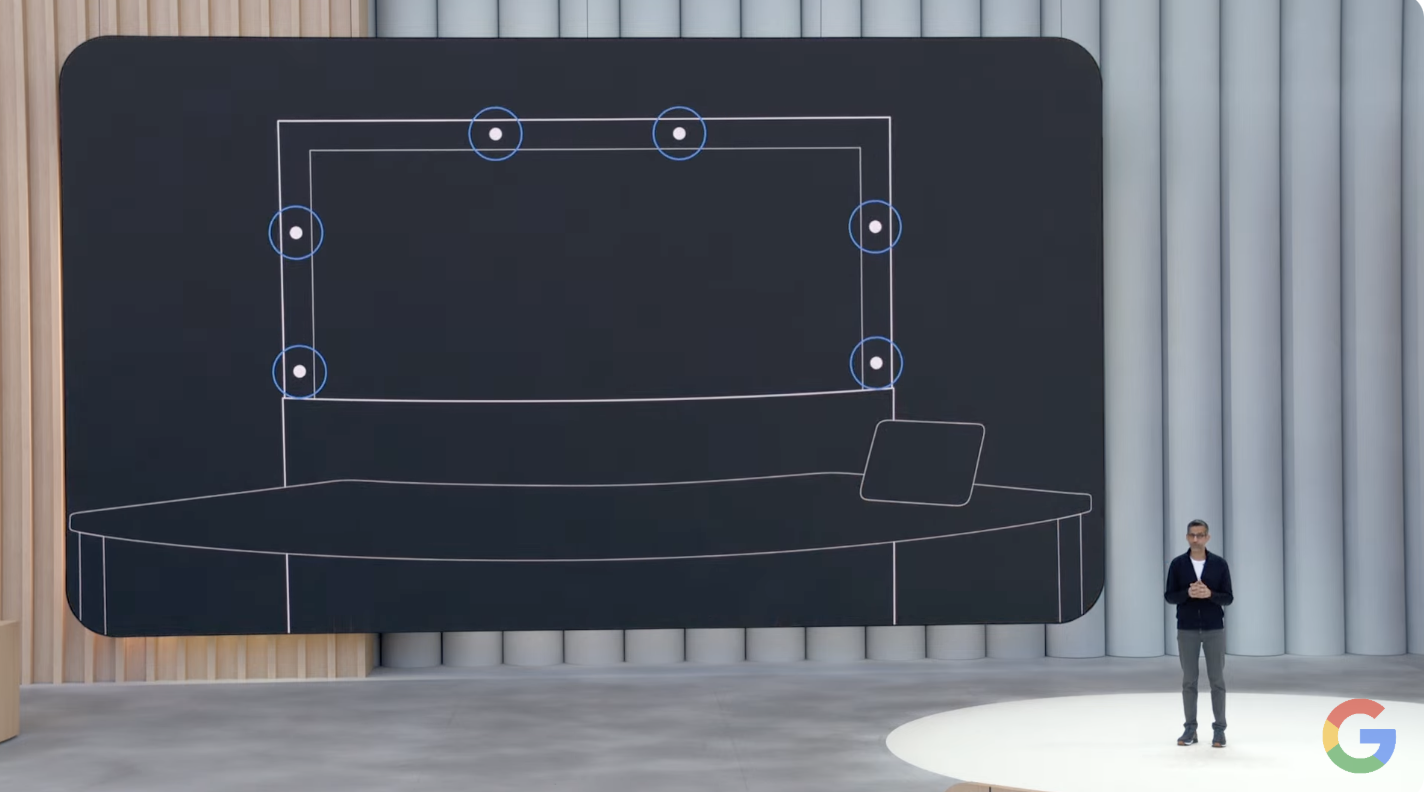

Project Starline’s upgrade to Google Beam looked incredible, and it’s even more exciting because Google already has a partner, HP, lined up.

Our story is here.

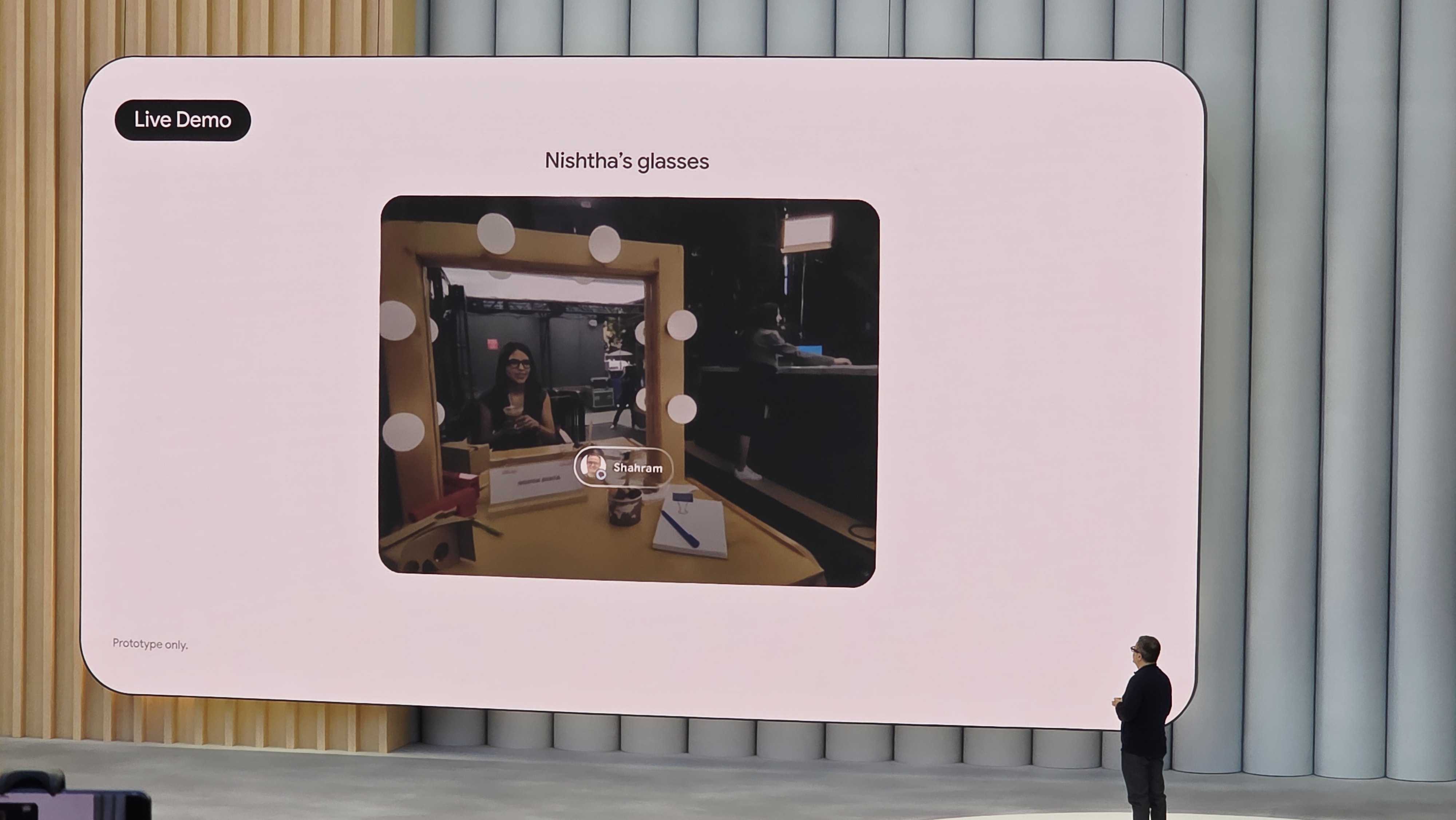

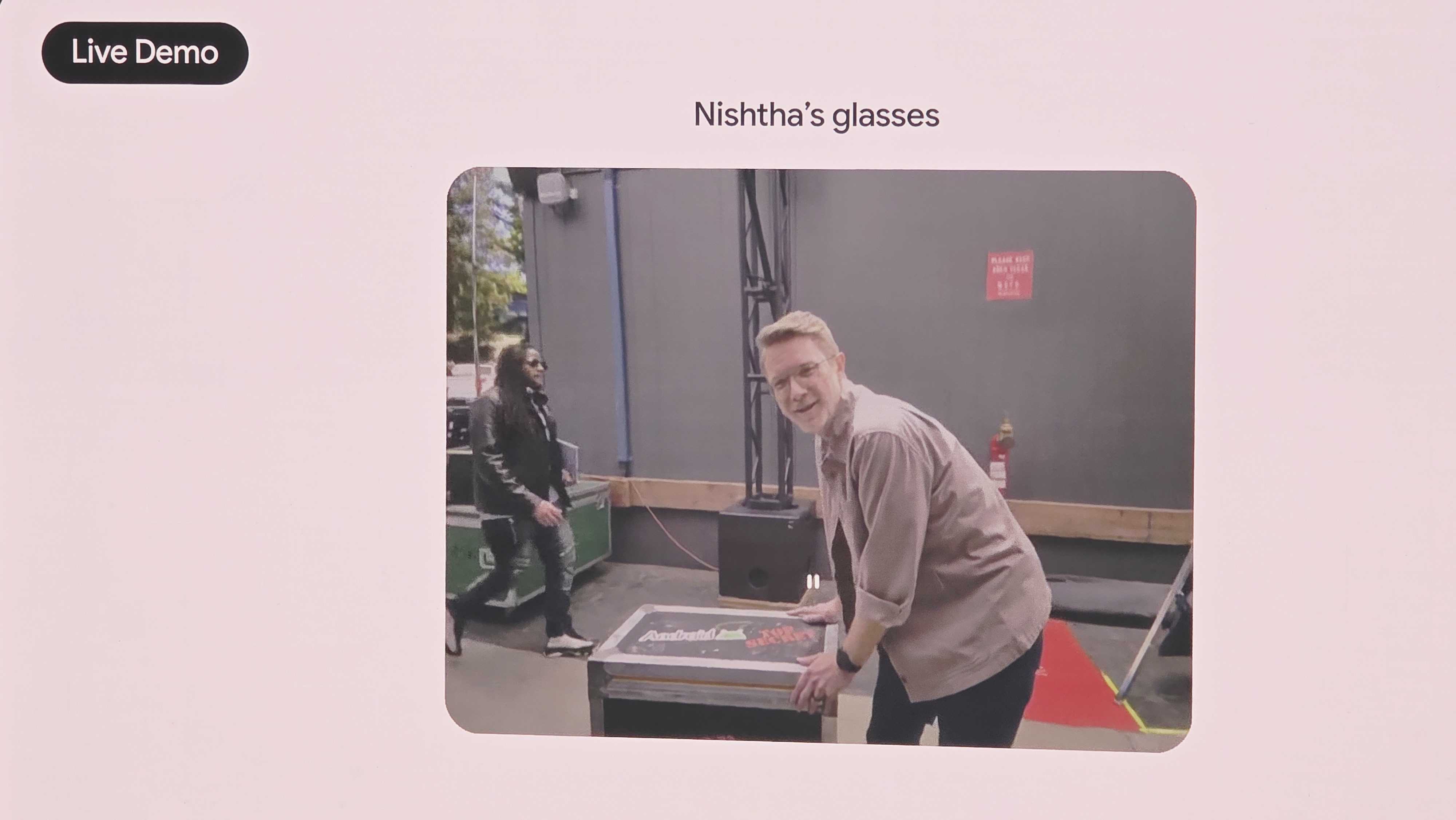

Android XR Glasses had their moment

We finally saw a live Google Android XR Glasses demo, and it was a doozy, especially because so many on stage were wearing them. Here’s a deep dive on what we learned.

Google finally gave us a closer look at Android XR – here are 4 new things we’ve learned

How big is outputting AI audio and video at the same time?

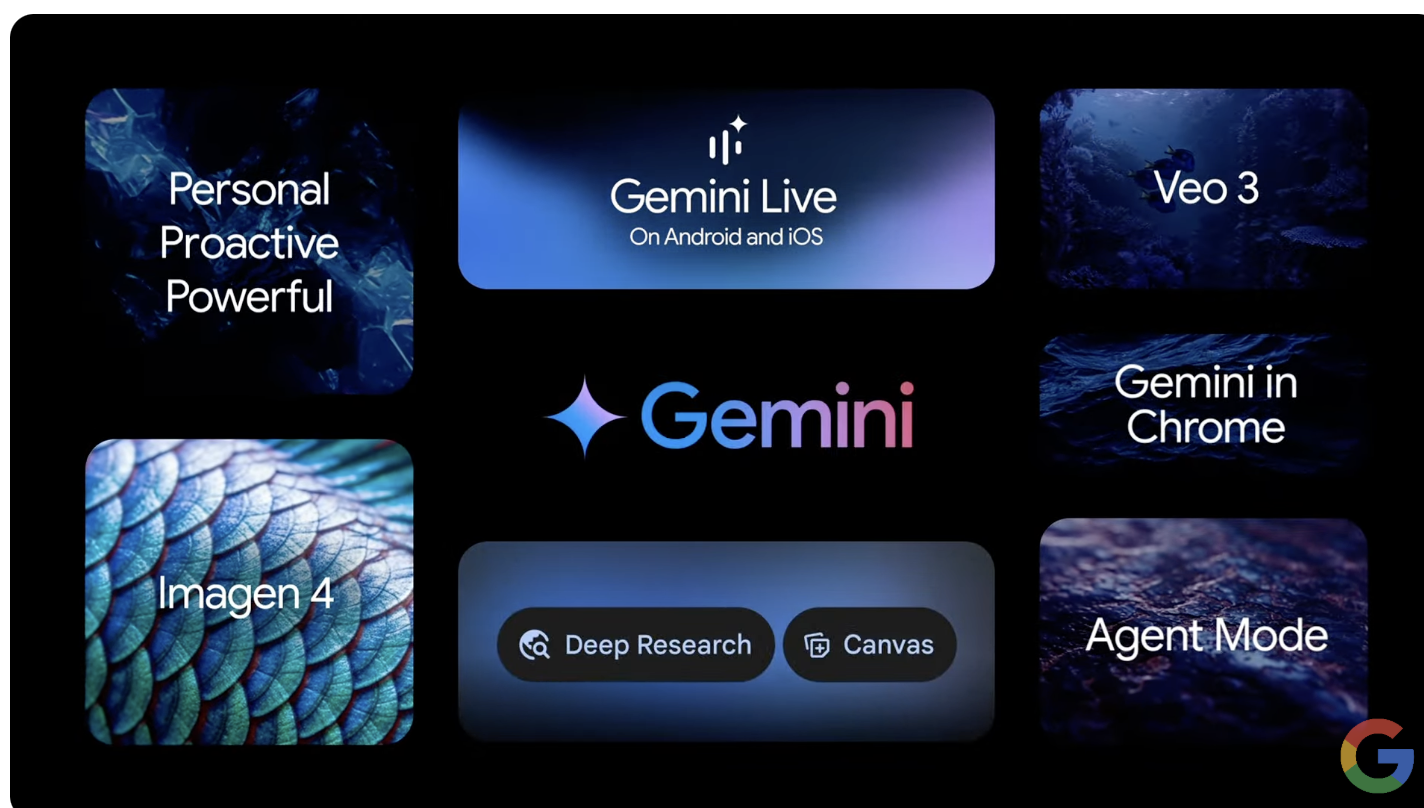

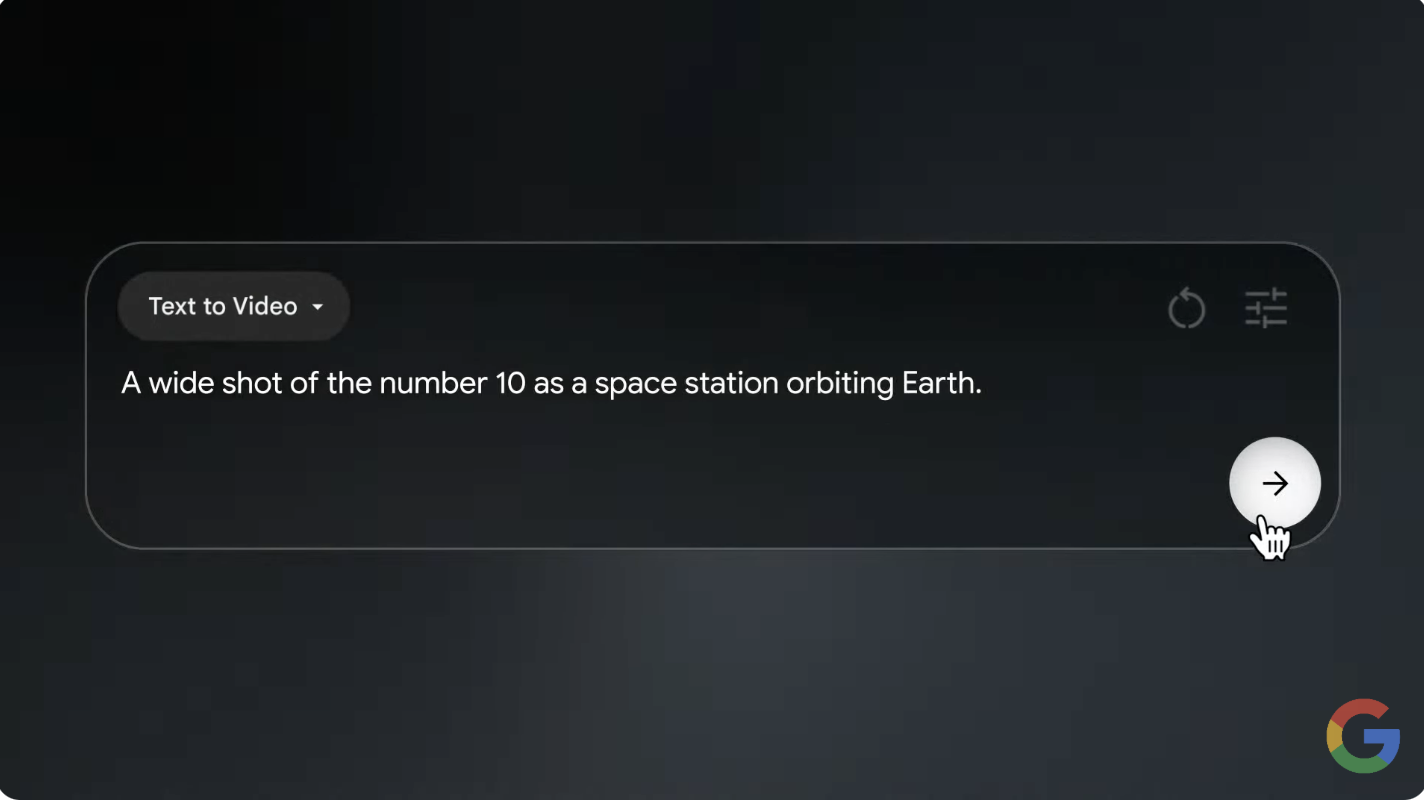

One of my favorite announcements is the Veo 3 model update. This is the first time you can describe a scene with dialogue and sound effects and get AI-generated video output with audio. Wow.

My story is here.

We’ll do it live!

Gemini Live, which lets the app see what you see and what’s on your screen, is free to all iOS and Android users (assuming their phones support it).

Here’s our deep dive.

And that’s all they wrote…but what did they write?

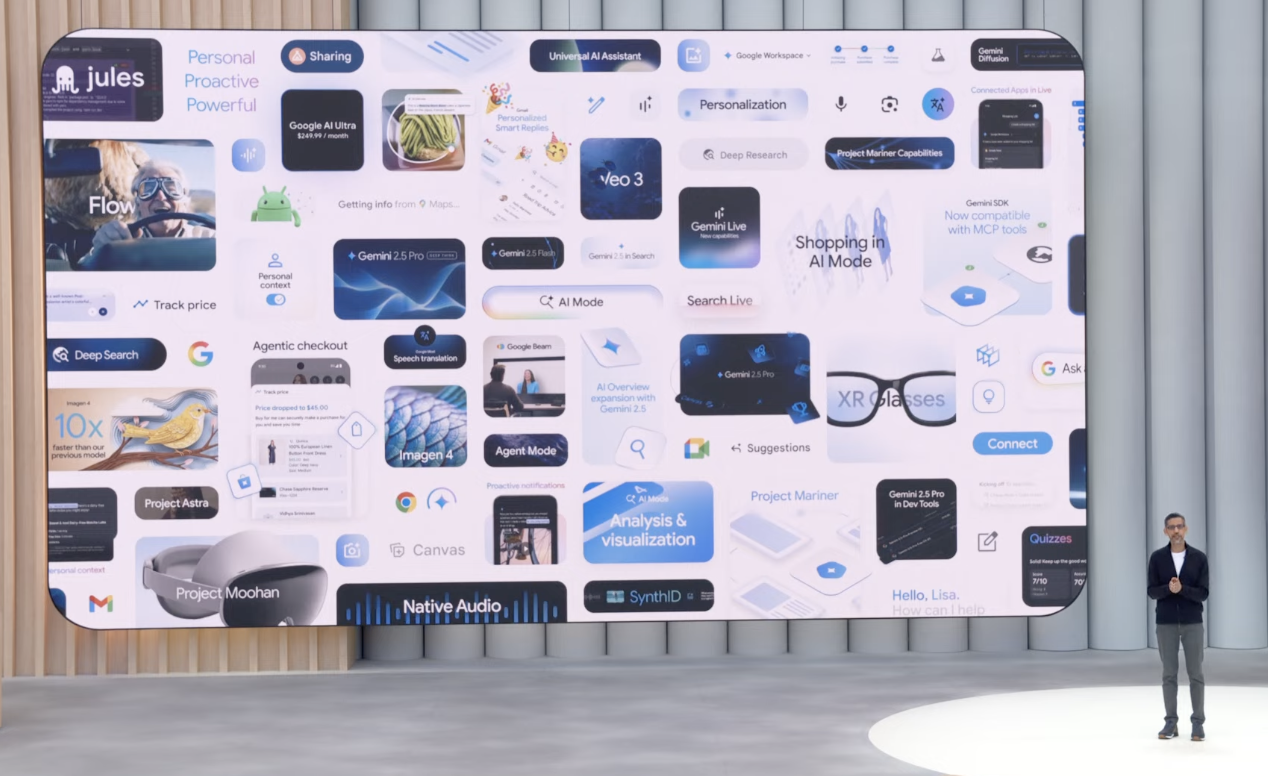

The Google I/O 2025 keynote just wrapped up, and Google unveiled so, so much.

Fortunately, TechRadar has covered all the key bits. Below are some of the highlights and links to our in-depth coverage.

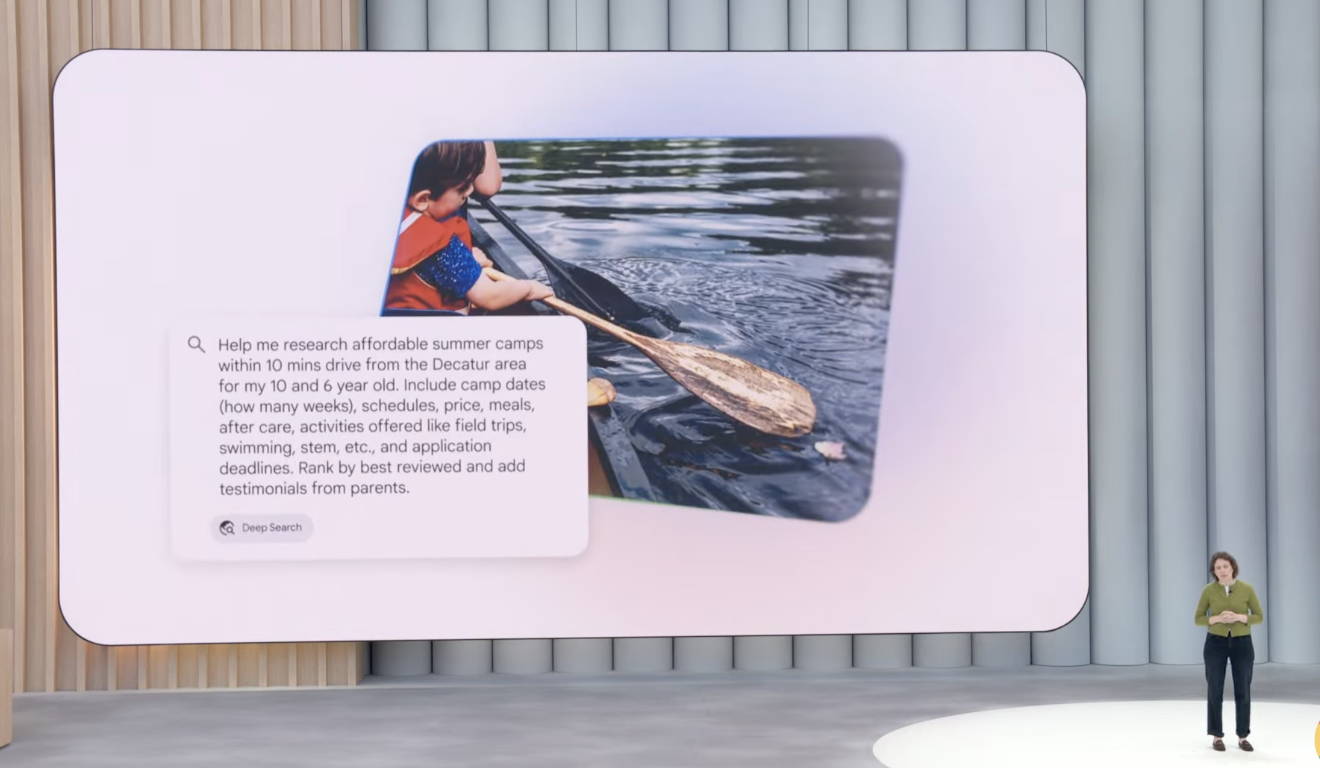

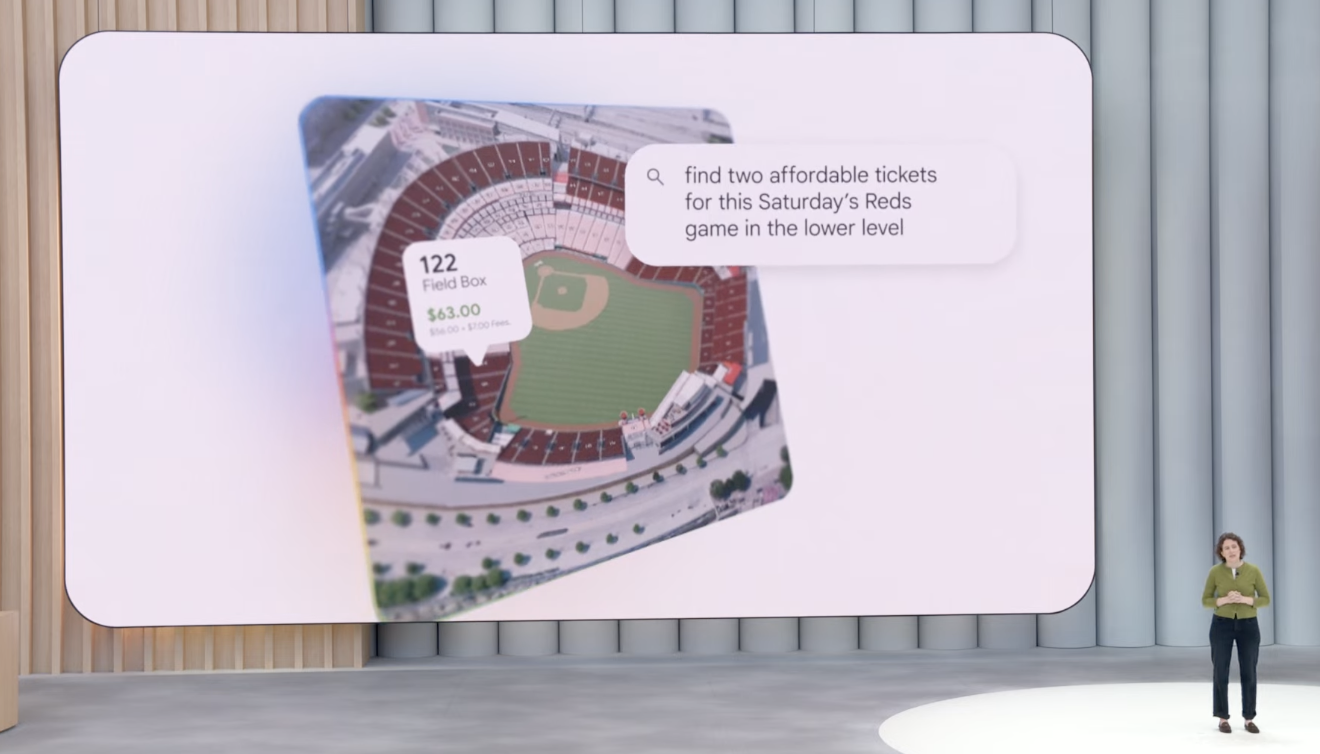

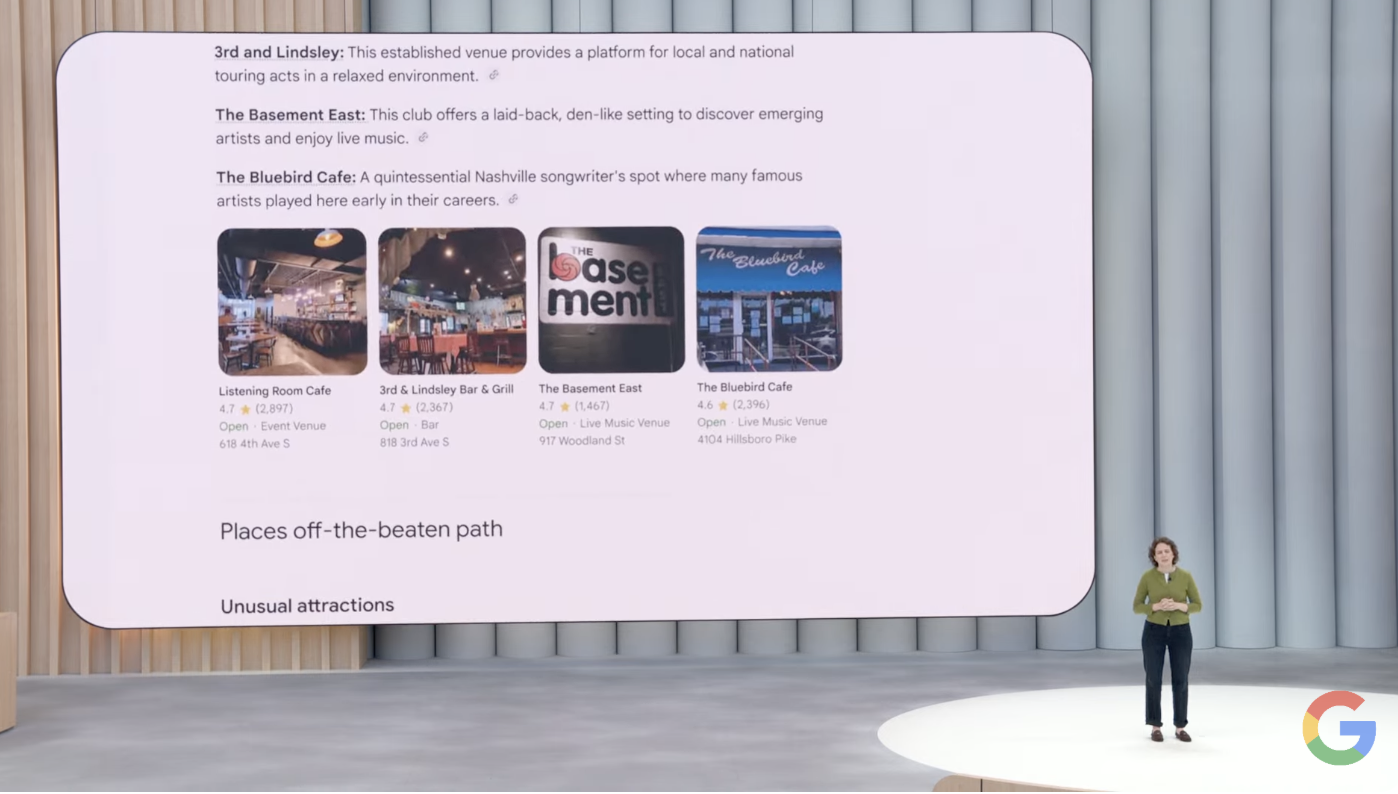

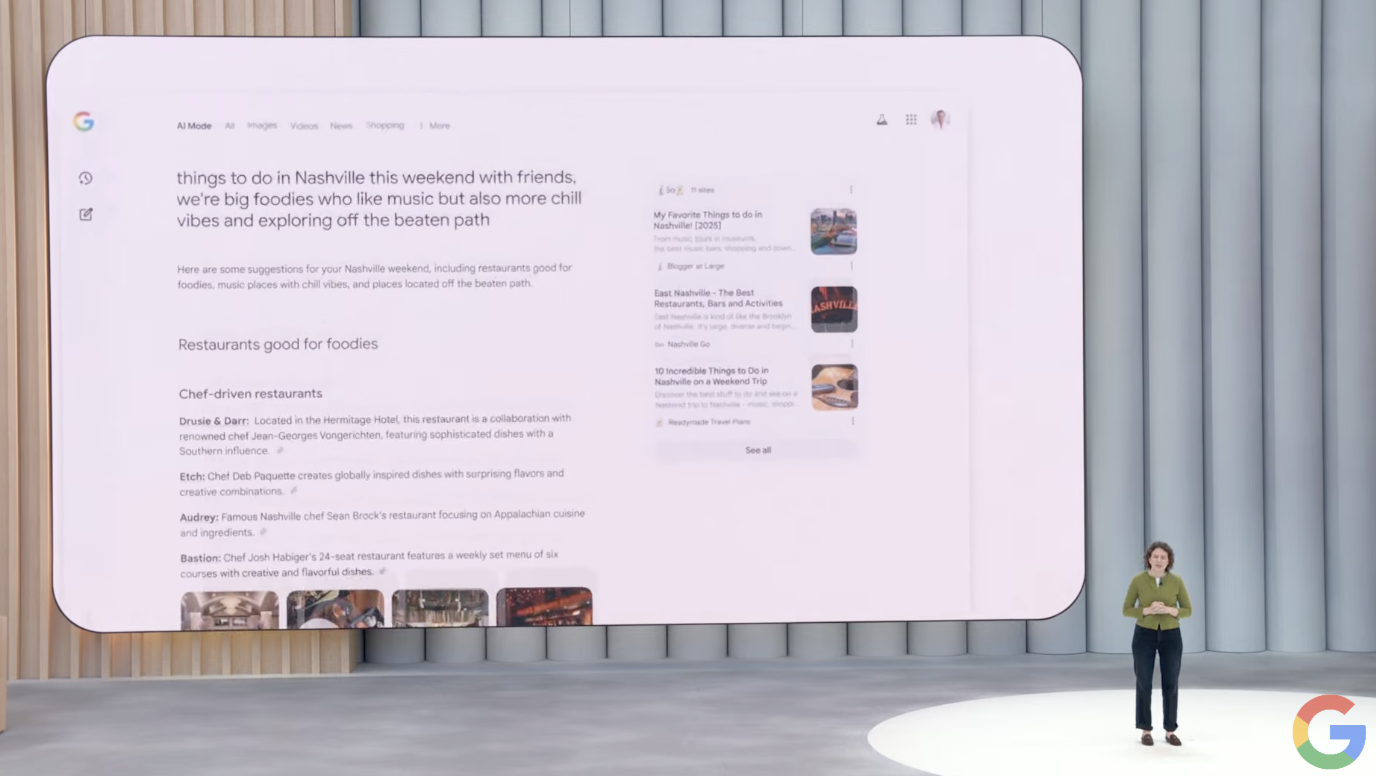

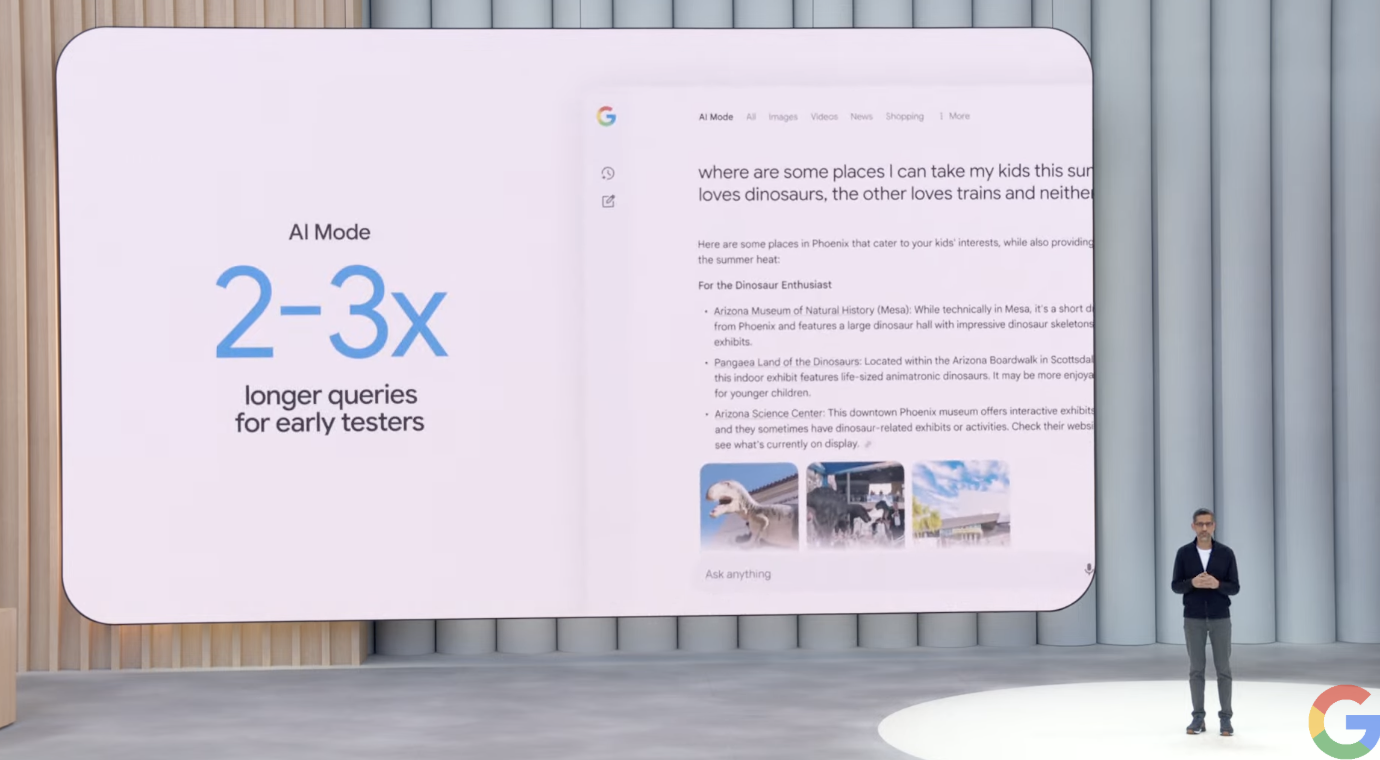

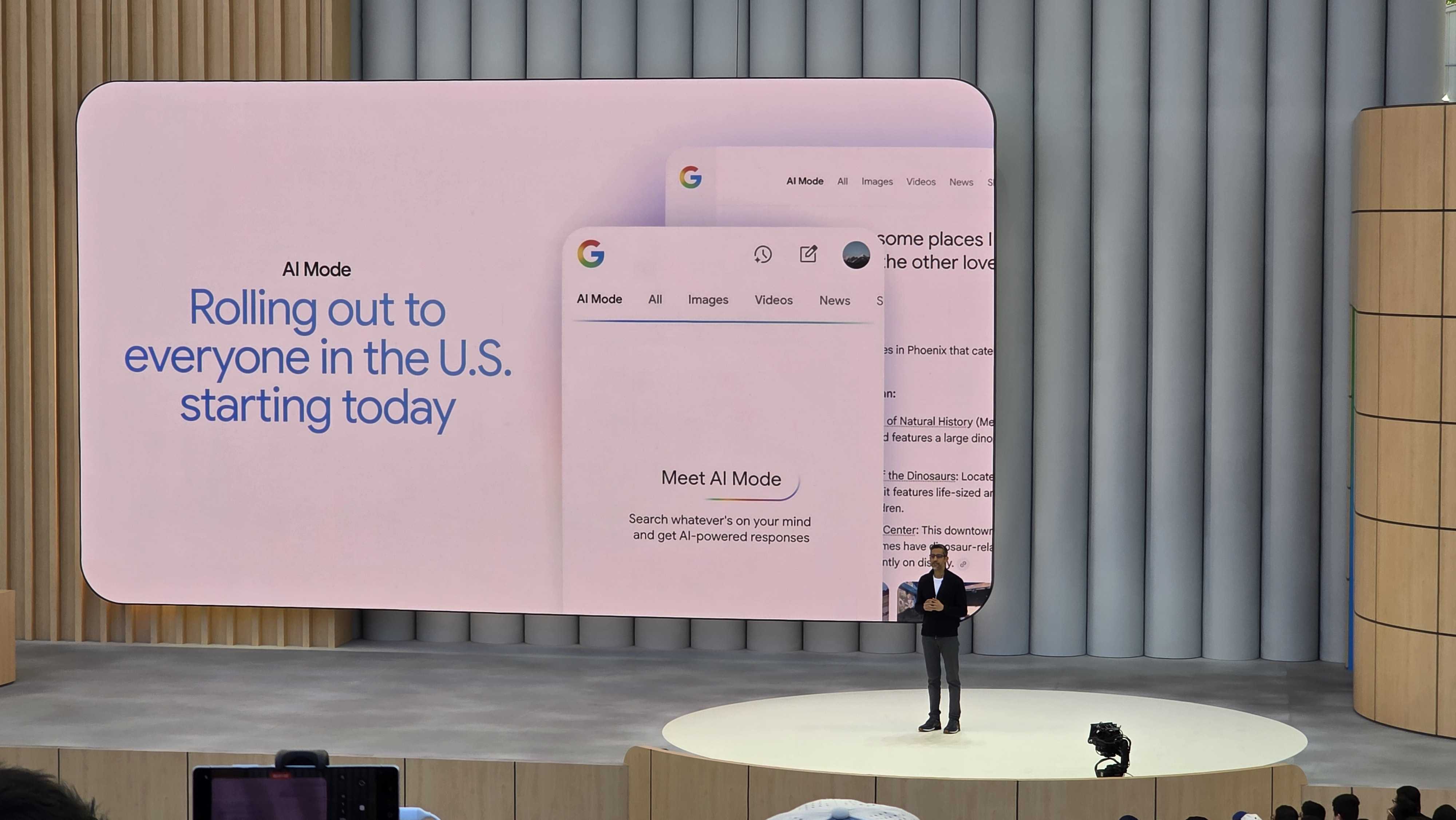

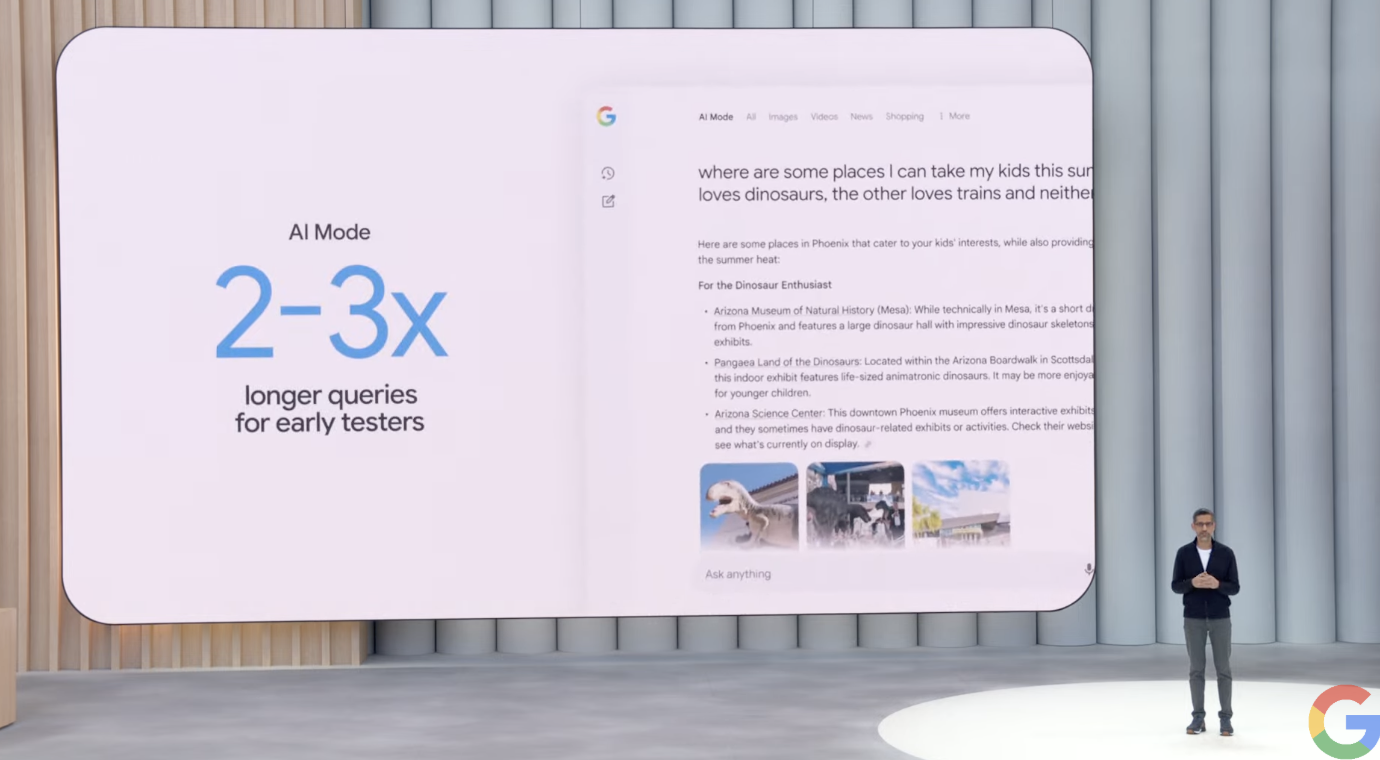

Let’s start with Search. It got a huge upgrade with better AI Overviews and, now, AI Mode for everyone in the US. Search Live also looks like a game-changer.

Everything Google announced during Google I/O 2025 in one image

You’re welcome

Big partnerships for Android XR Glasses

Gentle Monster and Warby Parker are both building Android XR Glasses. And Samsung is working on them, too.

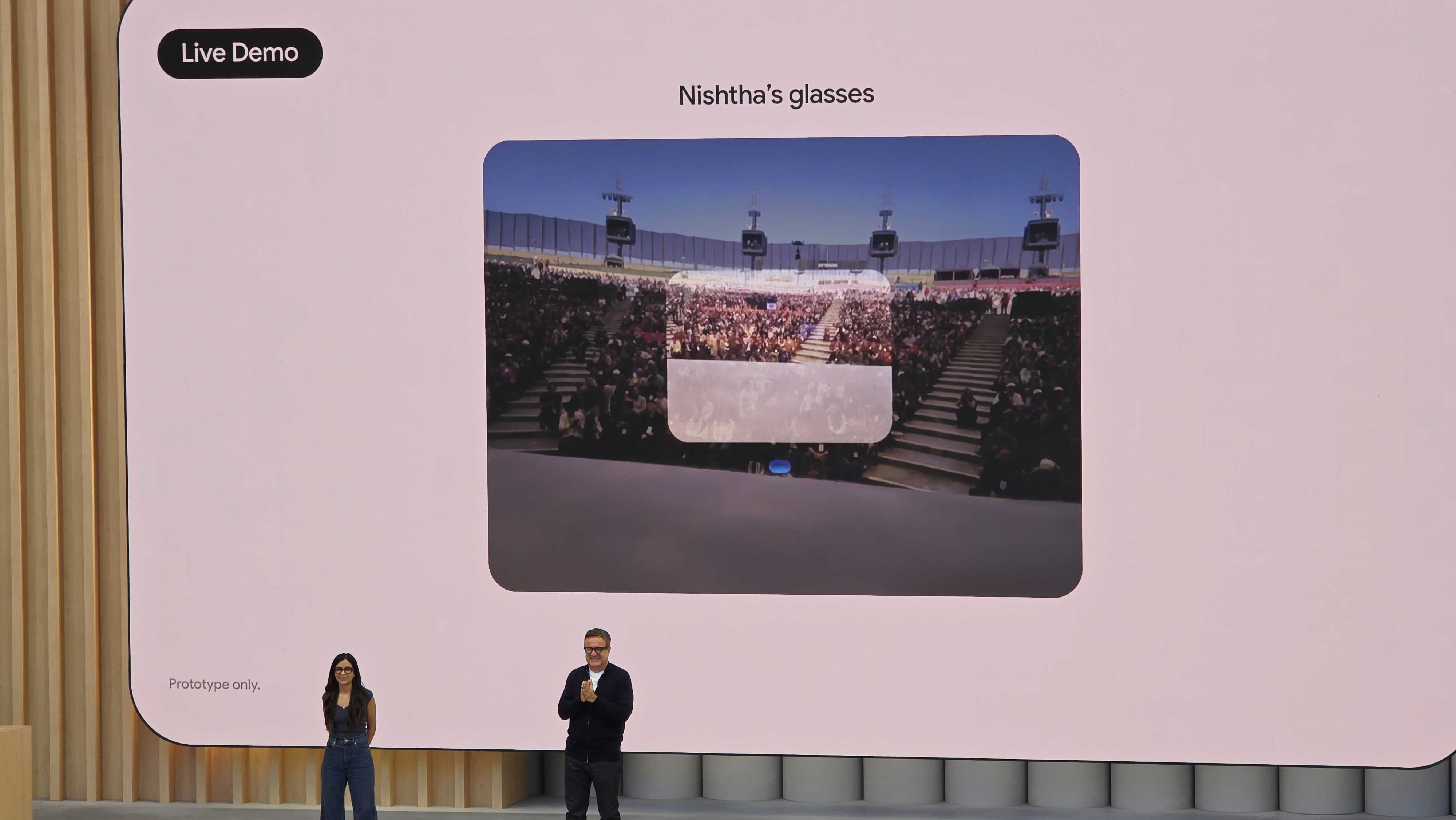

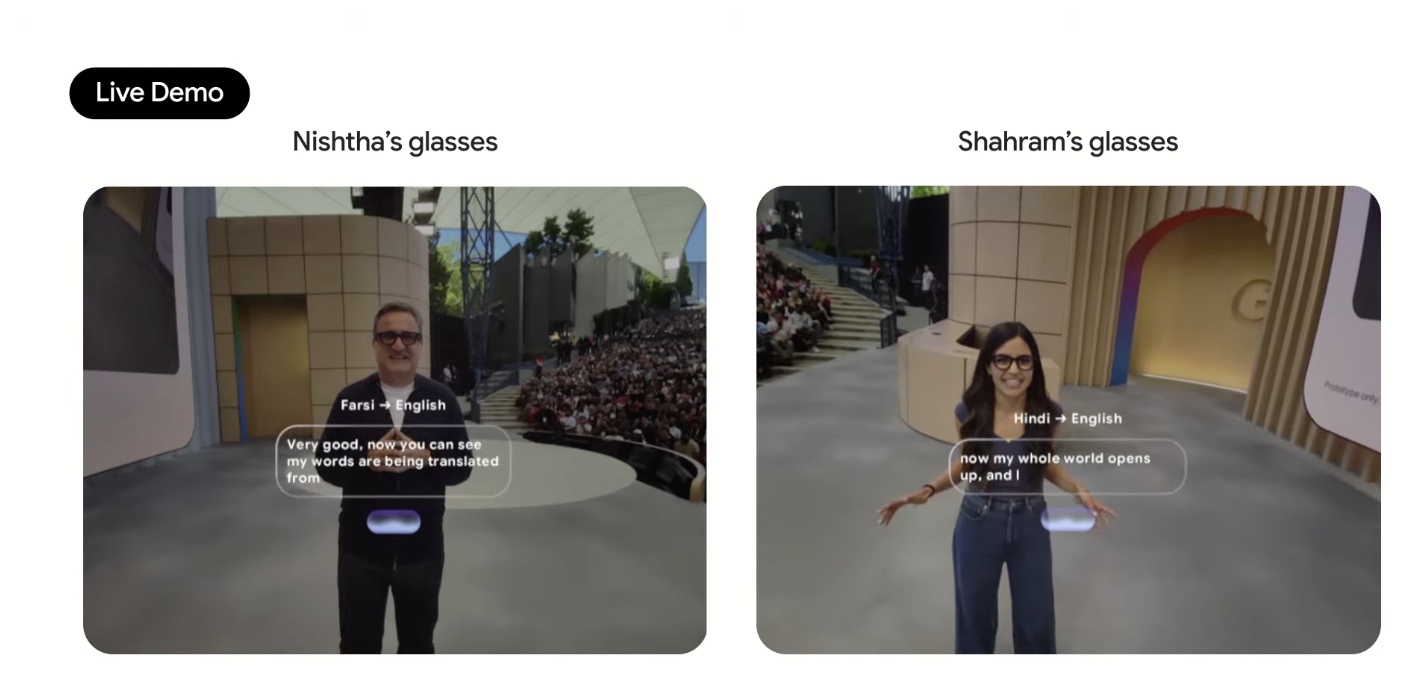

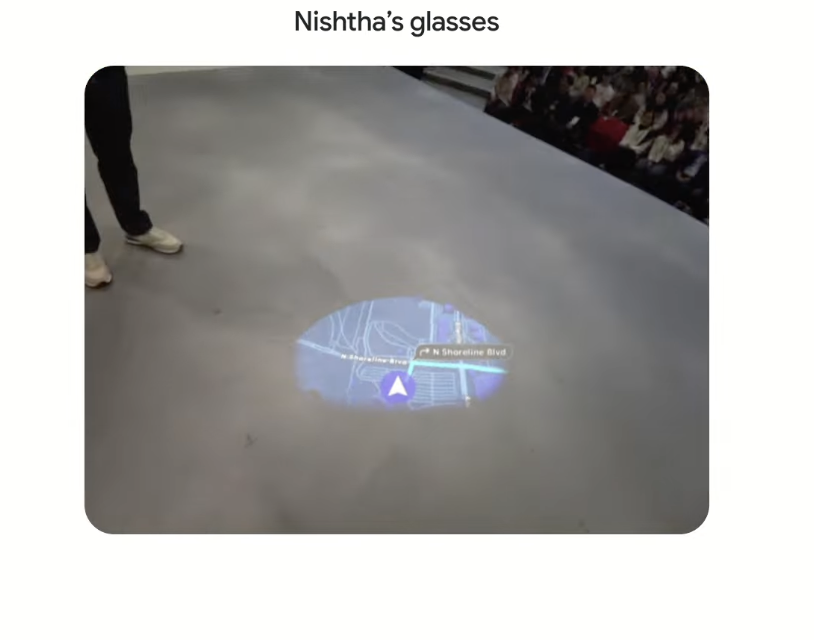

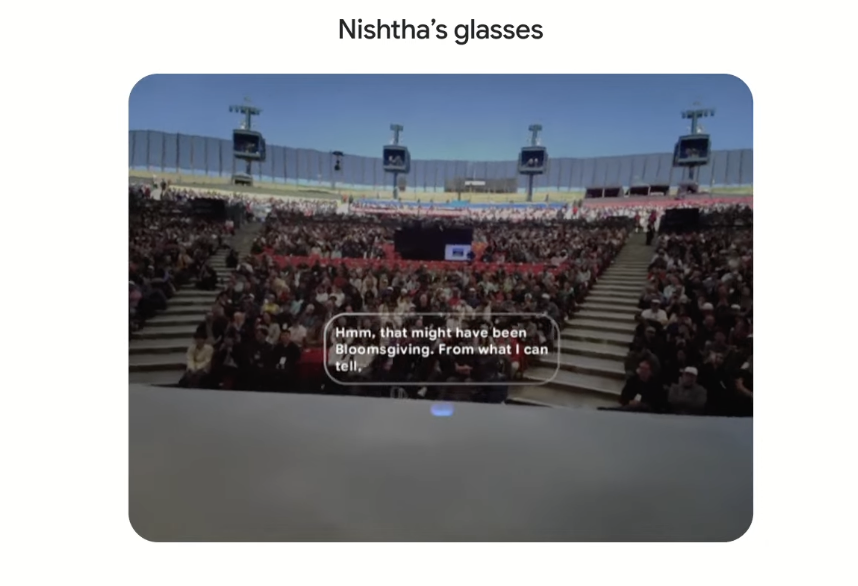

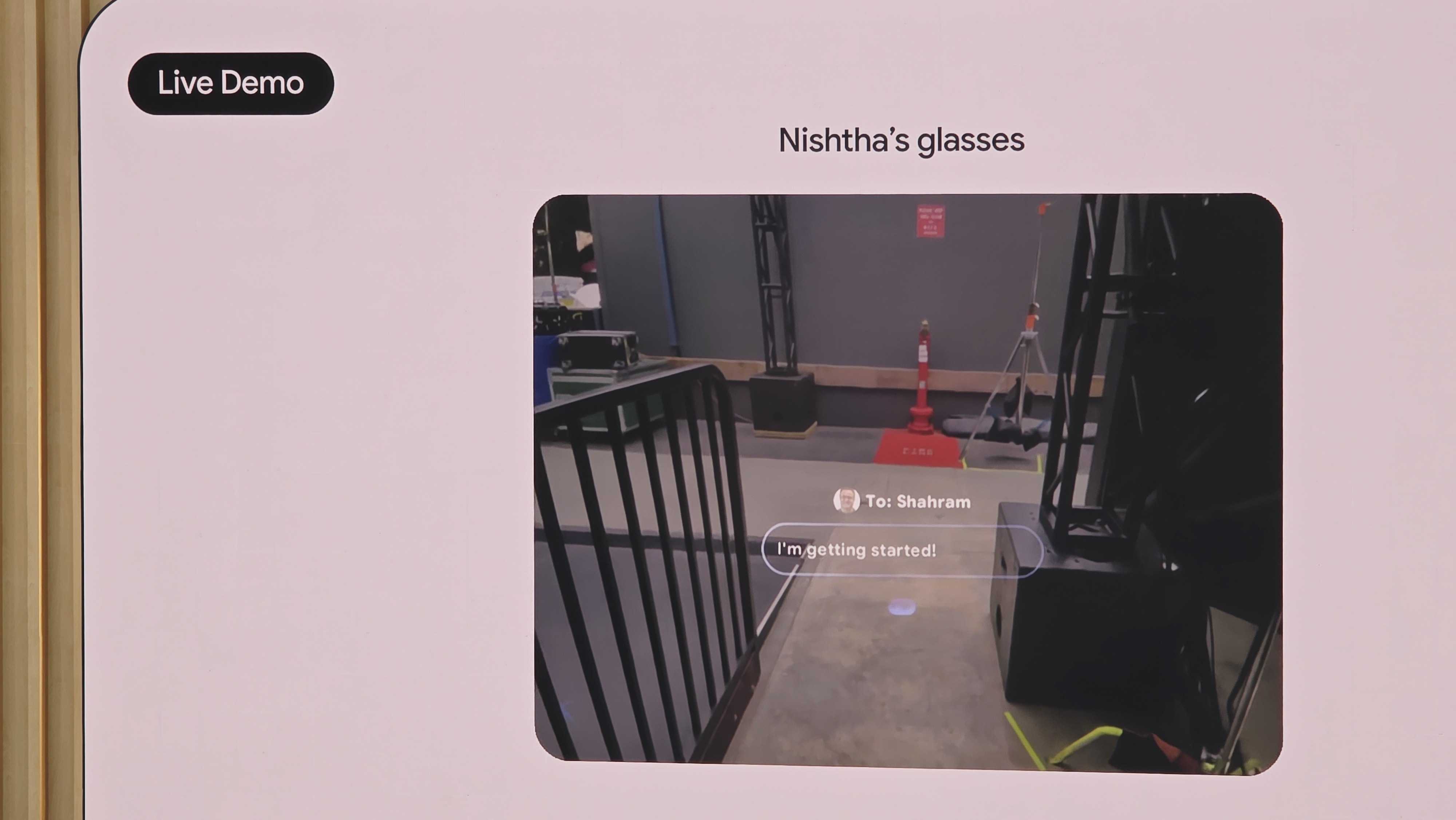

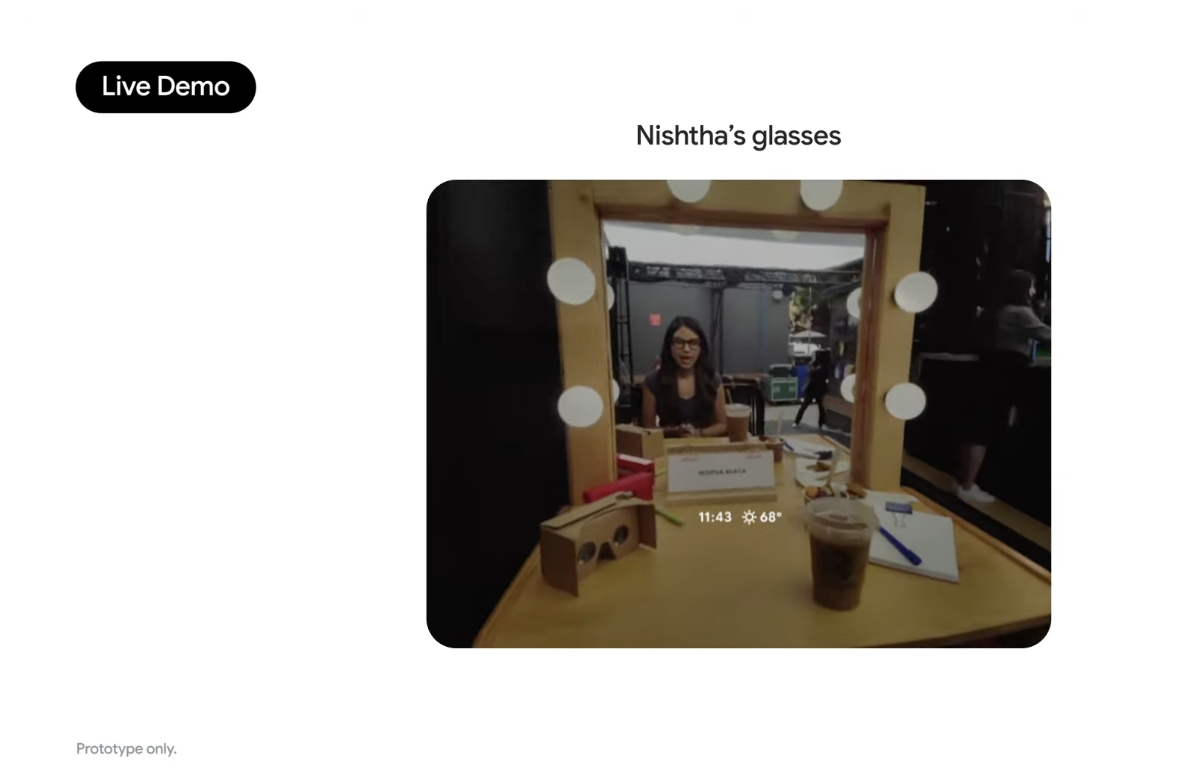

We saw a real-time translation demo with Android XR Glasses

And it mostly worked!

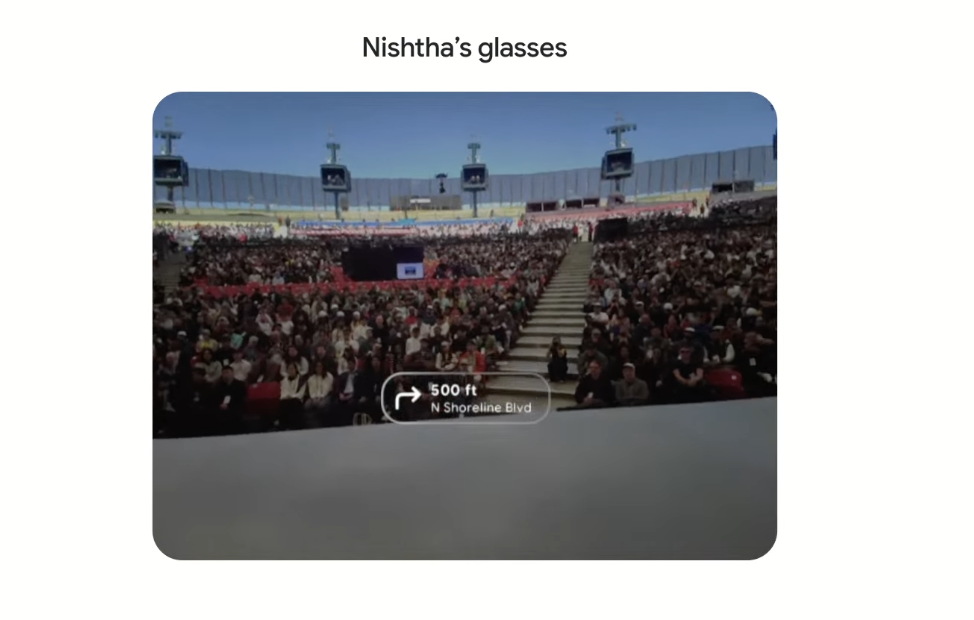

Here are the new Android XR Glasses

We’re finally getting a good look at the glasses and how they work in real time. They even had a pro basketball player, Giannis Antetokounmpo, wearing them.

It’s finally Android XR time

Android XR will feature Gemini. They’re still calling the Google/Samsung collaboration “Project Moohan.” It’s still “coming later this year.”

They quickly moved on to Glasses and a live demo.

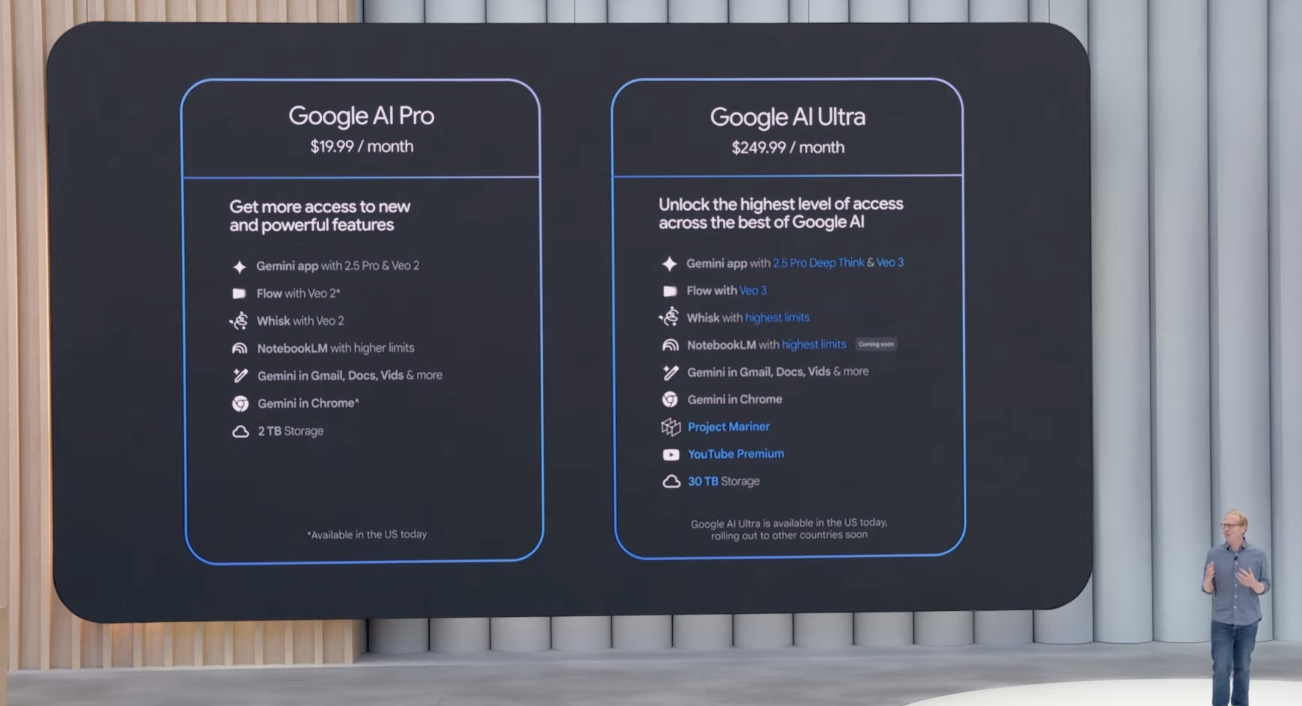

Google has new AI plans

Google’s new plans are Google AI Pro and Google AI Ultra.

Pro is $19.99 a month, and the aptly named Ultra is $249.99 a month.

The second plan is for the most serious AI creator, obviously.

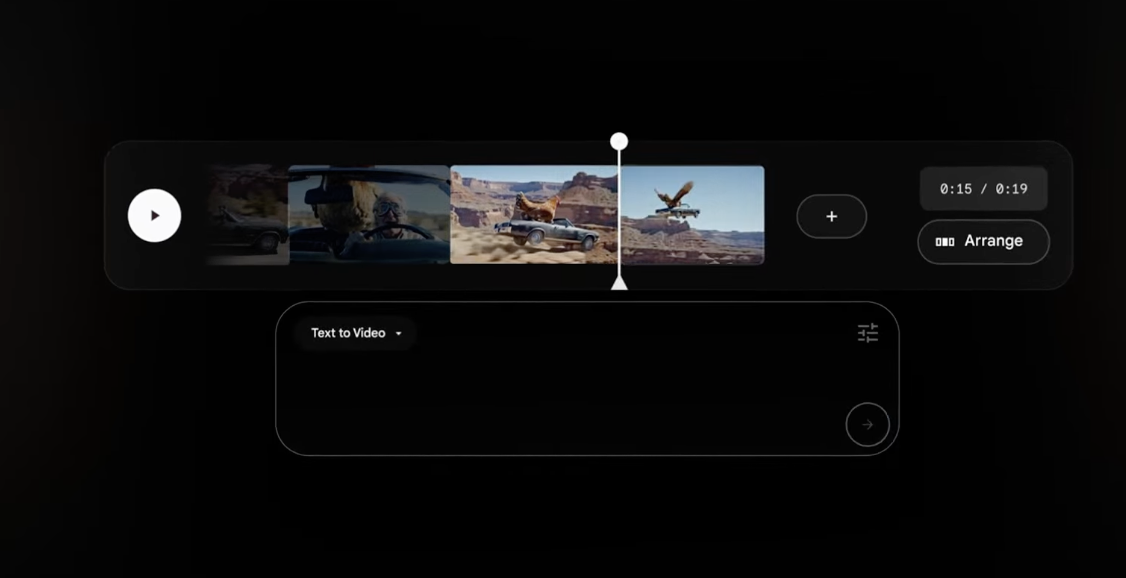

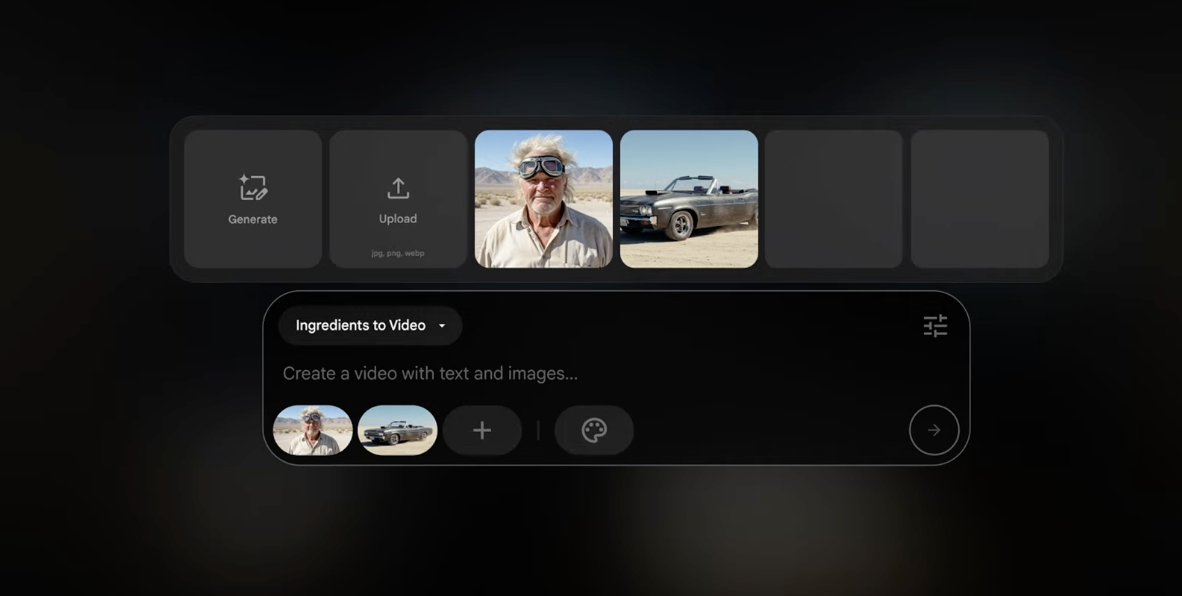

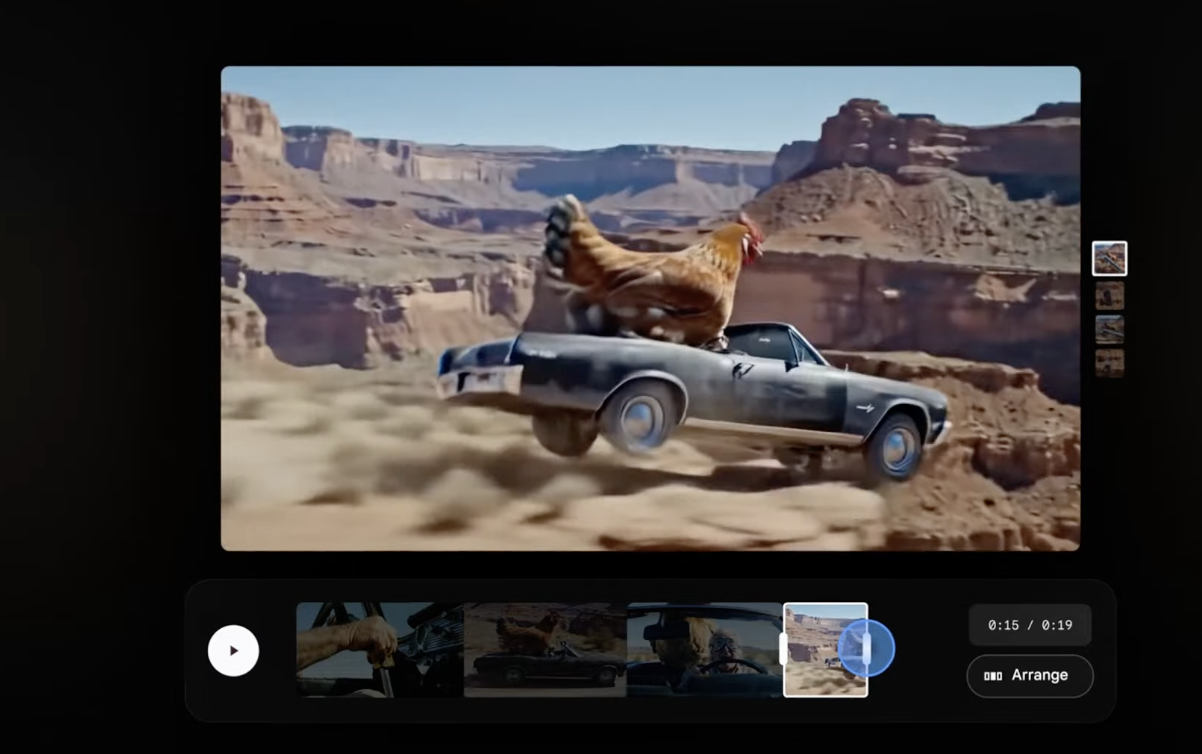

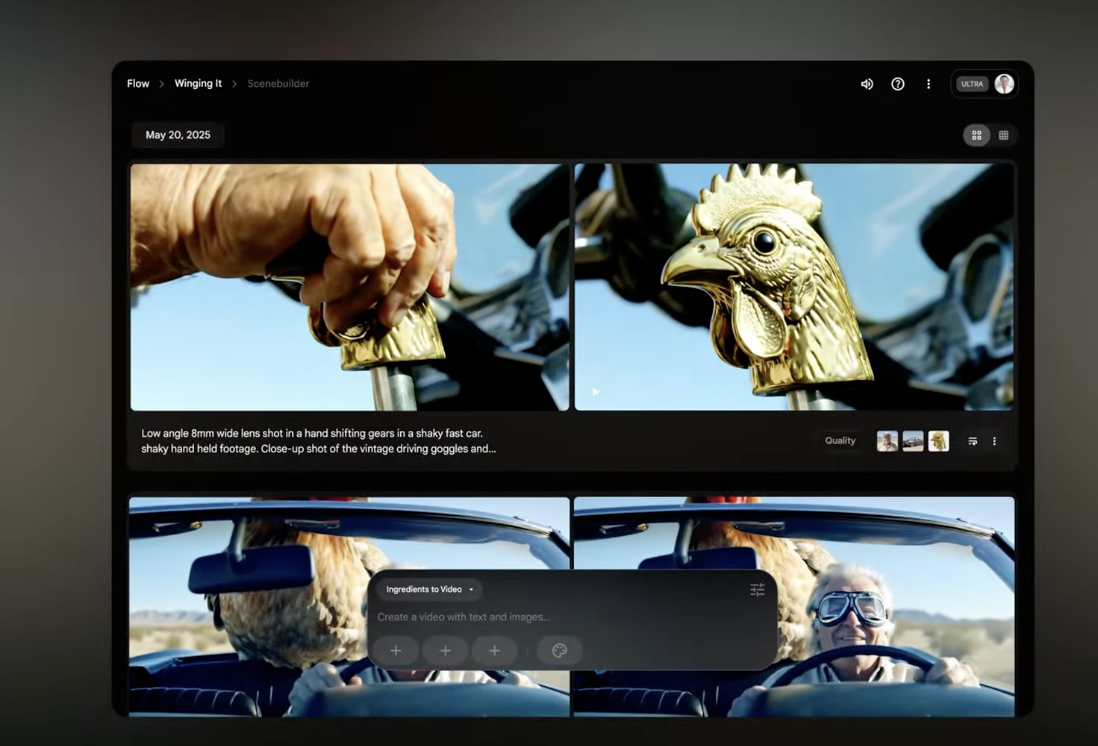

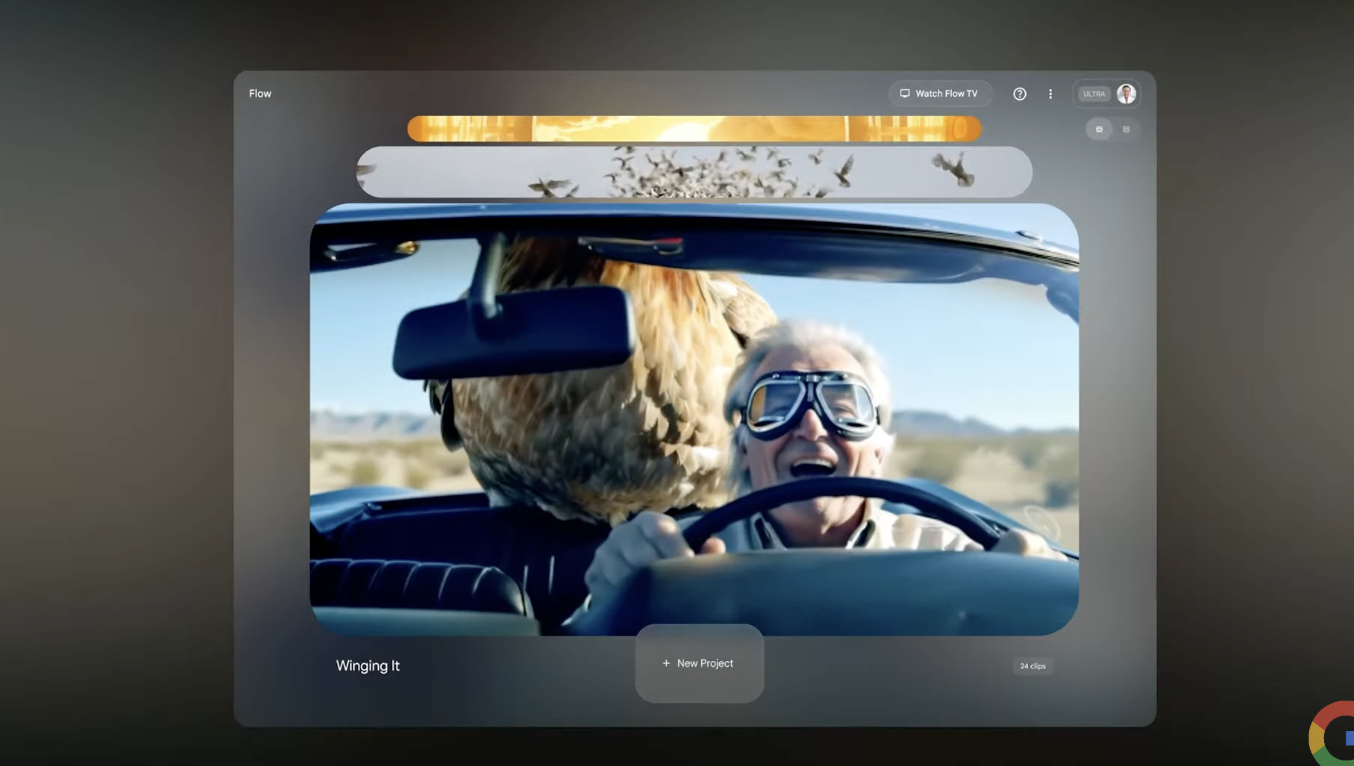

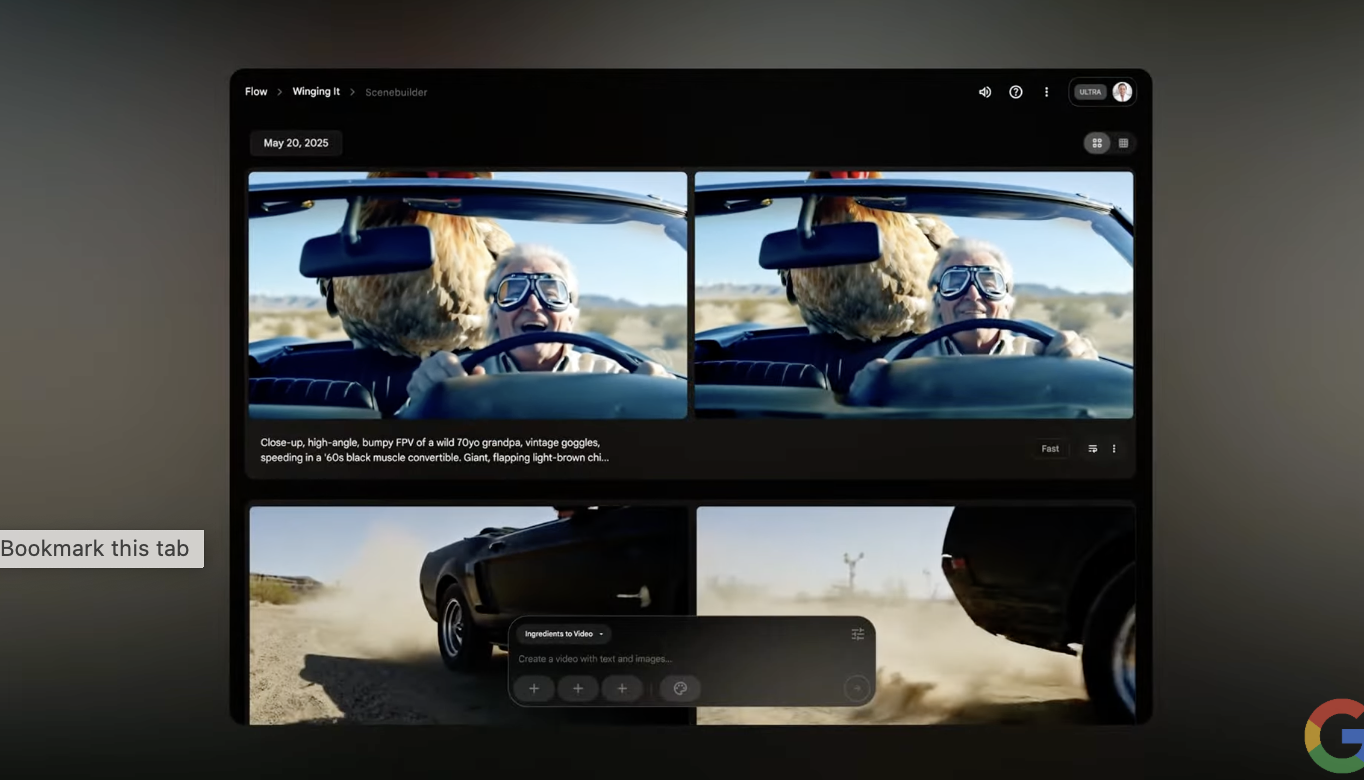

Google unveils Flow

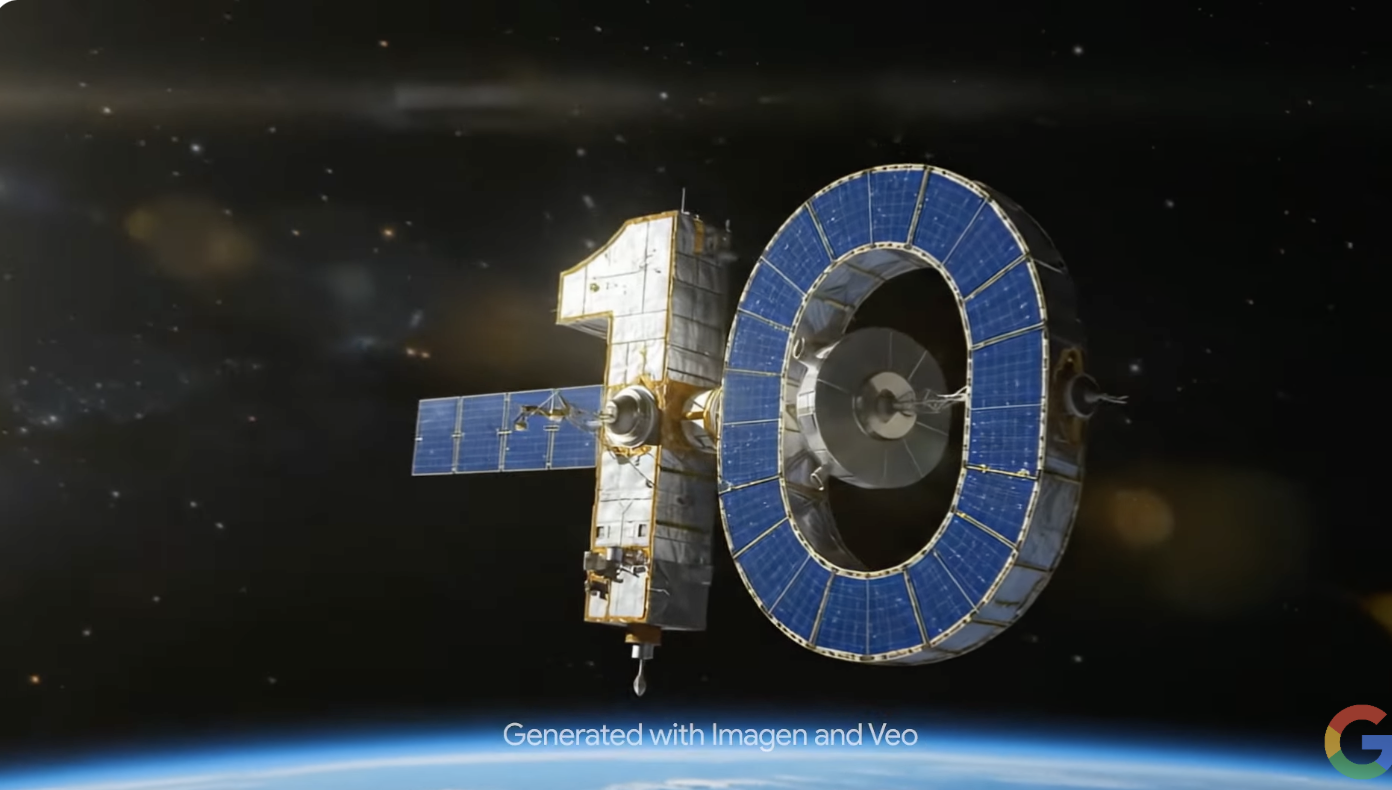

Flow is a more comprehensive video creation tool that combines, among other things, Imagen 4 and Veo 3. Google touts its character and scene consistency. You can even extend scenes, and you can add music from the new Google Lyria.

Google presented a video featuring Darren Aronofsky and young filmmakers using a combination of real-life performances and Veo 3-generated video that they say they could not have captured otherwise.

One of the resulting films is Ancestra, the story of the filmmaker’s birth.

Imagen 4 and Veo 3 coming with huge upgrades

Imagen 4 will do much better on textures and text, which is a big deal for AI-generated content.

Veo 3 is coming with audio and video in a single output.

These are big updates that will change how we produce AI content.

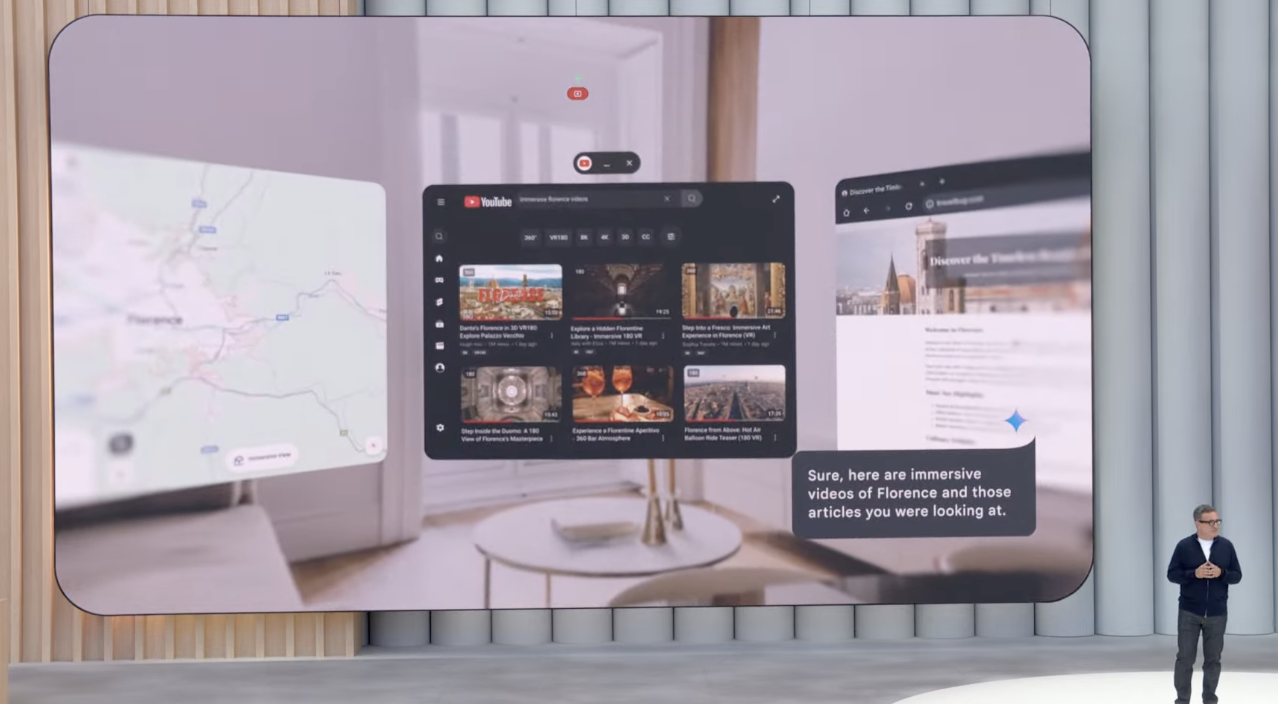

Gemini is coming to Chrome

Gemini will work as you browse the web on Chrome and understand the page you’re viewing. Then you can ask questions. Coming to Gemini subscribers in the US this week.

Camera and screen sharing coming free to Android and iOS Gemini apps

Your Gemini app experience is getting a big upgrade. This ability for Gemini to see what you see in person and on screen is huge.

Google’s big goals for Gemini

Are you ready for Google Gemini to really know you?

You’ll eventually be able to connect all your Google apps to Gemini, which will make it more proactive.

“What if it could see what’s coming and help you prepare, even before you ask?”

The three main goals:

Personal

Proactive

Powerful

Google says you can ‘Ask Anything’

It sounds like the tagline for Google Search is changing thanks to the integration of all this AI.

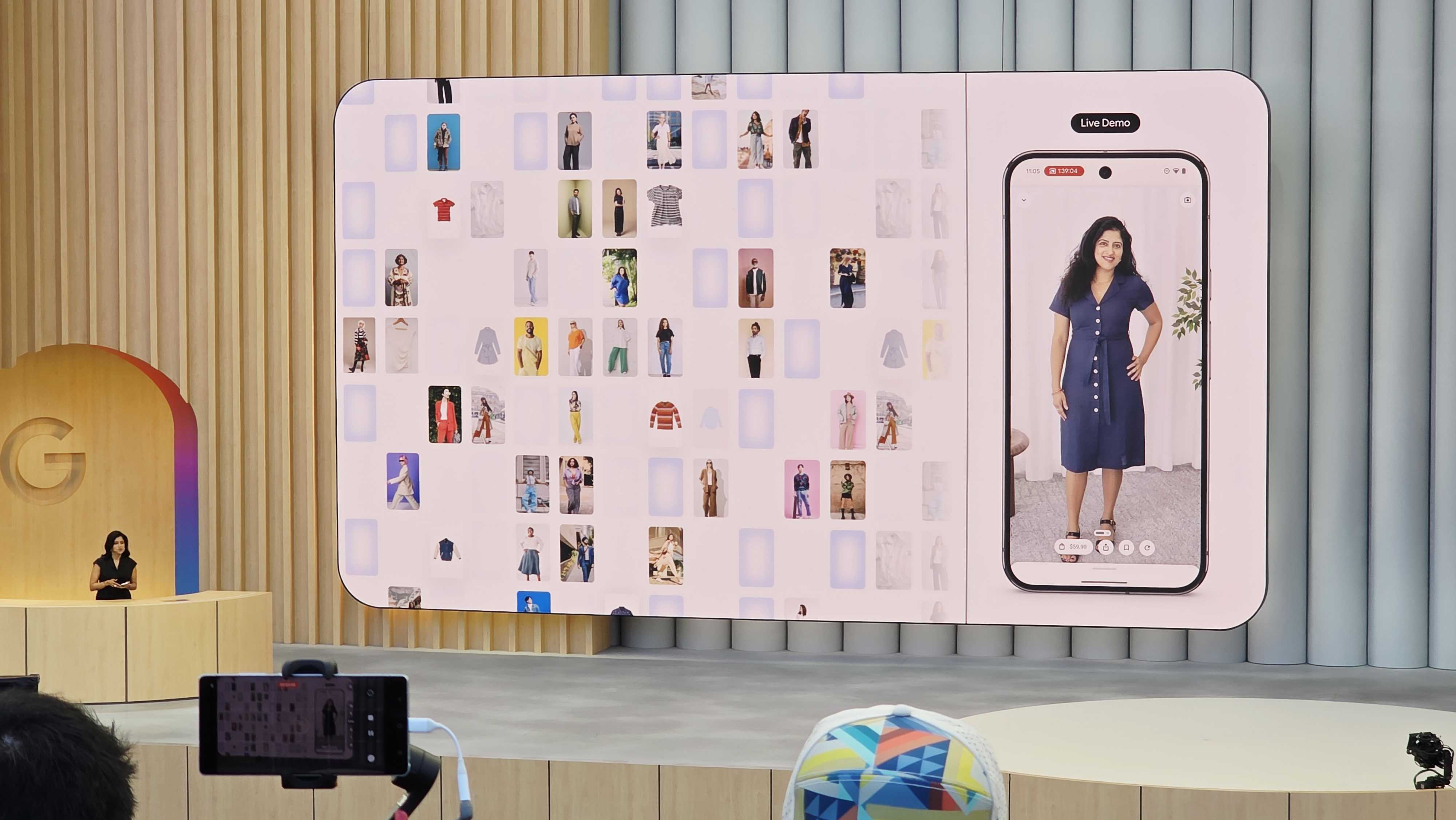

Type it, say it, snap it, film it.

Google built a new model to better understand the human body. The model also understands how clothes and materials fold, stretch, and drape on people.

You feed it a full-length picture, and then it lets you try on the outfits, virtually.

It’s a powerful demonstration.

And, yes, you can use agentic features to track sales and have the AI buy the outfit for you.

2025-05-20T18:04:12.795Z

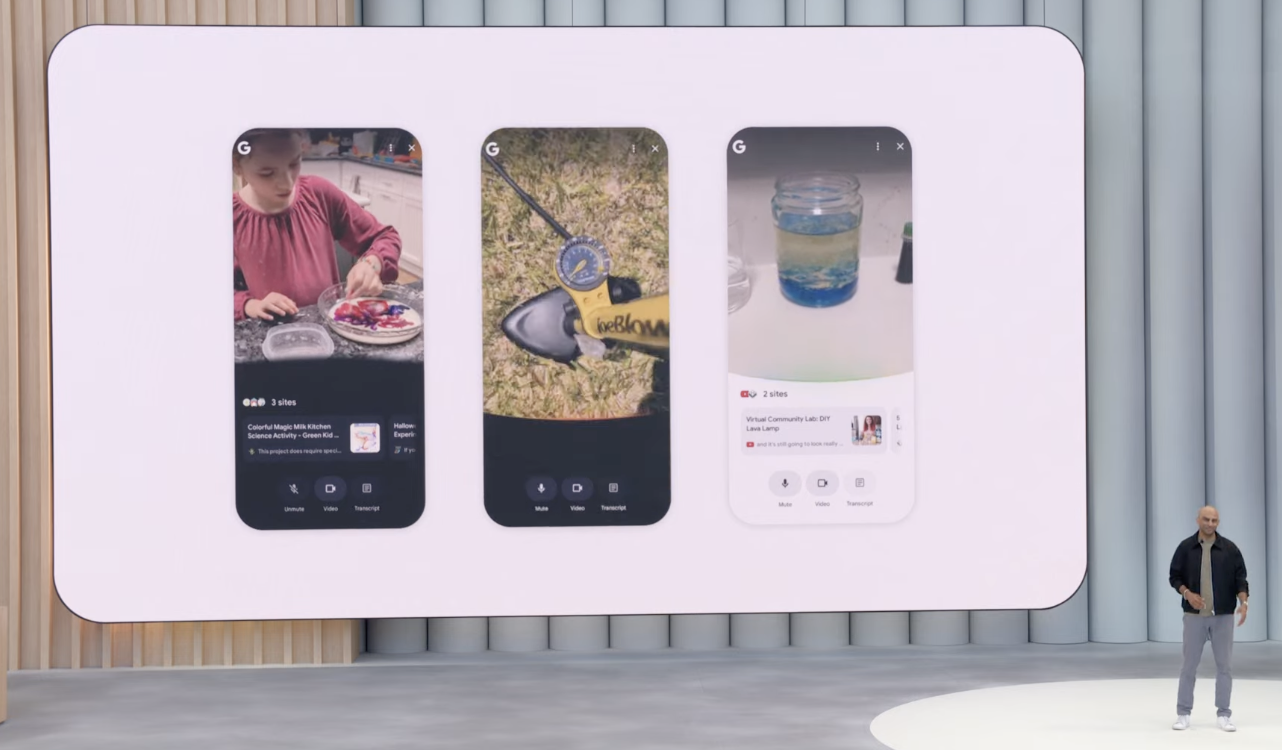

Multimodal Search

Project Astra’s visual capabilities are coming to AI Mode in Search.

It’s called Search Live. We’re seeing some real-world applications.

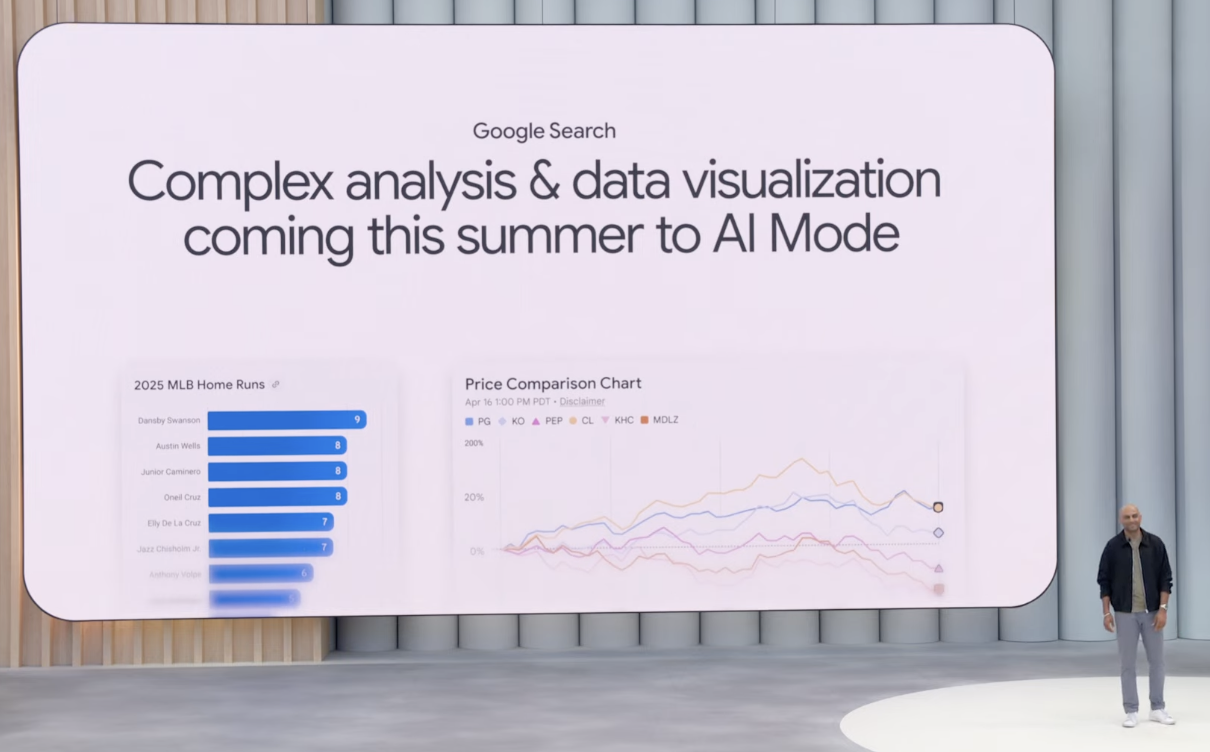

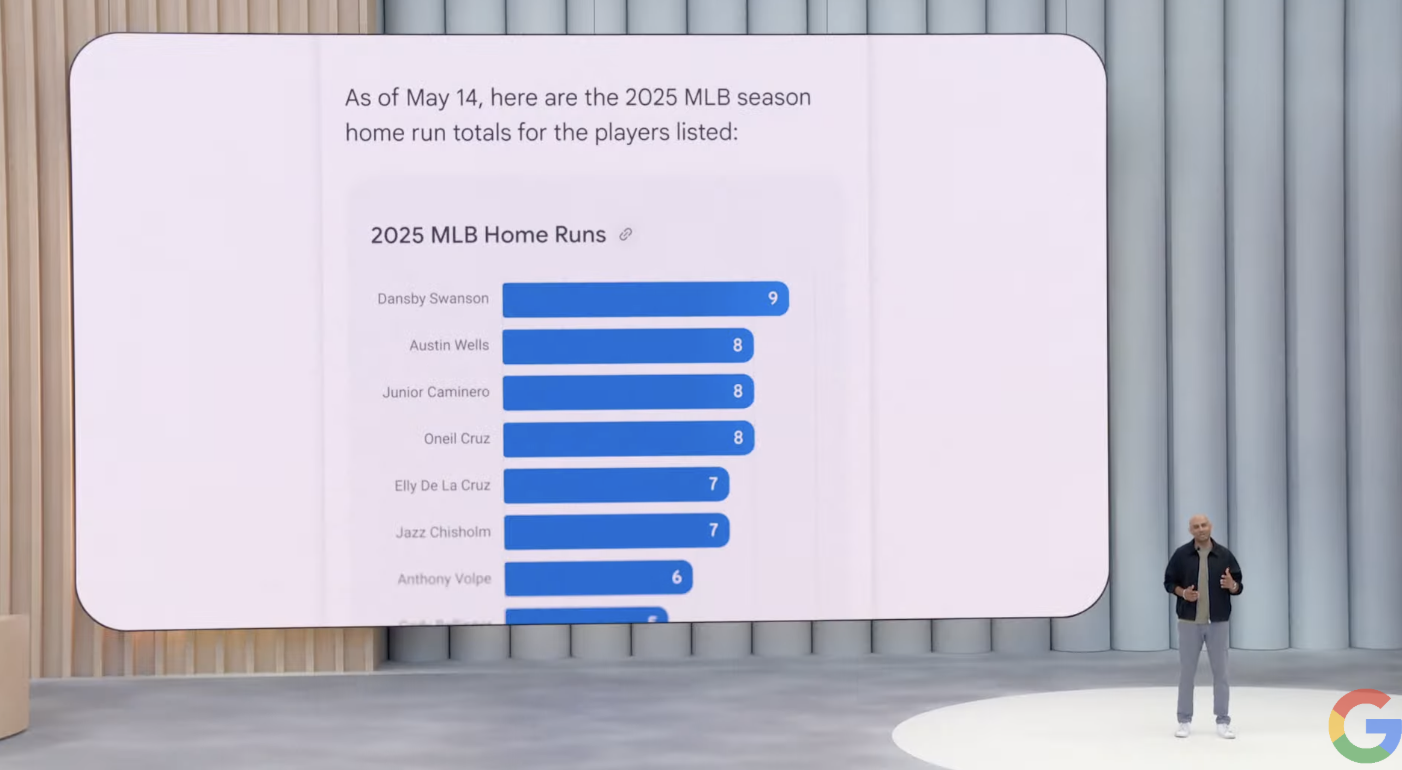

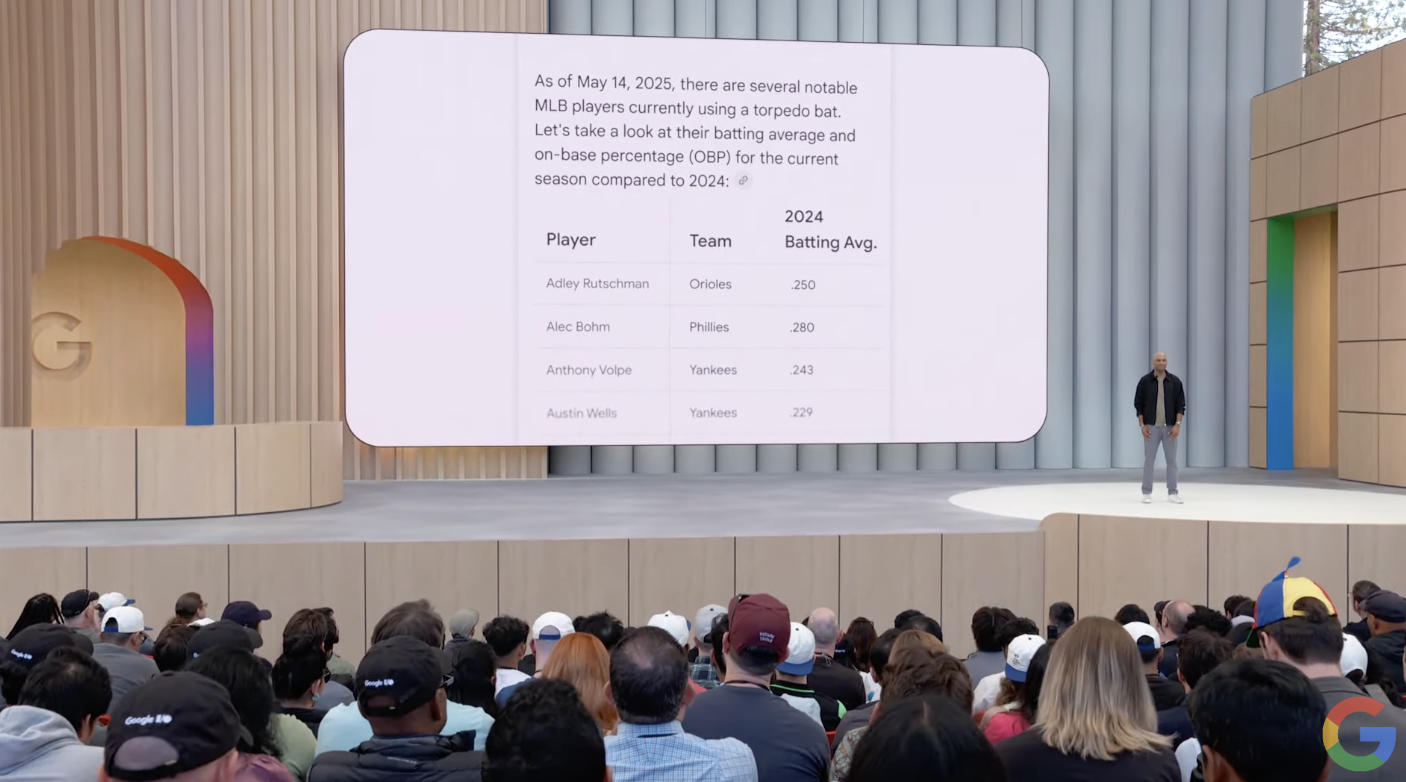

And now, for a sports break

Google is showing how Complex Search and Data Analysis in Google Search AI Mode can answer complex sports questions. It’s coming this summer.

2025-05-20T17:55:58.169Z

AI Mode will almost take over

Google says AI Mode capabilities will eventually blend into Search.

Personalized suggestions are coming soon.

Back to Search but with a big AI upgrade

Pichai is back on stage, talking about Google Search.

He’s talking about how 1.5 billion users use AI Overviews every month.

AI Mode is coming to everyone in the US starting today.

A new era of scientific discovery

Google believes we can have an incredible “golden age of discovery and wonder” in the areas of health and science if AI is developed safely.

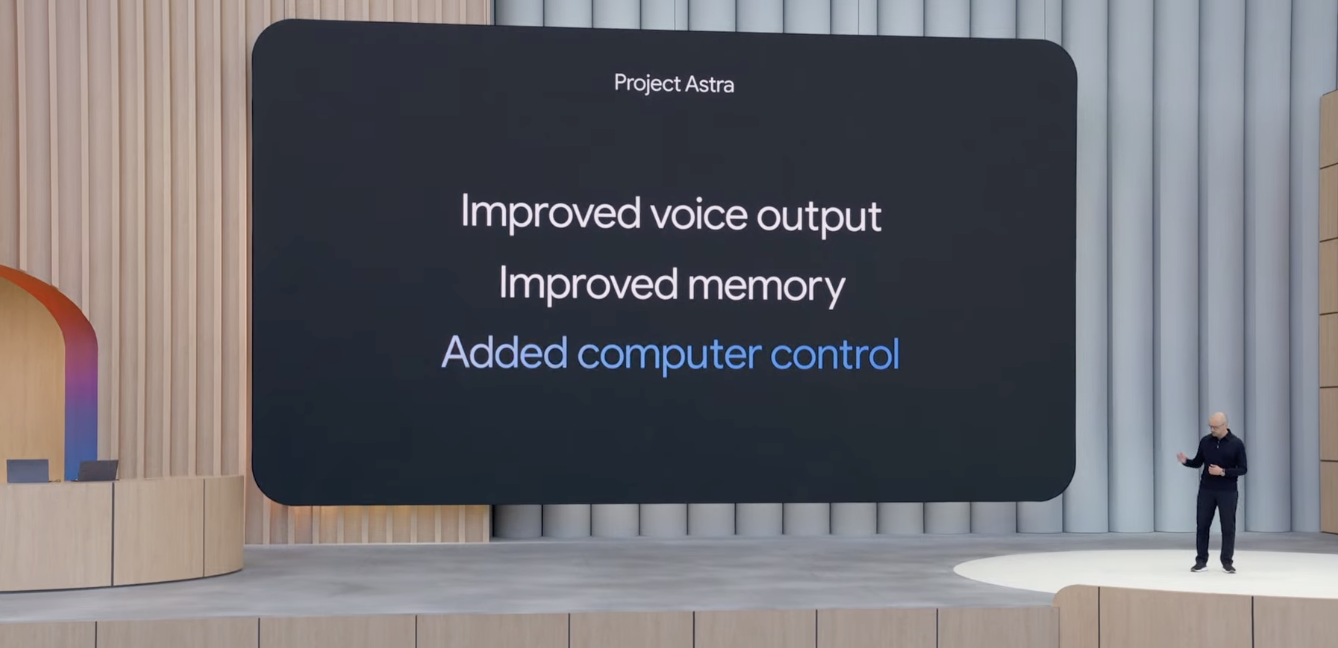

Project Astra in the real world

Google is showing off the latest version of Project Astra as a universal AI assistant. The scenario is a very natural interaction between Project Astra on the phone and a guy trying to fix his bike.

The demo shows plenty of natural-language conversation, with the user asking Project Astra to check things online and the assistant handling interruptions.

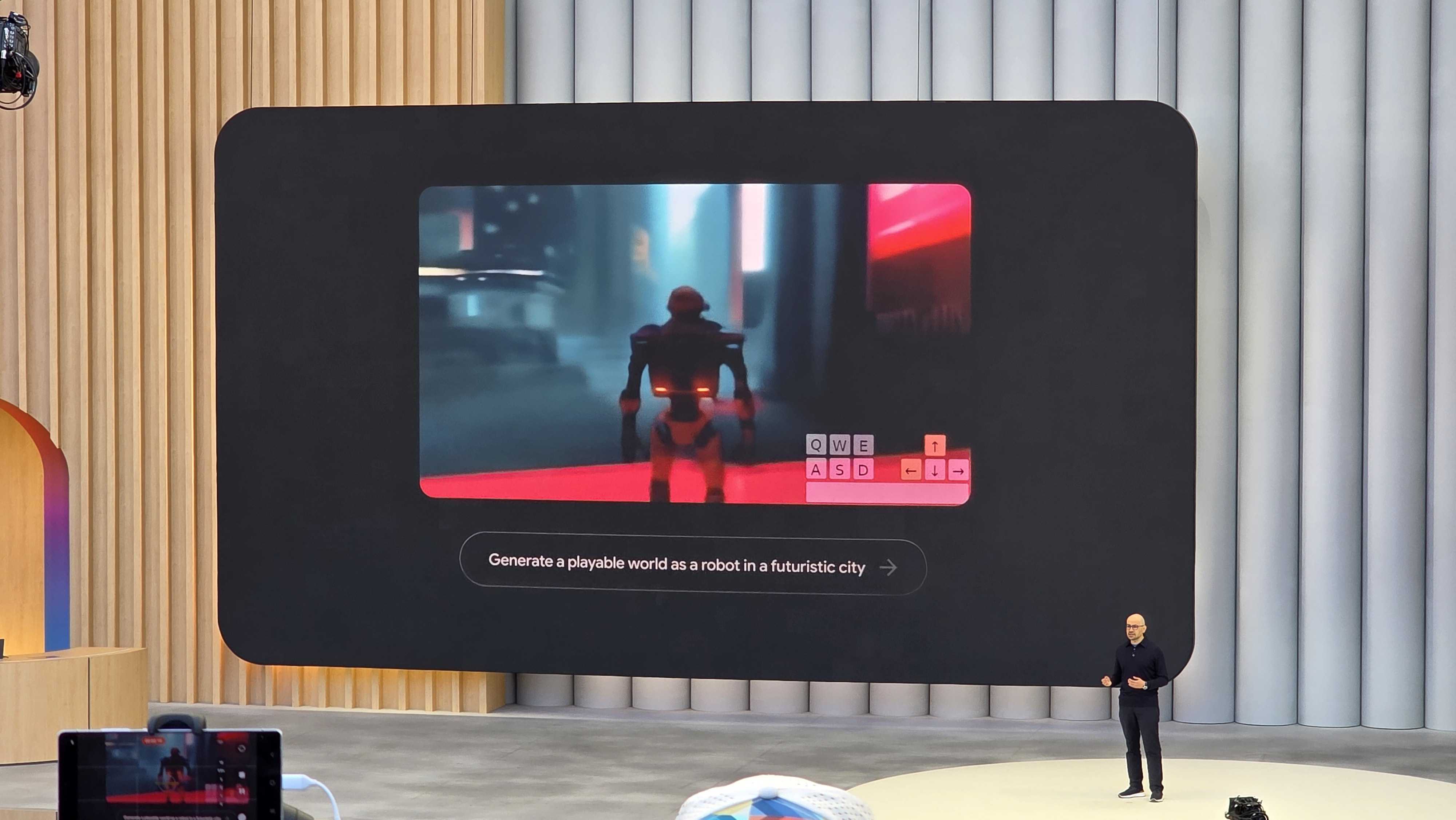

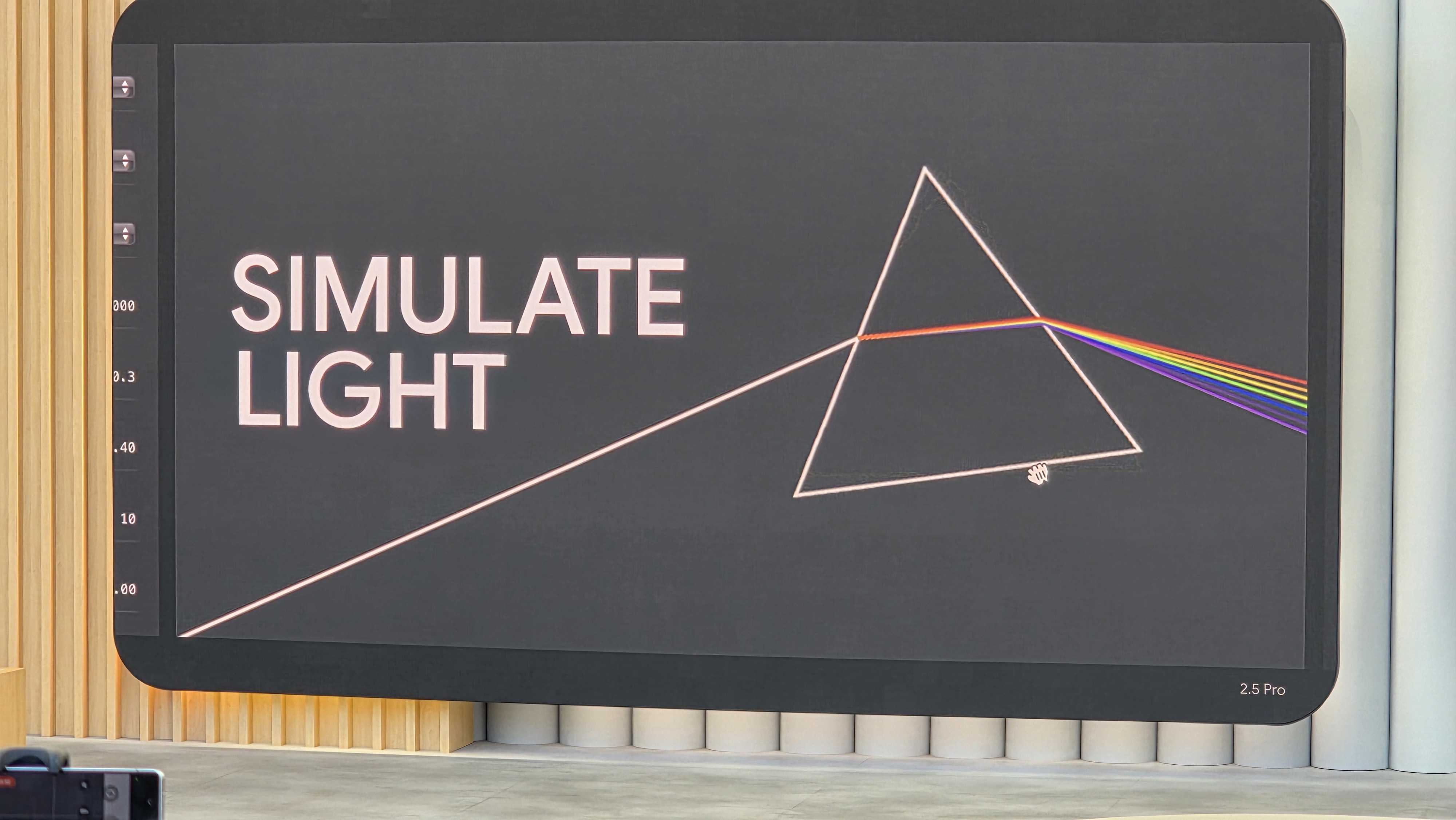

Google working on a World Model

This work informs everything from Veo to robotics.

New mode: Deep Think

This is so powerful that Google is still testing its safety. For now, it’s available to trusted testers.

Going deep

We’re into “Gemini Diffusion” territory. Definitely very developer-focused.

The processing went so fast that the applause was muted when she got her result.

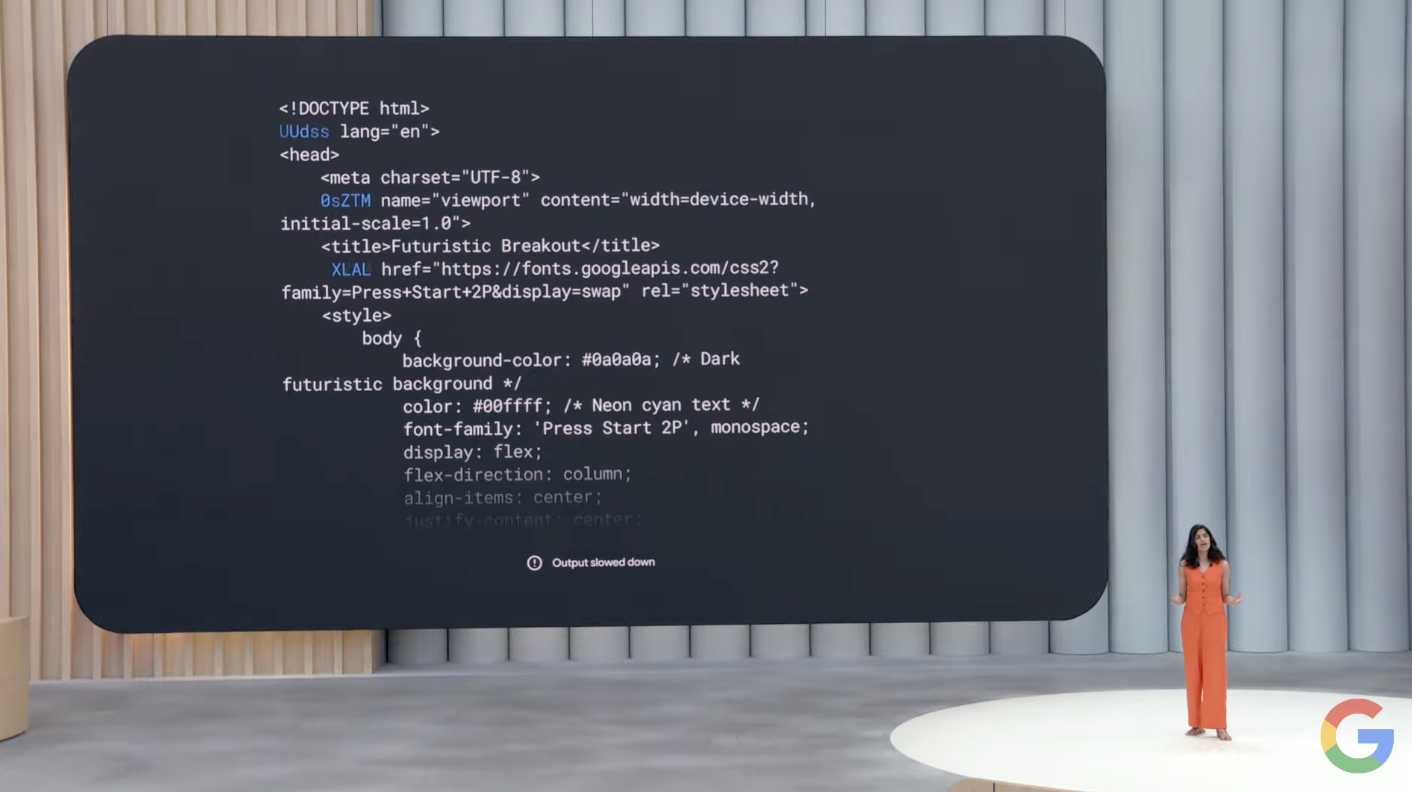

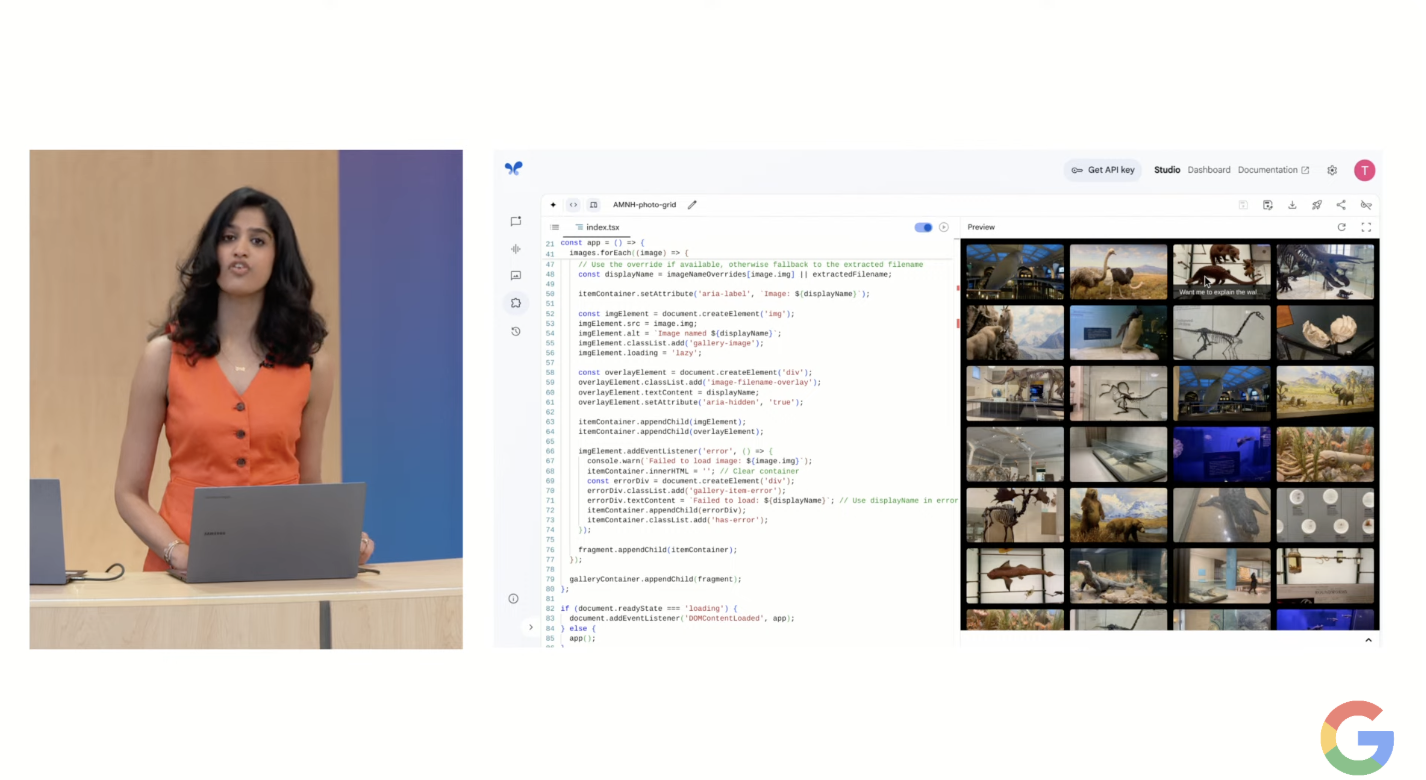

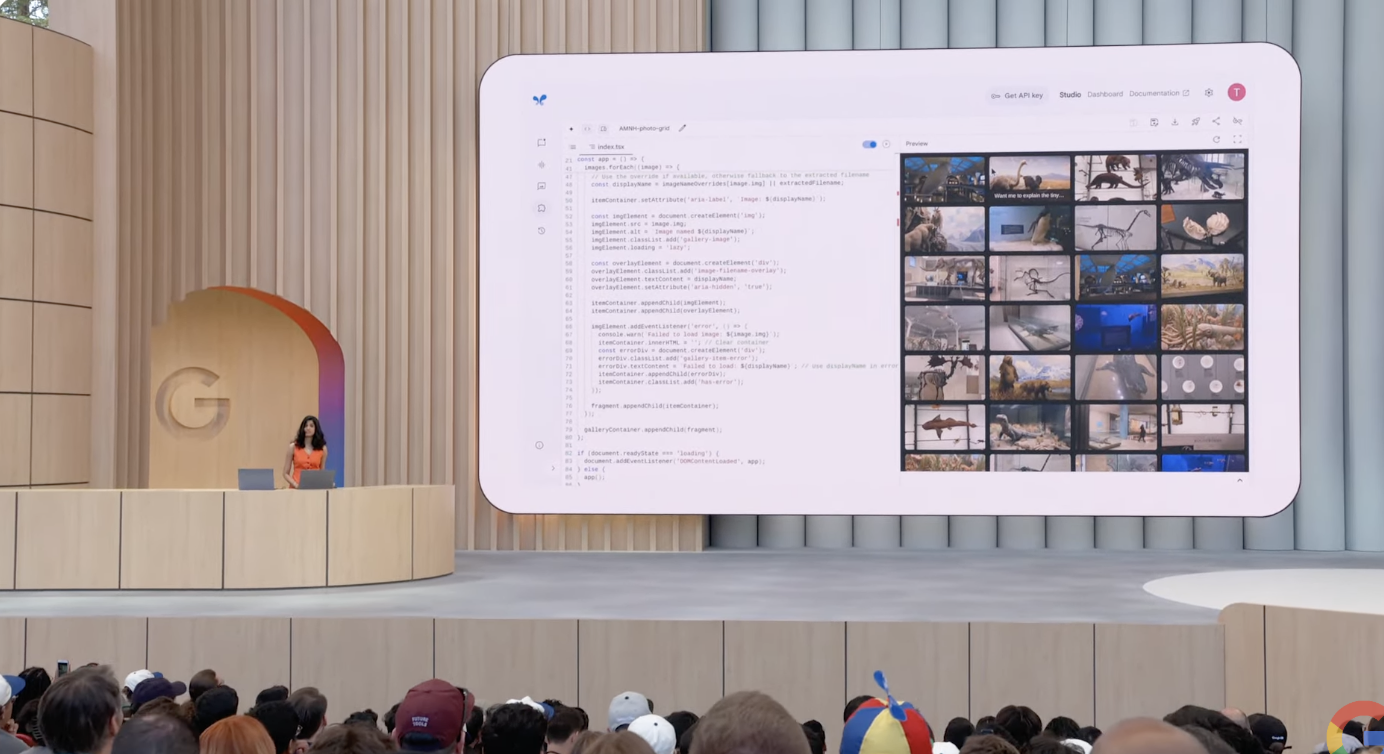

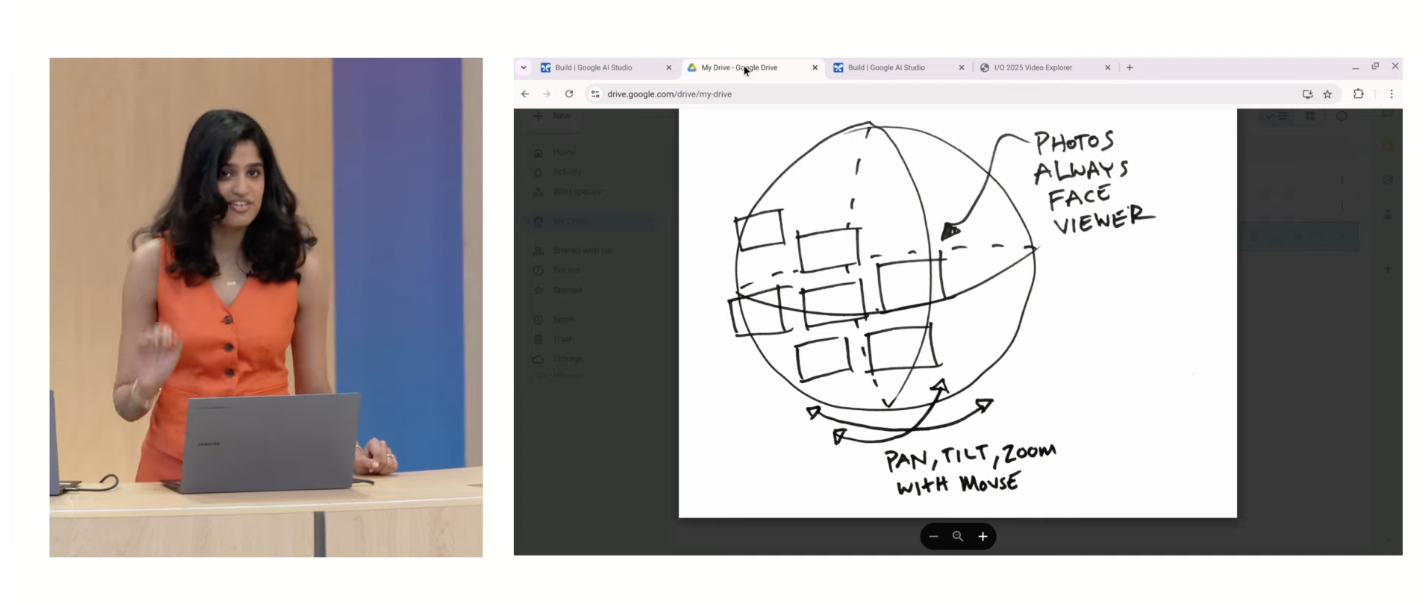

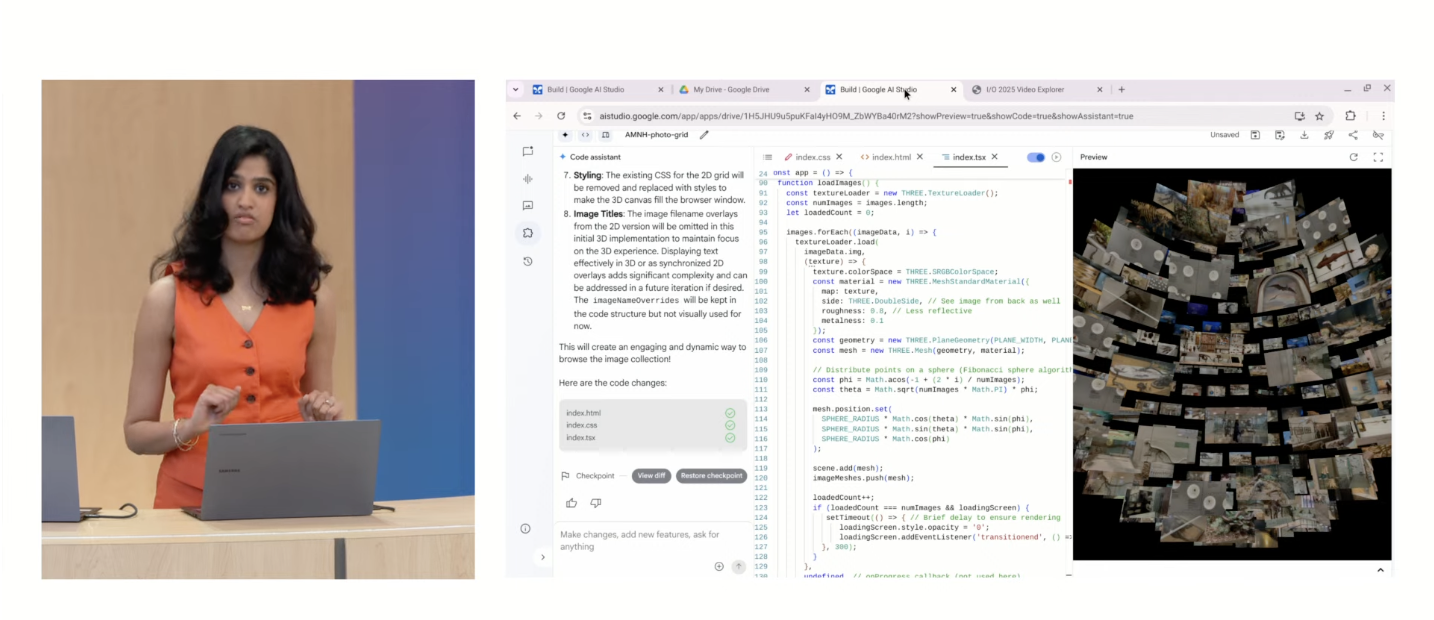

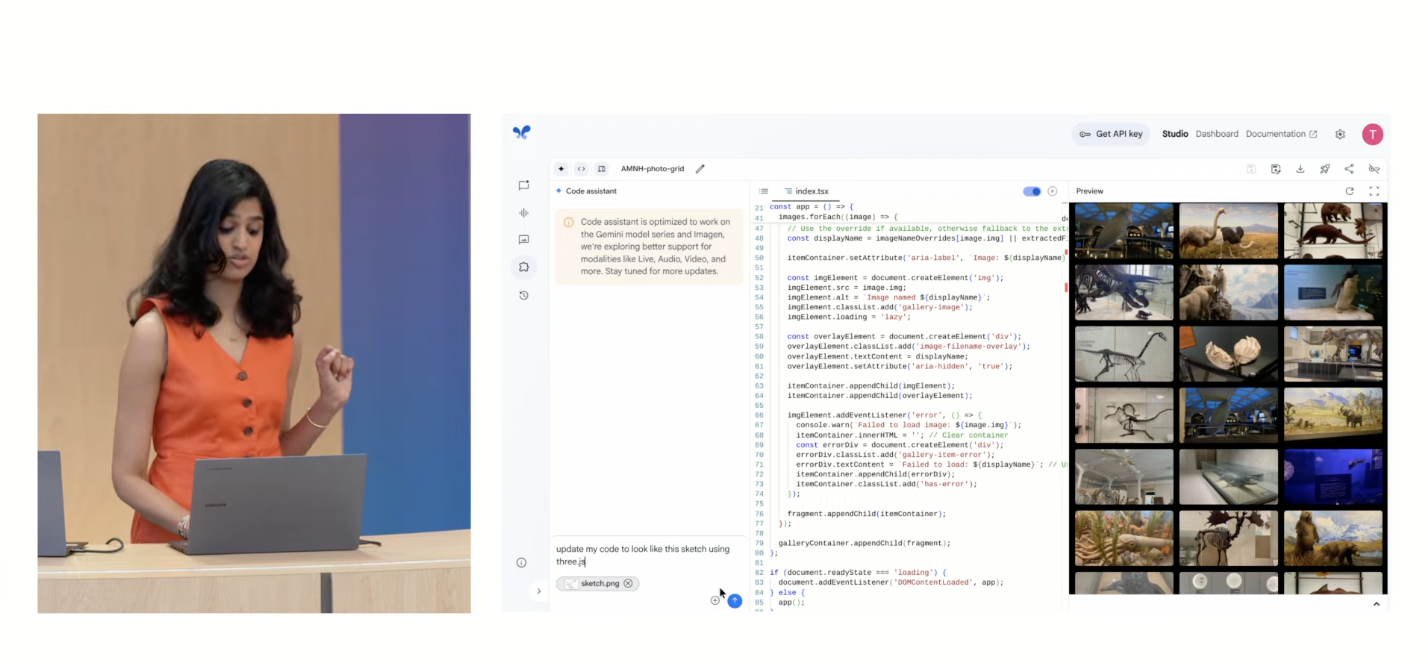

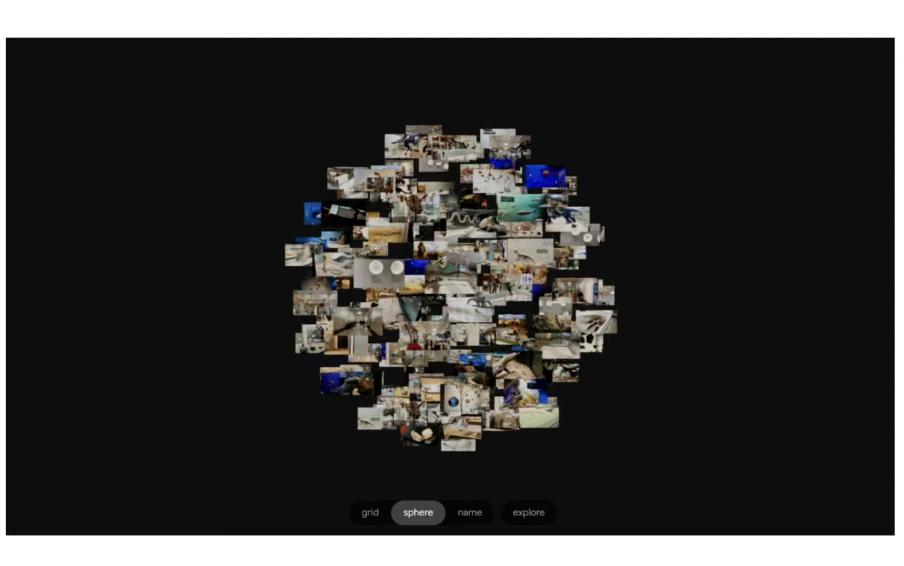

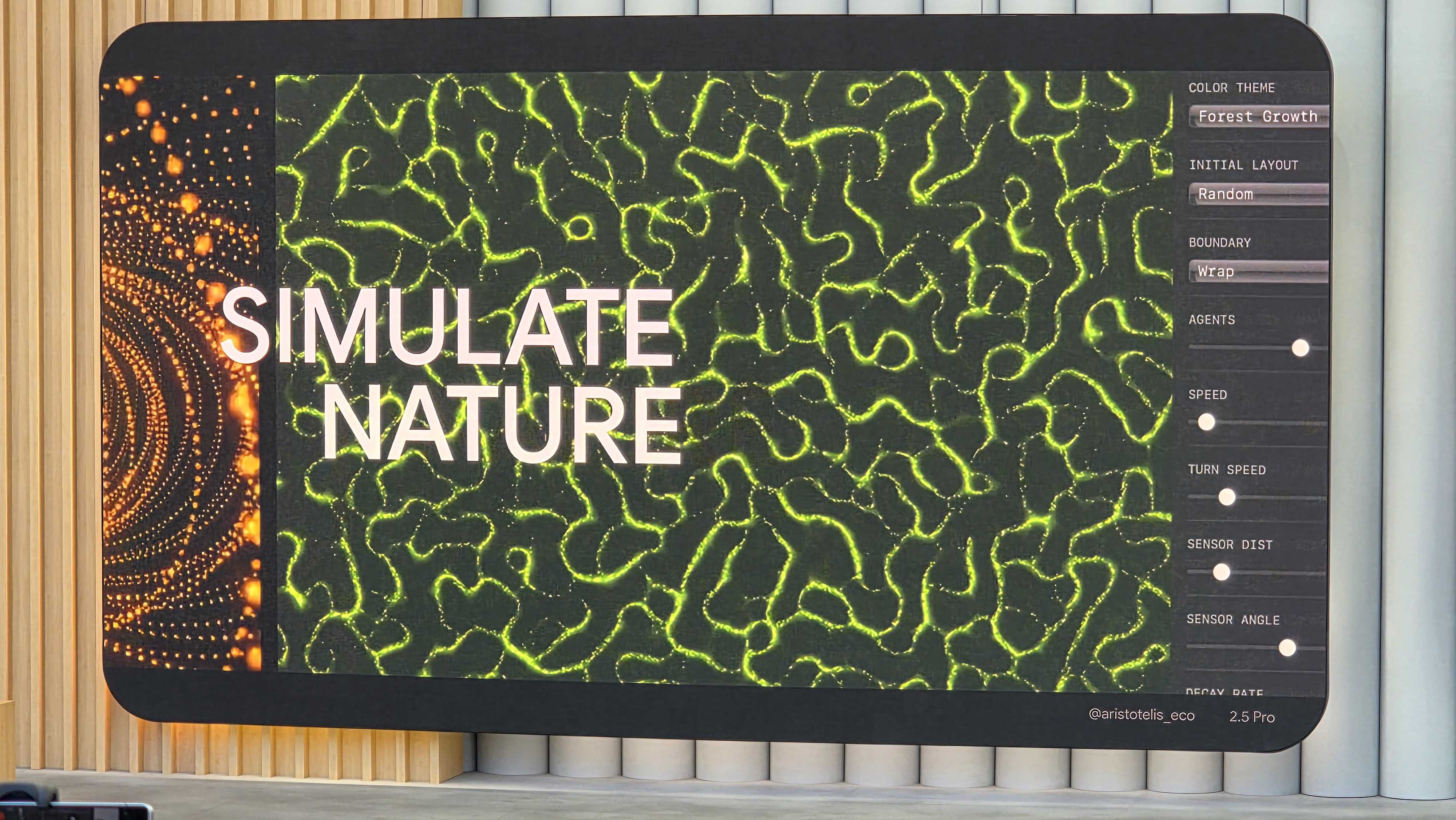

Live coding in Gemini 2.5 Pro

We’re watching some real-time code and web app generation in Gemini 2.5 Pro. She did have to cut the generation time short (by 2 minutes) and go “baking style” to show us the final app.

Native language Text-to-Speech capabilities

Gemini 2.5 Flash has some very powerful language capabilities on the way.

What can you do with Gemini?

Here are some ideas:

Nobel Laureate Demis Hassabis in the house

The DeepMind CEO is going over the key models, including Gemini 2.5 Pro and a new version of Gemini 2.5 Flash. Flash will be generally available in early June, and Pro comes soon after.

Personalization, FTW?

With your permission, Gemini could look across your apps and use that information to create things like personalized smart replies in Gmail.

This sounds both amazing and slightly scary. Google would be looking inside all your stuff and handing over details to Gemini.

Coming this summer in Gmail.

Project Astra heading to Android and iOS

Project Astra, the powerful mobile AI package that can see and react to real-time input, is coming to iOS and Android today. The demo was good because it showed how Project Astra could keep correcting the user.

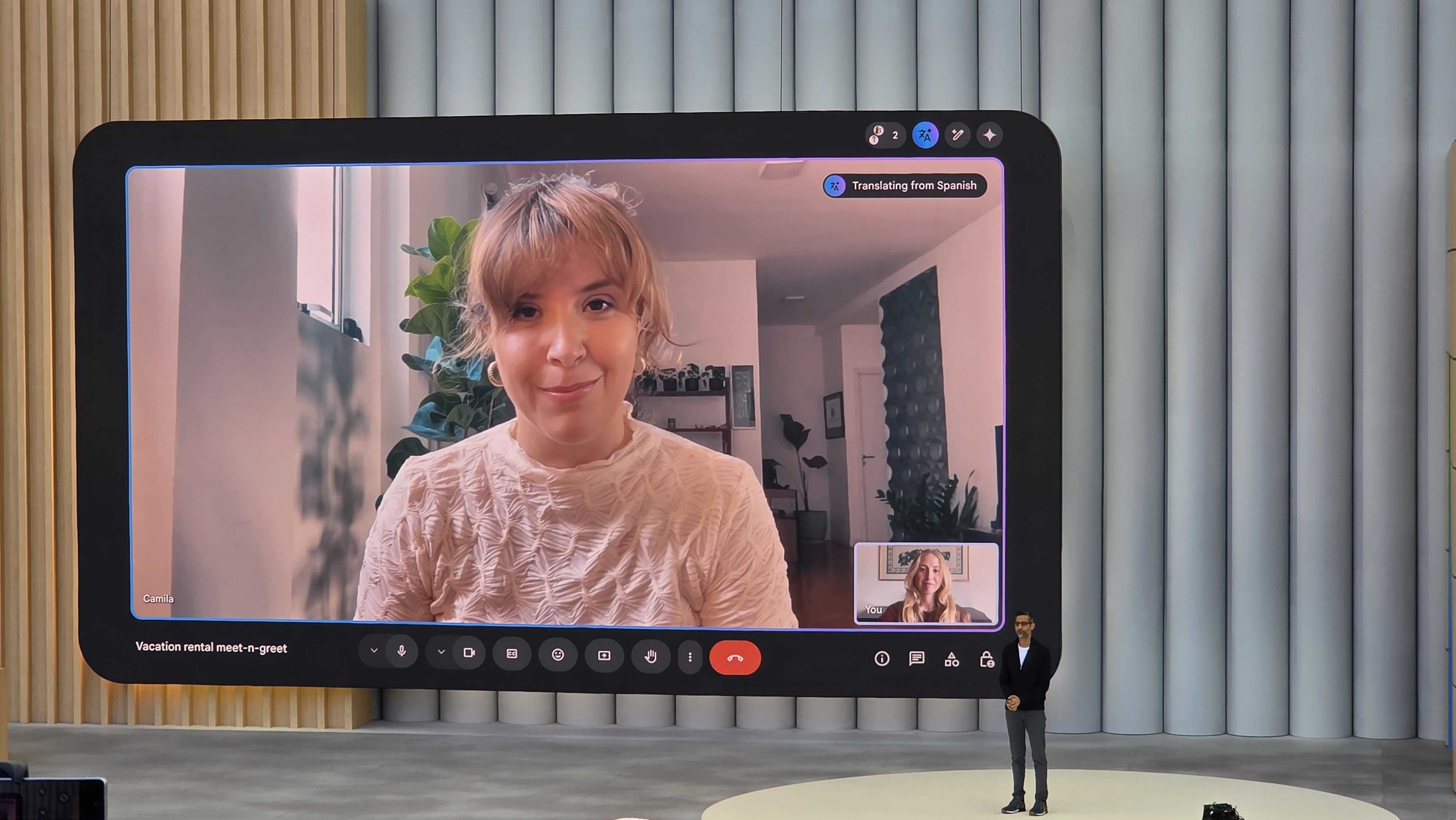

New real-time translation capabilities

Google has some cool real-time translation capabilities. The demo looked and sounded pretty good.

Project Starline becomes Google Beam

Google is taking video conferencing to the third dimension with a massive upgrade that turns Starline into Google Beam. It uses six cameras in one device to create a 3D version of you for your video call. HP is the first partner.

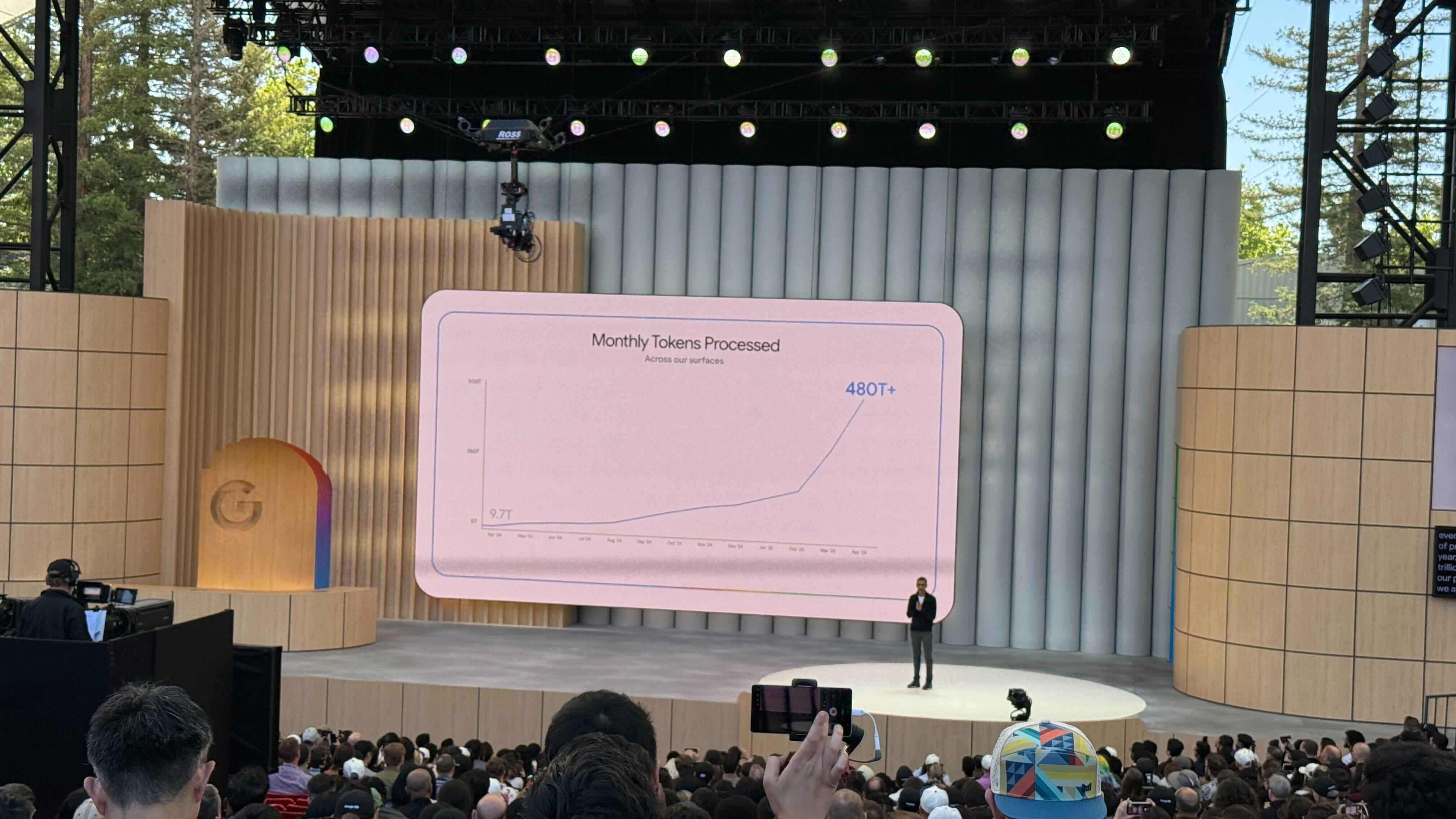

So many tokens

Just look at how many more tokens Google is processing since this time last year. Wow.

Some recent AI highlights

Sundar Pichai is walking us through recent AI breakthroughs.

Sundar Pichai takes the stage

There’s Google CEO Sundar Pichai. He says every day is Gemini season at Google.

Here we go: A preview of powerful Generative Video capabilities

The countdown is on

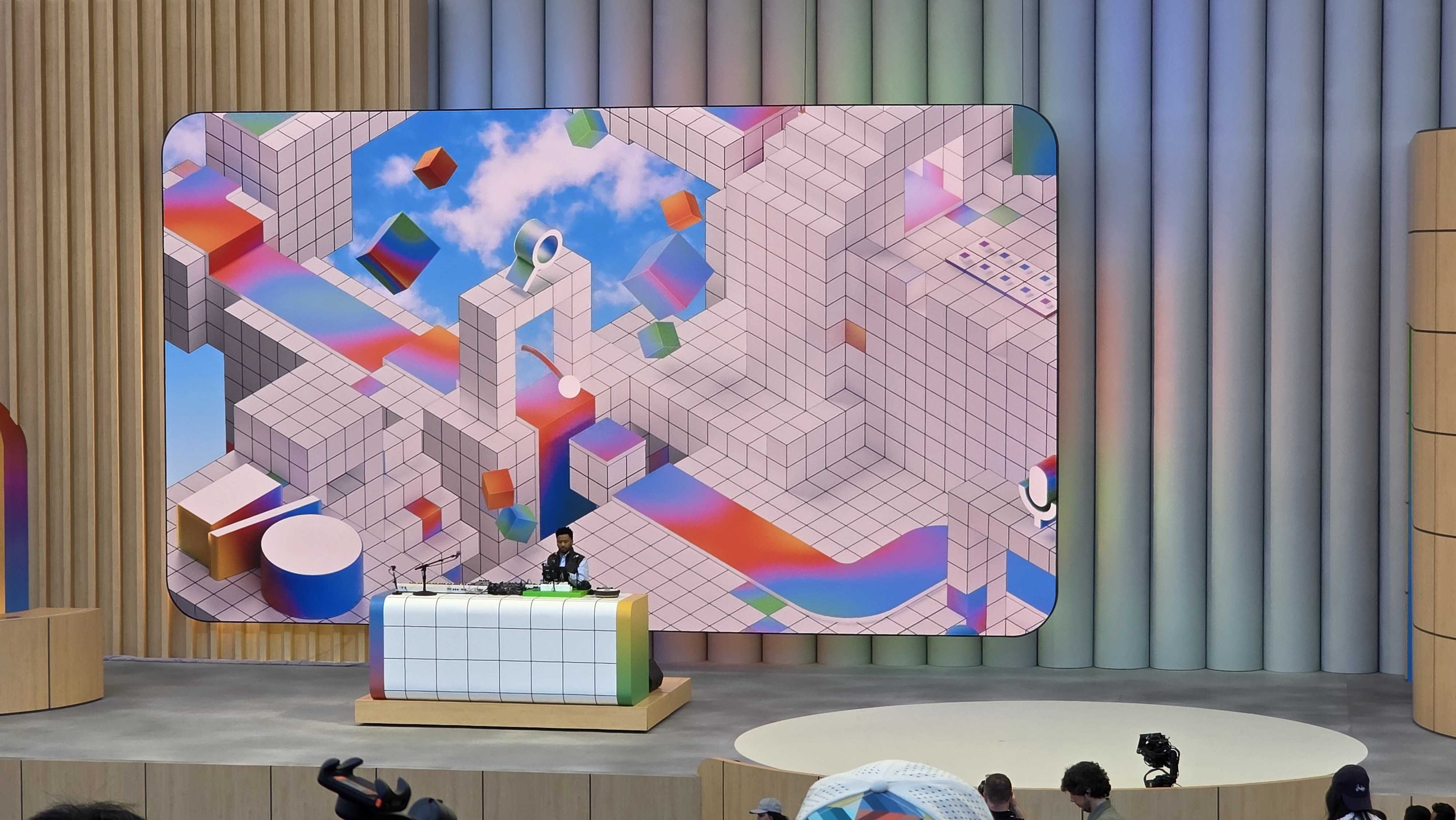

With less than 10 minutes to go, the DJ has left the stage. Now we wait…

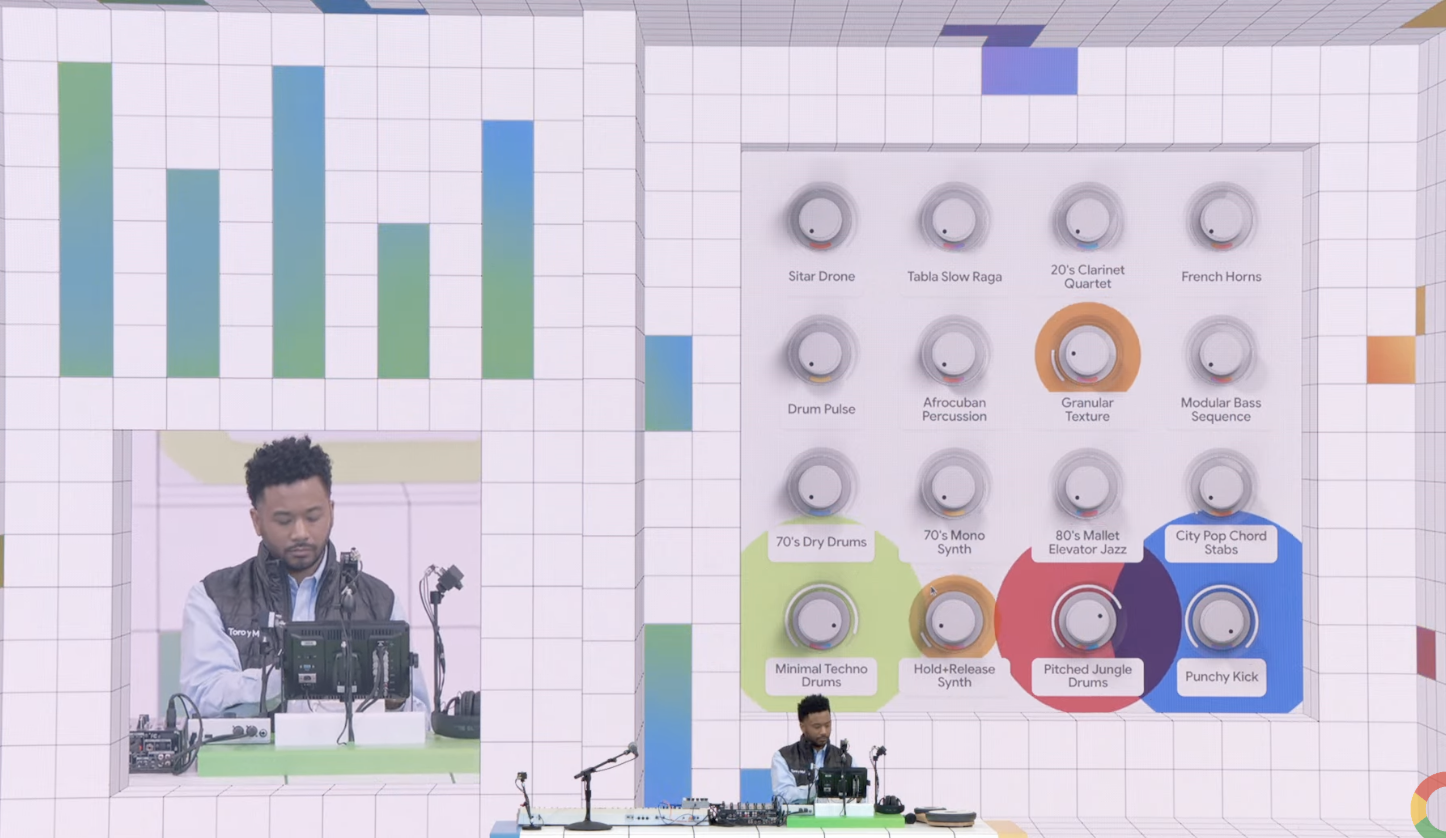

One man band

What’s kind of cool about this music is that it’s all coming from this one guy. He’s fast and smooth, turning all these knobs and occasionally singing a note, to make it happen.

The pace of the music is also picking up and may continue to do so until we kick off the keynote at 1PM ET.

A musical interlude

Lance Ulanoff jumping in here.

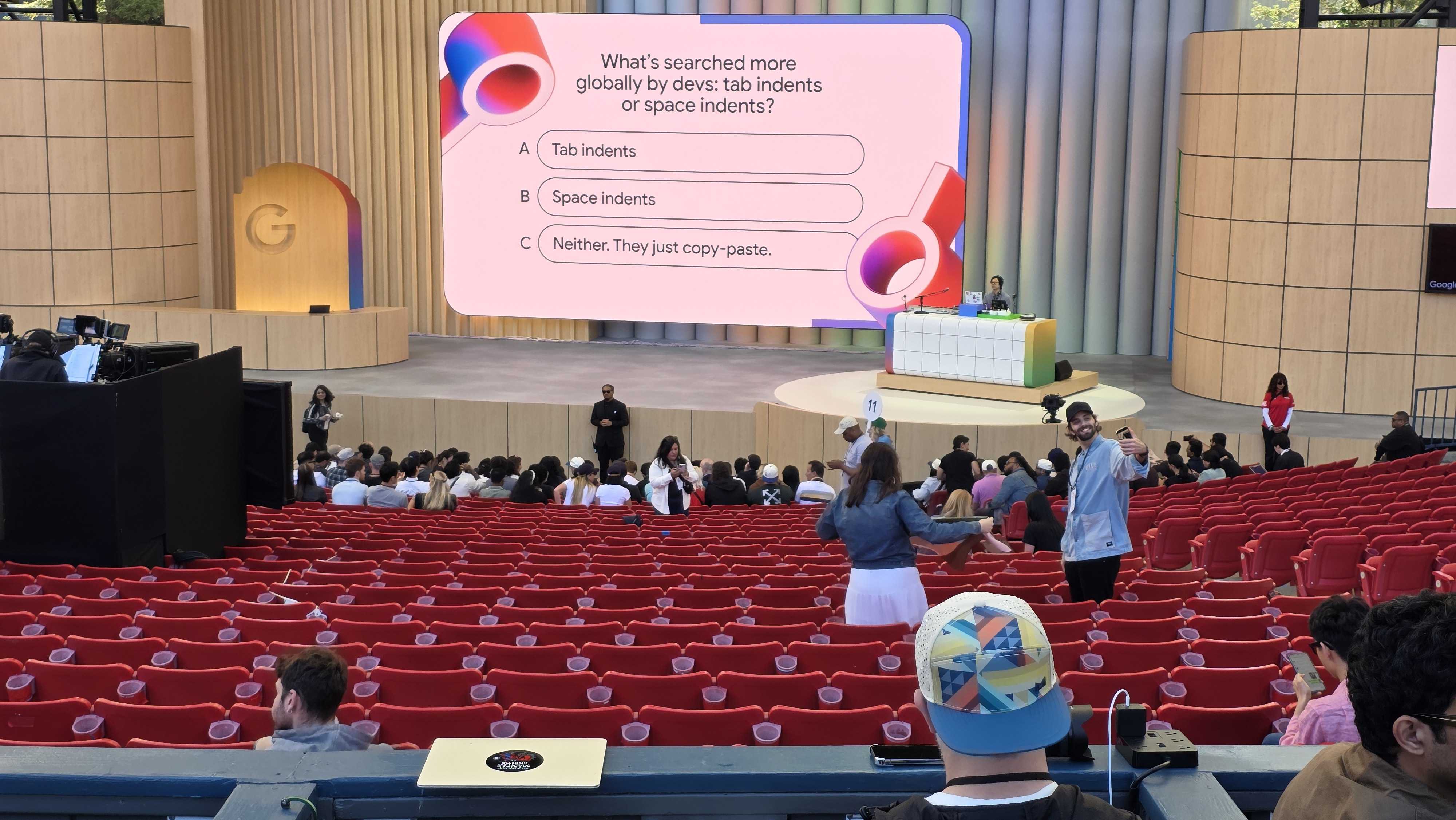

Google I/O attendees are currently being treated to what Phil Berne calls “Ambient music with no explanation, after about an hour of vibe coding.”

It seems to be setting a mood, but not one of attentiveness. The Google DJ did tell people they could look at their phones.

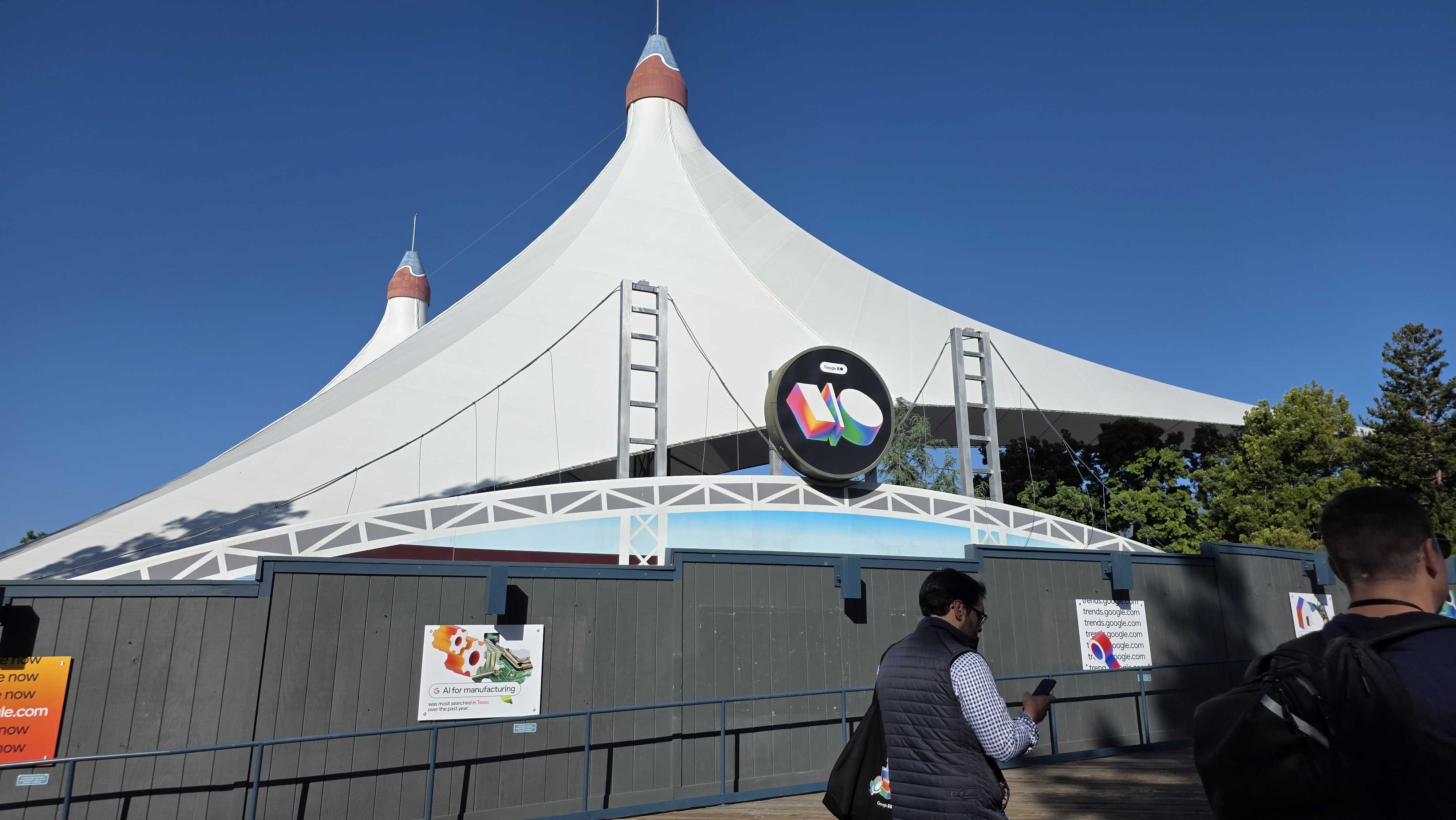

People are taking their seats

People are now taking their seats in the Google I/O 2025 amphitheatre. The TechRadar team is on site and will be bringing you all the important updates live from the event, which is scheduled to start at 10 am PT / 1 pm ET / 6 pm BST.

The live stream has awakened… the show will start shortly

If you’ve got a stream of the Google I/O keynote open in a browser window, you’ll find that it has suddenly sprung to life, playing a repeating animation and some, er, interesting music.

It’s a sign that there’s not long to go now, so it’s time to get some snacks and a drink ready, because we are about to set the controls for the heart of Google, where we’ll learn all about the exciting AI developments that the tech giant has in store for us.

A ticker-tape message at the bottom of the video says “Welcome to Google I/O. The Show Will Start Shortly.”

More Google I/O photos

The TechRadar team is getting closer to the presentation theatre for Google I/O 2025. Here are a few of the things we’ve encountered along the way. Note: no pepper spray is allowed at Google I/O 2025!

The four stages of Google I/O

While we think the new announcements in the Google I/O keynote today will be all about AI, particularly around Gemini, it’s worth bearing in mind that AI is just one of four stages at Google I/O 2025. The four stages are: Android, AI, Web and Cloud.

So, we think we’ll be getting announcements in all four of these areas today. There are only 90 minutes left to go, and we can’t wait to bring you all the new announcements!

Arriving at Google I/O 2025

It’s pretty early in the morning for technology journalists to function at full capacity, but the TechRadar team are on site and ready to report on Google I/O 2025. Here’s Phil. Ready, Set, I/O!

Taking a look at Google Labs

One way of trying to predict what Google might be announcing at its Google I/O event tonight is to take a look at what it is cooking up in its Google Labs development area. Google Labs is accessible to most people and lets you try a few of the fun tools that Google is working on before they are ready.

Looking in there right now I can see AI Mode, which is Google’s way of competing with ChatGPT search by using Gemini to help you search online. Also in there is Whisk Animate, which uses Veo 2 to turn your images into animations. I really like the look of Daily Listen, which uses Google’s powerful Audio Overviews (the podcast-like audio created in NotebookLM) to produce a daily summary of news for you. Finally, there’s Little Language Lessons, which uses AI to help you learn a foreign language.

Will any of these projects come to fruition tonight at Google I/O? Stay with us here at TechRadar to find out.

How will you watch it?

There are about three hours left to go until the Google I/O event! How will you watch it? I think I’ll be tuning in on YouTube. Not only does YouTube work across most devices, but it’s a pretty solid system that can handle the sort of volumes you’d expect from a big event like this.

Don’t forget that we’ll be bringing you updates throughout the event on this blog, so keep a TechRadar window open for all the updates.

What feature do I want the most?

It’s Graham Barlow, TechRadar’s Senior AI Editor, taking over the live blog now in the run-up to the big Google I/O event in a few short hours. I can’t wait to see what Google has in store for us, particularly with Gemini, its AI chatbot!

My current favorite feature of Gemini is Live, which gives you the ability to have a human-like conversation with Gemini. I like this feature so much that I’ve actually hacked my iPhone’s Action Button so that it launches straight into Gemini Live.

Forget Siri, Gemini Live is the sort of AI tool that I wish Apple had by default. Want to know how to add Gemini Live to your Action Button on iPhone right now? Don’t worry, I’ve written up the details of how to do it.

On some phones, like the Pixel 9 and Samsung Galaxy S25, Gemini can ‘see’ through your camera and you can ask it questions about what it’s looking at. It would be great if these features were expanded even further in tonight’s big event.

He adds, “Of course, that’s not all Wear OS fans are getting. We’re also due to get better battery life, to the tune of 10% more efficiency (for Pixel Watch users, that’s an extra 2.4 hours of use) and Gemini on your wrist.

We know you’ll be able to ask Gemini to do simple tasks for you, like remembering locker key codes, but we hope there are more surprises in store this evening. I’d personally love a Gemini notification summary feature, allowing me to summarize long email chains or WhatsApp group chat message threads without pulling out my phone.

What we’re not likely to see today is any new wearables hardware – Google will likely leave the Pixel Watch 4, and any Fitbits it decides to throw our way, until later in the year.”

Watching for signs of Wear OS 6

We asked Matt Evans, TechRadar’s Fitness & Wearables Editor, to give us more info on what we can expect from Wear OS 6.

He said, “We actually know quite a bit about what Wear OS 6 is going to entail, and expect this to be shown off in full during this year’s Google I/O.

A redesigned interface with Material 3 Expressive will create a seamless (or at least “less seams”) transition between Android and Wear OS, with a similar design language. A widget stack like watchOS 10’s redesigned Glances replacement on the best Apple watches is coming, and it will use the Pixel Watch’s rounded face to get smaller and bigger as you scroll.

Check out the GIF above showing it in action, as previously revealed by Google.”

A Pinterest competitor?

Some last-minute rumors hint at Google launching a rival to Pinterest. This would be another attempt at a social media platform after Google’s last one, Google+, went offline.

According to sources, the new app will be based on image search results on Google, enabling users to collect different pictures into collections, which can then be shared with other people. If something like this is in the pipeline, then Google I/O 2025 offers the perfect platform from which to announce it.

As someone who has never really used Pinterest, this doesn’t fill me with excitement. But considering the website has millions of users globally, I suspect a Pinterest competitor could get some people talking.

Gemini’s image editing is super impressive

We recently compared Google Gemini’s new image editing feature to ChatGPT’s, and found that Google’s image generation is much better at sticking to the original image.

After comparing both Gemini and ChatGPT’s image editing prowess, TechRadar writer Eric came to this conclusion:

“Gemini’s image edits were fast and accurate, and mostly only changed what I asked in the way that I asked. I’d say it’s great, especially for quick edits. ChatGPT takes way longer to process the request, and wasn’t great at getting it right the first time. It would likely require a lot of back-and-forth in editing prompts to get just the changes you want unless you use the highlight tool, which takes up some extra time, too.

I still think ChatGPT’s overall image quality is higher than Gemini’s, but that only matters if you have patience and if ChatGPT gets it right the first time. I suspect I may use ChatGPT to make any images, but turn to Gemini if I want to make a few adjustments to an image that I otherwise find appealing.”

Google to cement Gemini as a leader in AI

I’m not afraid to say it, I use Gemini more than I use ChatGPT, and honestly, I think it’s just easier to use.

The other day I used Gemini Live to help cook a meal, and it was incredible asking AI questions based on what it could see through my smartphone’s camera. Gemini also offers Deep Research, which we’ve tested thoroughly, and even image and video generation that’s as good as some of its competitors’ offerings.

In terms of consumer AI tools, Gemini is leading the pack in my opinion, and I expect Google to widen the gap with OpenAI’s ChatGPT at I/O later today. ChatGPT may be more powerful depending on your use case, but for the average consumer, Gemini ticks all the boxes.

Android XR, finally

We’ve been waiting to get more info on Google’s smart glasses for what feels like forever. The time might’ve come, however, as we’re fully expecting to get more of an insight into what Android XR and extended reality glasses are capable of at the event later today.

It’s rumored that Google and its partner Samsung will showcase XR glasses alongside a larger project codenamed Moohan.

Not long to wait now…

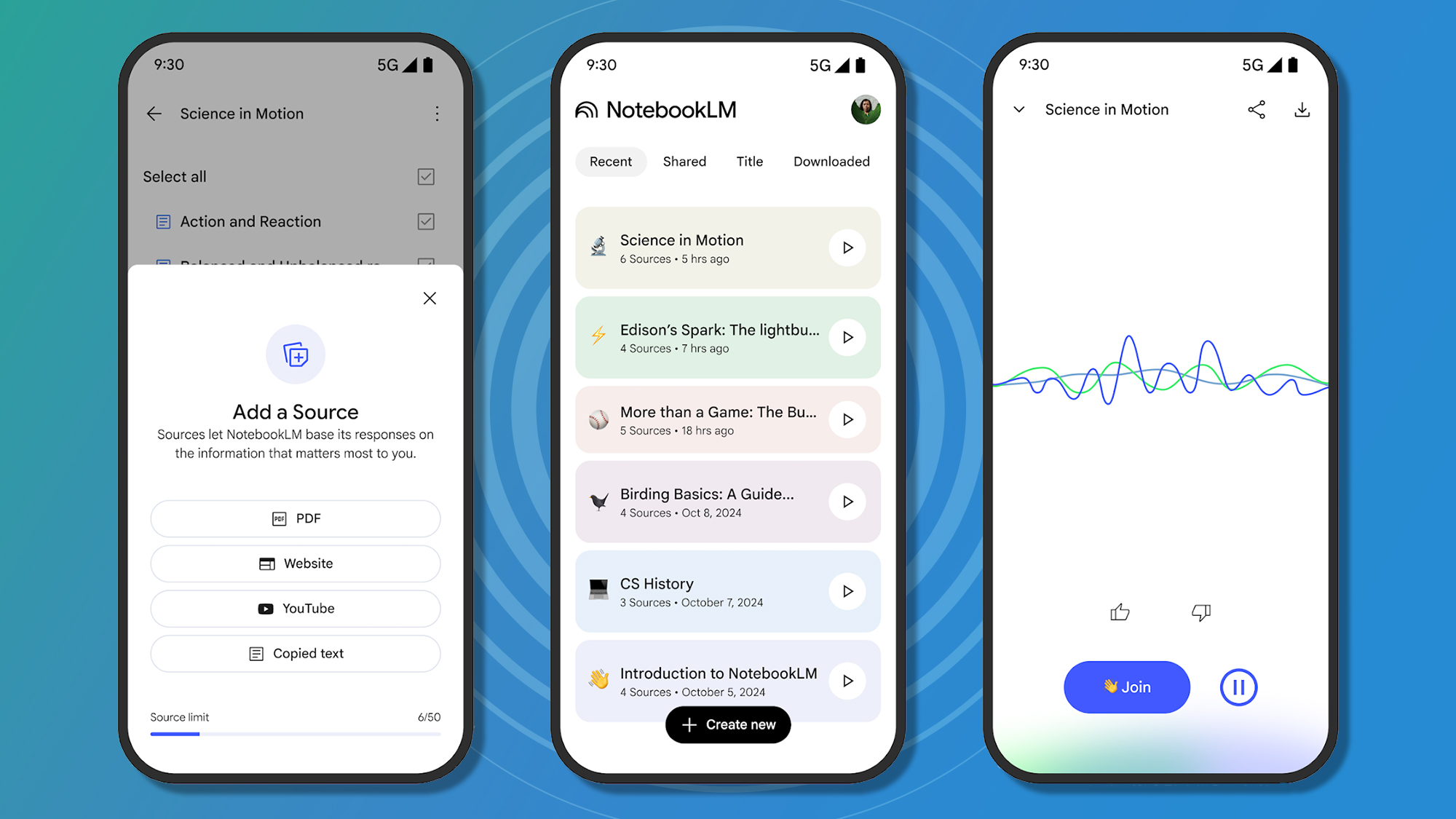

NotebookLM app now available on iOS and Android

Google started the day with the arrival of the NotebookLM app for iOS and Android.

If you’ve not used NotebookLM yet, it’s an impressive AI tool that is able to turn any written document into a short podcast clip with two hosts.

The tool is very useful for studying, research, and even just for a bit of fun. We’ve tested NotebookLM extensively at TechRadar, so I’ll leave a couple of articles below for you to read.

The app is completely free and available to download now.

Google Gemini updates?

In March, Google revealed Gemini 2.5 Pro Experimental, which the company called its ‘Most intelligent AI model’ yet.

I’m hoping to see more information on 2.5, and maybe a wider release. At the moment, naming schemes for AI models are getting increasingly hard to follow. Could Google rise up and find a way to simplify the process?

Gemini has so much to offer, from Gemini Live and Deep Research to Canvas and Veo; the capabilities are almost endless. If Google could merge everything so that the AI can determine what you need, when you need it, that would be a major shift in the way we interact with artificial intelligence.

Normally we get a look at the future of Android at Google I/O, but this year is different after Google showcased Android 16 last week.

If you want to see what the upcoming mobile software update looks like, we’ve covered the 5 most useful features coming with Android 16.

Google I/O 2025 takes place over May 20 and May 21, but the main event is the Google keynote, which starts at 10am PT / 1pm ET / 6pm BST (that’s 3am on May 21 for those in the AEST time zone).

The keynote will likely be around two hours long based on past form, and you’ll be able to live stream it from Google’s I/O website, and also on the Google YouTube channel.

We’ve embedded the YouTube video below, so you can watch it without even leaving this page if you want – and if you click the ‘Notify me’ button on the video, you can get a notification when the event is about to start.

One feature I expect Google to talk more about today is AI Mode. TechRadar writer Eric Hal Schwartz has tried Google’s AI Mode and says it might be the end of Search as we know it.

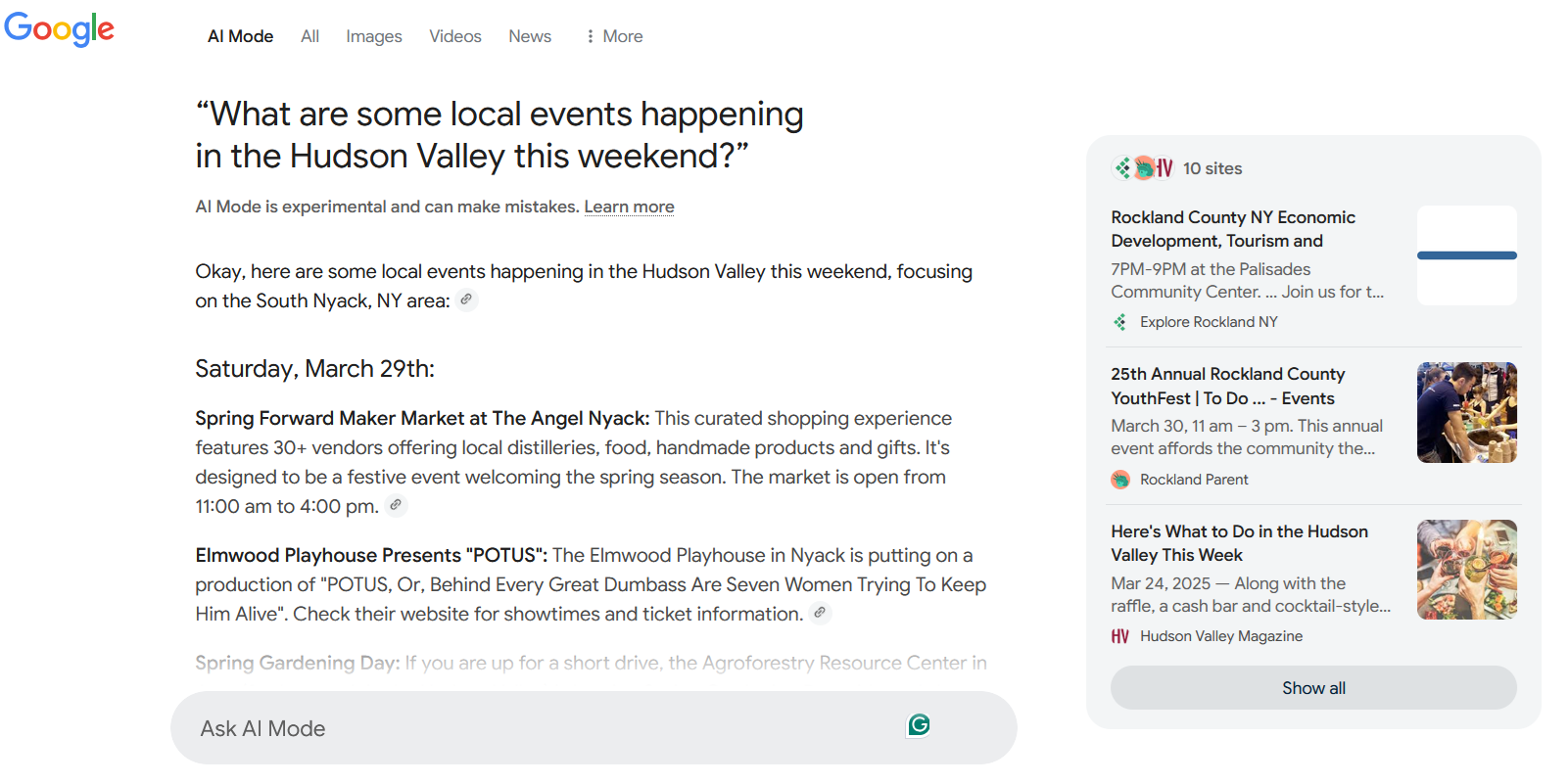

In his feature about the user experience, he says, “There’s always a lot going on in my area, but finding information on what’s on can often be tough. Instead of checking six different websites and hoping they’ve been updated, I asked Google through AI Mode: ‘What are some local events happening in the Hudson Valley this weekend?’

As you can see above, the AI responded almost instantly with a tidy roundup of events. The list of links to the right showed where it was pulling from, and each event had a short description and details of location and time, as well as a hyperlink to where the information came from. The diversity of sources stood out, and I can’t deny it was faster than a regular search plus time spent opening each site to see what was listed.”

Think of AI Mode as Google’s take on ChatGPT Search. But are we ready to fully embrace AI search yet? I’m not so sure.

On Google’s official website for the event, it says, “Discover how we’re furthering our mission to organize the world’s information and make it universally accessible and useful.”

This makes me think we’re going to see some big improvements to Google Search, and maybe even a wider roll-out of AI Mode.

AI Mode launched in beta earlier this year, bringing artificial intelligence to the forefront of the Google Search experience. Whether you love it or hate it, AI looks like it’s here to stay, and Google Gemini is probably going to play a part.

Google I/O 2025 starts in around 9 hours, at 10 am PT / 1 pm ET / 6 pm BST. That gives us loads of time to take you through everything we expect to see at Google’s headline keynote.

There’s going to be loads of AI, maybe some Android, and definitely some surprises, so you won’t want to miss what the tech giant has up its sleeve.

Welcome to TechRadar’s Google I/O 2025 live blog! John-Anthony Disotto, Senior AI Writer, here to take you through the first few hours of today as we build up to one of Google’s biggest events of the year.

So grab a coffee, set this tab up to the side of your monitor, and get ready for a huge day in the world of tech!
