- AI teams still favor Nvidia, but rivals like Google, AMD and Intel are growing their share

- Survey reveals budget limits, power demands, and cloud reliance are shaping AI hardware decisions

- GPU shortages push workloads to cloud while efficiency and testing remain overlooked

AI hardware spending is beginning to evolve as teams weigh performance, financial considerations, and scalability, new research has claimed.

Liquid Web’s latest AI hardware study surveyed 252 trained AI professionals and found that while Nvidia remains comfortably the most-used hardware supplier, its rivals are increasingly gaining traction.

Nearly one third of respondents reported using alternatives such as Google TPUs, AMD GPUs, or Intel chips for at least some part of their workloads.

The pitfalls of skipping due diligence

The sample size is admittedly small, so it does not capture the full scale of global adoption, but the results do show a clear shift in how teams are beginning to think about infrastructure.

A single team can deploy hundreds of GPUs, so even limited adoption of non-Nvidia options can make a big difference to the hardware footprint.

Nvidia is still preferred by over two-thirds (68%) of surveyed teams, and many buyers don’t rigorously compare alternatives before deciding.

About 28% of those surveyed admitted to skipping structured evaluations, and in some cases that lack of testing led to mismatched infrastructure and underpowered setups.

“Our research shows that skipping due diligence leads to delayed or canceled initiatives – a costly mistake in a fast-moving industry,” said Ryan MacDonald, CTO at Liquid Web.

Familiarity and past experience are among the strongest drivers of GPU choice. Forty-three percent of participants cited those factors, compared with 35% who prioritized cost and 37% who relied on performance testing.

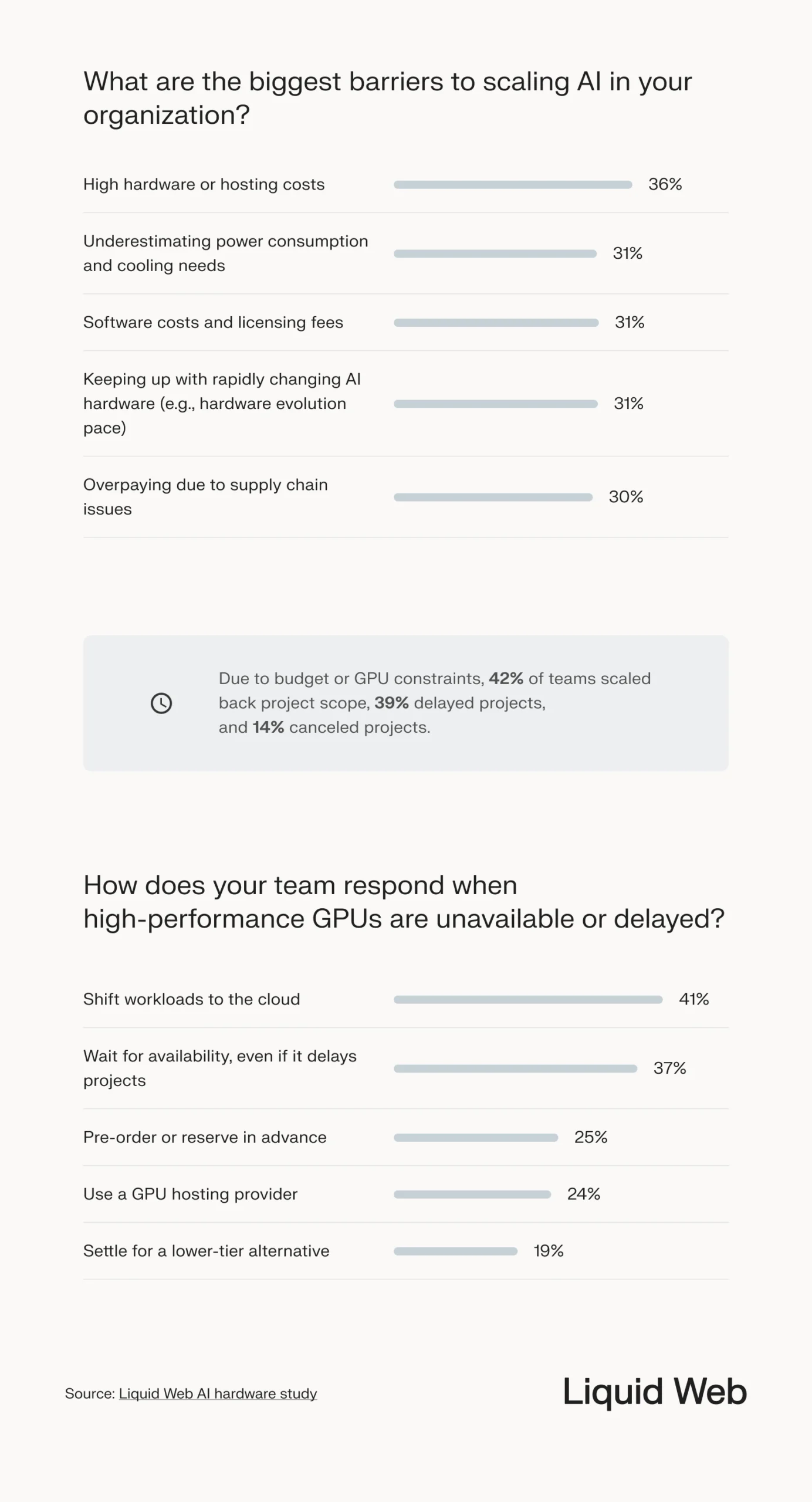

Budget limitations also weigh heavily, with 42% scaling back projects and 14% canceling them entirely because of hardware shortages or costs.

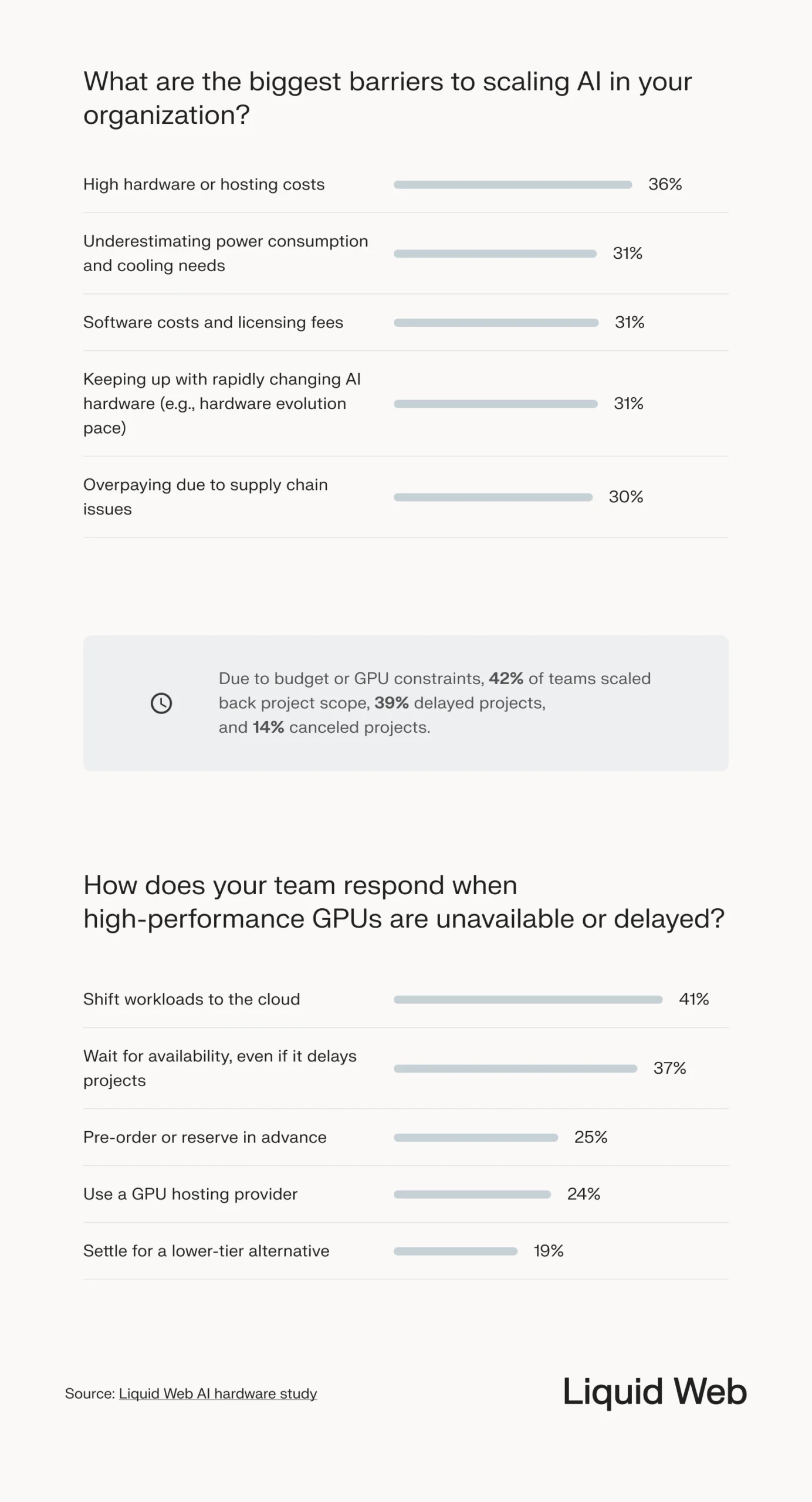

Hybrid and cloud-based solutions are becoming standard. More than half of respondents said they use both on-premises and cloud systems, and many expect to increase cloud spending as the year goes on.

Dedicated GPU hosting is seen by some as a way of avoiding the performance losses that come with shared or fractionalized hardware.

Energy use remains a challenge. While 45% recognized efficiency as important, only 13% actively optimized for it. Many also cited power, cooling, and supply chain setbacks among their regrets.

While Nvidia continues to dominate the market, it’s clear that the competition is closing the gap. Teams are finding that balancing cost, efficiency, and reliability is almost as important as raw performance when building AI infrastructure.
