“LIDAR is a fool’s errand. Anyone relying on LIDAR is doomed. Doomed! Expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices. Like, one appendix is bad, well now you have a whole bunch of them, it’s ridiculous, you’ll see.” Elon Musk, April 2019.

Tesla’s approach to driverless vehicle technology has been controversial at best. A series of increasingly poor marketing and branding choices has made it difficult to ascertain exactly what the company’s actual benchmark for autonomous driving is.

On the one hand, it’s clear that Tesla has one of the world’s most advanced driver-assistance features. Teslas are among the safest vehicles a human could drive. Yet, on the other hand, the company is probably years away from developing a product that doesn’t come with a warning for drivers to keep their hands on the wheel at all times and be prepared to take over at a split-second’s notice.

The competition, however, has already moved past that stage.

Yep, we specialize in zero intervention driving. Check out our steering wheel labels. 🚘🤖🚀 pic.twitter.com/WpYopuS3SW

— Waymo (@Waymo) October 8, 2020

It’s a bit unfair to compare Waymo and Tesla – though, I should point out, Tesla CEO Elon Musk invites these things when he trolls his competition under the guise of discourse – because the companies are trying to accomplish two entirely different things.

Waymo’s laying the groundwork for a fleet of robotaxis that likely won’t have a steering wheel, driver’s seat, or cockpit of any kind. Tesla is trying to create the ultimate experience for car owners. While it may seem that the ultimate goal for each is L4/L5 autonomy, how they’re approaching it couldn’t be more different.

In the AI world, L4 and L5 refer to levels of driving autonomy. It’s a bit complex, but the rough version is that a car that can drive itself without outside assistance, at least under defined conditions, but can still be driven or operated by humans displays level 4 autonomy, and when you take out human intervention altogether you hit level 5.

Waymo relies on cameras and LIDAR-based sensor packages for its robotaxis’ self-driving capabilities whereas, as you can tell from the above Musk quote, Tesla repudiates the usefulness of LIDAR in such endeavors. The Musk-run outfit puts more emphasis on computer vision as the focal point of its so-called “Full Self Driving” system.

In practice this means that Teslas, like any other video-based system, probably have to deal with the inherent latency involved in using state-of-the-art video codecs. It doesn’t matter how advanced and powerful your machine learning algorithms are if they’re waiting around for video signals to get sorted out.
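To get a feel for why latency matters, here is a back-of-the-envelope sketch of how far a car travels while a single frame is still working its way through the video pipeline. The speed and delay below are hypothetical round numbers chosen for illustration, not measurements of any Tesla system, and `distance_during_latency` is a made-up helper name.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled while a frame is delayed by latency_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600   # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s, then distance = v * t


# Hypothetical figures: highway speed and a 100 ms end-to-end video delay.
blind_distance = distance_during_latency(speed_kmh=108, latency_ms=100)
print(f"Distance covered before the frame is usable: {blind_distance:.1f} m")
```

Under those assumed numbers the car moves about three metres before the software even sees the frame, which is why shaving time off the video path is more than a nicety.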

Remember when a man died in a Tesla Model S that failed to distinguish a white truck in a highway intersection from the clouds in the sky? It’s easy to imagine such an error wouldn’t have happened if the AI had higher-resolution real-time video that properly highlighted the important information in the scene that unfolded in the final seconds before impact.

We don’t think about it, but video codecs are a crucial backbone technology today. The CliffsNotes version of what they do is this: videos are too big to stream in their native size so they have to be compressed to send and decompressed to view. Codecs are what handle the compressing and decompressing.
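The compress-to-send, decompress-to-view round trip can be sketched in a few lines. This is only an illustration of the idea: it uses Python’s general-purpose, lossless `zlib` module as a stand-in, whereas real video codecs are lossy and exploit similarity between frames. The frame size and the `make_frame`, `encode`, and `decode` names are assumptions invented for the example.

```python
import zlib

WIDTH, HEIGHT = 64, 48  # hypothetical tiny grayscale frame, one byte per pixel


def make_frame() -> bytes:
    """Build a synthetic frame: flat 'sky' on top, a repeating gradient below."""
    rows = []
    for y in range(HEIGHT):
        if y < HEIGHT // 2:
            rows.append(bytes([200] * WIDTH))                        # featureless sky
        else:
            rows.append(bytes((x * 4) % 256 for x in range(WIDTH)))  # textured road
    return b"".join(rows)


def encode(frame: bytes) -> bytes:
    """Sender side: shrink the frame before it goes over the wire."""
    return zlib.compress(frame, 6)


def decode(packet: bytes) -> bytes:
    """Receiver side: restore the frame so it can be viewed or analysed."""
    return zlib.decompress(packet)


frame = make_frame()
packet = encode(frame)
restored = decode(packet)

print(f"raw: {len(frame)} bytes, sent: {len(packet)} bytes")
print("round trip intact:", restored == frame)
```

Both steps cost processor time, and that cost is paid on every single frame.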

When you think about compressing and decompressing videos for live streaming – whether on YouTube, Twitch gaming, or to an AI processor inside of a car – you have to take into account all the processing power it takes.

Modern codecs are great for what they do. You’ve probably heard of things like MPEG-4 and HEVC/H.265 or even DivX. Well, those are codecs. They let you watch videos that, otherwise, would have to be the size of a thumbnail or sent in tiny bursts (remember when everything was always buffering?). But they aren’t quite good enough for autonomous vehicles yet.

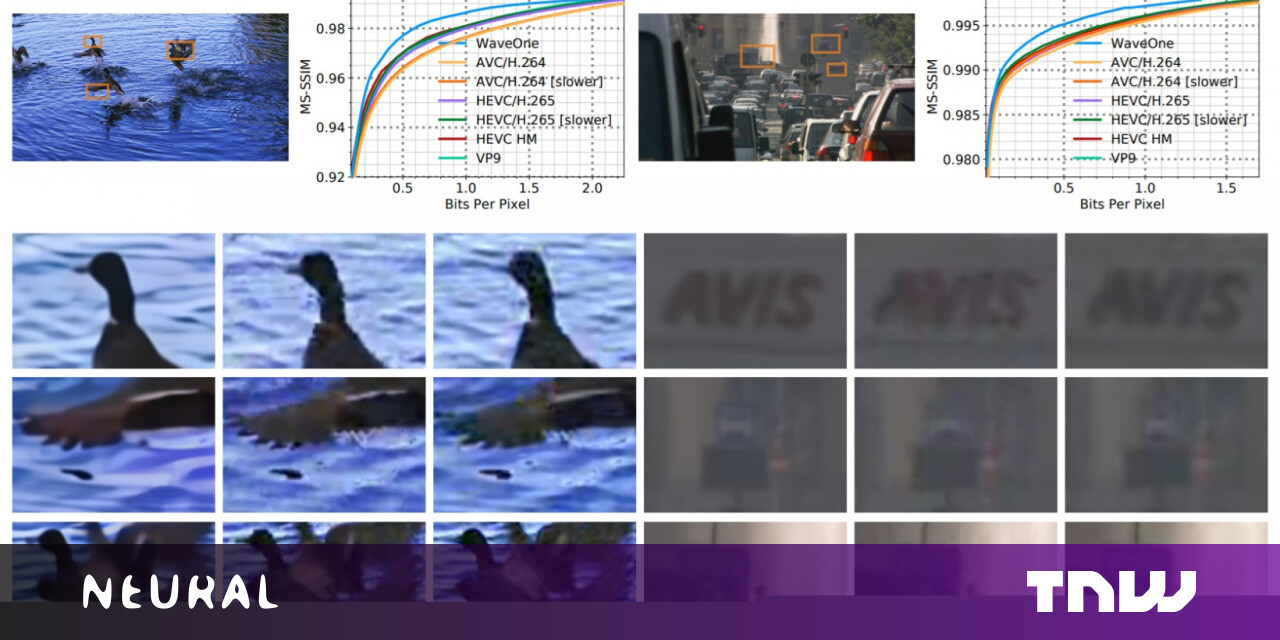

TechCrunch’s Devin Coldewey yesterday published an in-depth piece on AI startup WaveOne, a company that appears to be developing an AI-powered video compression system that could revolutionize the video industry as a whole.

And yes, the article addresses the fact that every five or six months some outfit comes along claiming to have created the One True Codec to Rule Them All only to find out that “standards” don’t just conform to your work because you’ve got a good idea.

It turns out that running some of these software solutions requires specific hardware, an obstacle that prevents most “new and improved” codecs from ever even entering the marketplace. WaveOne’s work could change that. According to Coldewey’s piece:

Just one problem: when you get a new codec, you need new hardware.

But consider this: many new phones ship with a chip designed for running machine learning models, which like codecs can be accelerated, but unlike them the hardware is not bespoke for the model. So why aren’t we using this ML-optimized chip for video? Well, that’s exactly what WaveOne intends to do.

WaveOne has designed its algorithms to run on AI chips. That means they’ll work natively on iPhones, Samsung devices, and most flagship phones in 2021 and beyond. And, of course, they’d work with whatever camera systems companies such as Tesla are using in their self-driving vehicles.

The key part here is, as Coldewey writes, that WaveOne’s system is purported to halve processing times while increasing image quality, something that could be huge for self-driving cars. Coldewey continues:

A self-driving car, sending video between components or to a central server, could save time and improve video quality by focusing on what the autonomous system designates important — vehicles, pedestrians, animals — and not wasting time and bits on a featureless sky, trees in the distance, and so on.
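The idea of spending bits only where they matter can be sketched as follows. This is a toy illustration, not WaveOne’s method: it simply rounds pixel values finely inside a region flagged as important and coarsely everywhere else. The mask, the step sizes, and the `quantize` and `encode_frame` names are all assumptions made up for the example.

```python
def quantize(value: int, step: int) -> int:
    """Snap a 0-255 pixel value to the midpoint of its bucket of width `step`."""
    return min(255, (value // step) * step + step // 2)


def encode_frame(frame, roi_mask, fine_step=4, coarse_step=64):
    """Keep detail where roi_mask is True; flatten everything else."""
    return [
        [quantize(pixel, fine_step if important else coarse_step)
         for pixel, important in zip(row, mask_row)]
        for row, mask_row in zip(frame, roi_mask)
    ]


# Hypothetical 4x8 grayscale frame; columns 2-4 stand in for a detected pedestrian.
frame = [[(x * 37 + y * 11) % 256 for x in range(8)] for y in range(4)]
roi_mask = [[2 <= x <= 4 for x in range(8)] for _ in range(4)]

encoded = encode_frame(frame, roi_mask)

background = {encoded[y][x] for y in range(4) for x in range(8) if not roi_mask[y][x]}
print("distinct background levels:", len(background))
```

With a coarse step of 64 the background collapses to at most four distinct values, which is far cheaper to transmit, while pixels inside the flagged region stay within two levels of their original value.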

This seems pretty close to a eureka moment. WaveOne’s tech doesn’t require consumers to do anything except keep buying new technology devices like they always have. And businesses won’t have to invest in a hardware ecosystem to get the benefits of the new, better video compression services. That means if everything pans out like this research paper seems to indicate it will, WaveOne’s future is bright.

And, potentially, so is the self-driving car industry’s. If an advance like this can keep Tesla’s cars from thinking trucks are clouds, it could represent a major leap for computer vision-based autonomous vehicle technology.

And not a moment too soon, because Musk has less than a month to make good on his guarantee that Tesla would be operating 1,000,000 robotaxis by the end of 2020. So far the company’s managed to produce exactly zero.

Published December 2, 2020 — 19:43 UTC