In digital conversation, Riley is a young person who is trying to come out as genderqueer. When you message Riley, they’ll offer brief replies to open-ended questions, sprinkle ellipses throughout when saying something difficult, and type in lowercase, though they’ll capitalize a word or two for emphasis.

Riley’s humanness is impressive given that they’re a chatbot driven by artificial intelligence to accomplish a unique goal: simulate what it’s like to talk to a young person in crisis so that volunteer counselors can become skilled at interacting with them and practice asking about thoughts of suicide.

The Trevor Project, a nonprofit organization that operates a suicide prevention hotline for lesbian, gay, bisexual, transgender, and queer youth, launched Riley as the first persona from its new Crisis Contact Simulator. The AI tool is the product of a partnership with Google.org’s Fellowship program. That pro-bono initiative pairs Google staff volunteers with nonprofits to develop technical projects.

The Crisis Contact Simulator is designed to make training for volunteer counselors more efficient and accessible. When someone in crisis calls or messages a suicide prevention hotline, they typically reach a volunteer who’s trained to be empathetic, respectful, and welcoming. They’ve also been taught to de-escalate the situation.

Preparing volunteers for such high-stakes conversations is a time-intensive process. It requires screening applicants, teaching them crisis intervention skills, role-playing various scenarios, and monitoring their progress.

The Crisis Contact Simulator makes it possible for volunteers to practice role-playing whenever they choose, a significant advantage when counselors have busy schedules and may be available only early in the morning, late in the evening, or on the weekend. Once the role-play with the simulator is complete, it generates a transcript that Trevor Project training coordinators use to offer the trainee feedback on how they handled the interaction. Previously, role-play could only be done in real time with a live trainer.

“This tool’s really powerful because it can help ensure that trainees understand how to engage with LGBTQ youth as a crisis counselor,” said Kendra Gaunt, data and artificial intelligence product manager at The Trevor Project.

“Youth are reaching out to us for so many different reasons — coming out, breaking up, maybe they’re experiencing homelessness, and even those who have current thoughts or plans of suicide. This tool provides trainees with the opportunity to improve.”

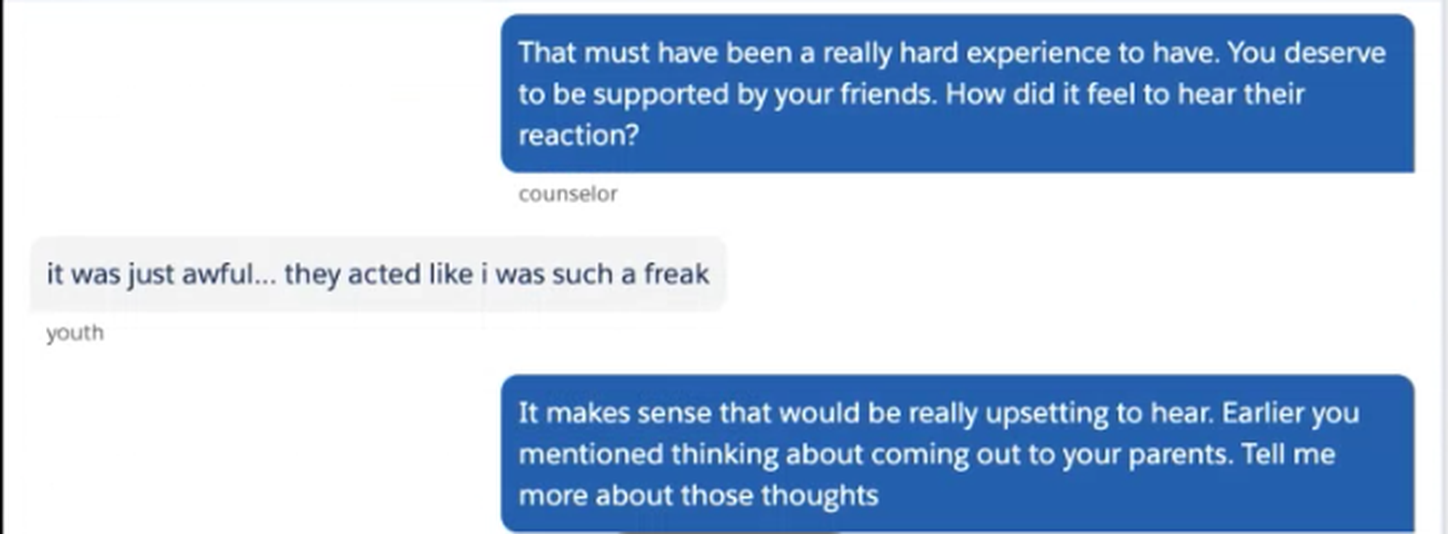

In one demo of the simulator, Riley has indicated they’re feeling depressed and anxious, so the volunteer asks, “[W]hat’s been going on?”

Riley shares that they’ve been wanting to come out to their parents as genderqueer, but recently had a negative experience doing so with their friends.

“That must have been a really hard experience to have,” replies the volunteer. “You deserve to be supported by your friends. How did it feel to hear their reaction?”

“it was just awful… they acted like I was such a freak,” Riley says.

Image: The Trevor Project

Over the course of several minutes, the volunteer affirms Riley’s feelings, asks whether they’re currently feeling suicidal, and talks through what Riley has done in the past to feel less anxious, like painting and going for walks. Since Riley isn’t having suicidal thoughts during the conversation, the volunteer encourages them to paint and go for walks when they’re feeling overwhelmed, and to use TrevorChat if those feelings return.

Gaunt, who helped develop the simulator, said personally going through the volunteer training helped her better understand what it would feel like to engage with LGBTQ youth in crisis. “It was even difficult for me to just keep in the back of my mind, this is not a conversation with a real human,” she said.

Shelby Rowe, program manager for the Suicide Prevention Resource Center, was not involved in The Trevor Project’s work on the simulator but described the tool as a “clever” use of technology. Rowe, who once served as executive director of the Arkansas Crisis Center, knows firsthand the logistical challenges of training volunteer counselors. There may be high turnover due to burnout, as well as difficulties scheduling role-playing practice. That aspect of training is critical because it builds a volunteer’s confidence and competence, said Rowe.

“It’s one thing to learn in theory,” she said. “It’s different when you have to be the one to help.”

Even role-playing can trigger a surge of adrenaline for volunteers, who know the experience isn’t real but still feel nervous about guiding someone through complex emotions or a mental health crisis. High-quality practice helps volunteers feel prepared to have tough conversations, and Rowe said it can improve how they see their contributions to a crisis line.

“If we, as that crisis intervention specialist, are overwhelmed and scared of their situation, we can escalate things,” she said. “They’re in a very dark place oftentimes and we have to have the confidence and courage to sit with them in that dark place so they don’t have to be alone.”

Rowe believes that there should be little downside to incorporating AI into volunteer training provided it doesn’t eventually replace human supervisors.

The Trevor Project simulator learned natural human language from a machine learning model, which provided it with base knowledge about syntax and sentence structure. To build Riley’s persona, The Trevor Project created a backstory and trained the bot on transcripts from past role-plays between volunteer trainees and human instructors. That training significantly informs what Riley says in conversation, and it guards against the possibility that the bot will make abusive or bigoted comments. The Trevor Project is currently developing other personas to reflect the diversity of life experiences, sexual orientations, gender identities, and risk levels.

More than 700 digital crisis counselors currently volunteer for The Trevor Project. The organization is aiming to triple its digital volunteer crisis counselors in 2021 to meet a surge in demand.

If you want to talk to someone or are experiencing suicidal thoughts, the Trevor Lifeline is available 24/7 at 1-866-488-7386. TrevorChat, The Trevor Project’s online instant messaging tool, is also available. To contact TrevorText, text START to 678-678. Crisis Text Line provides free, confidential support 24/7. Text CRISIS to 741741 to be connected to a crisis counselor. Contact the NAMI HelpLine at 1-800-950-NAMI, Monday through Friday from 10:00 a.m. to 8:00 p.m. ET, or email info@nami.org.