Google has announced that it will begin rolling out a new feature to help users “better understand how a particular piece of content was created and modified”.

This comes after the company joined the Coalition for Content Provenance and Authenticity (C2PA) – a group of major brands trying to combat the spread of misleading information online – and helped develop the latest Content Credentials standard. Amazon, Adobe and Microsoft are also committee members.

Google says that, over the coming months, it will use the current Content Credentials standard – in effect, provenance information stored in an image’s metadata – in Search to label images that are AI-generated or edited, providing more transparency for users. This metadata includes information like the origin of the image, as well as when, where and how it was created.

However, many AI developers have declined to adopt the C2PA standard, which gives users the ability to trace the origin of different media types. Among them is Black Forest Labs, the company behind the Flux model that X’s (formerly Twitter) Grok uses for image generation.

This AI flagging will be implemented through Google’s existing About This Image window, which means it will also be available to users through Google Lens and Android’s ‘Circle to Search’ feature. When live, users will be able to click the three dots above an image and select “About this image” to check if it was AI-generated – so the label is not going to be as prominent as we hoped.

Is this enough?

While Google needed to do something about AI images in its Search results, the question remains as to whether a hidden label is enough. If the feature works as stated, users will have to take extra steps before Google confirms whether an image was created using AI. Those who don’t already know about the existence of the About This Image feature may not even realize a new tool is available to them.

Video deepfakes have already caused serious harm: earlier this year, a finance worker was scammed into paying $25 million to a group posing as his CFO. AI-generated images are nearly as problematic. Donald Trump recently posted digitally rendered images of Taylor Swift and her fans falsely endorsing his campaign for President, and Swift found herself the victim of image-based sexual abuse when AI-generated nudes of her went viral.

While it’s easy to complain that Google isn’t doing enough, even Meta isn’t too keen to let the cat out of the bag. The social media giant recently updated its policy to make labels less visible, moving the relevant information to a post’s menu.

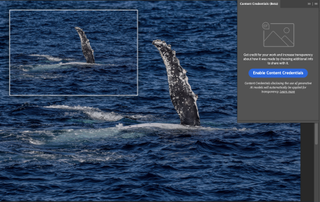

While this upgrade to the ‘About this image’ tool is a positive first step, more aggressive measures will be required to keep users informed and protected. More companies, like camera makers and developers of AI tools, will also need to accept and use the C2PA’s Content Credentials to make this system as effective as it can be, as Google will be dependent on that data. Only a few camera models, such as the Leica M11-P and the Nikon Z9, have built-in Content Credentials features, while Adobe has implemented a beta version in both Photoshop and Lightroom. But again, it’s up to the user to use the features and provide accurate information.

In a study by the University of Waterloo, only 61% of people could tell the difference between AI-generated and real images. If those numbers are accurate, the remaining 39%, more than a third of people, may never think to open a hidden label in the first place, so Google’s labeling system won’t offer them any added transparency. Still, it’s a positive step from Google in the fight against misinformation online, though it would be good if the tech giants made these labels a lot more accessible.
