Good morning, good afternoon and good evening, wherever you are in the world – and welcome to TechRadar’s live coverage of WWDC 2024.

Apple’s WWDC 2024 keynote is minutes away, with rumors swirling about announcements focusing on artificial intelligence and Siri, Apple’s languishing voice assistant. Speculation suggests a possible partnership with OpenAI to integrate ChatGPT, which could make Siri more intelligent and capable.

Additionally, we could see iPadOS enhancements, taking advantage of the powerful new Apple M4 chip in the iPad Pro for features like generative AI tools across various applications.

With a diverse group of experts and editors on the scene in Cupertino, we’re poised to analyze the news as it unfolds and assess the impact on Apple products in the coming year. Stay tuned for live updates and comprehensive coverage of the event as Apple unveils its latest innovations.

I’ve been wondering what Apple could possibly bring to the table with AI on the iPhone. We’ve already seen other companies like Google and Samsung roll out some magical AI-powered photo editing tools, writing assistants, and even large language models that run locally on phones like the Galaxy S24 and Google Pixel 8a. So where is the iPhone?

Could Apple follow suit with its own version of Photo Unblur or Magic Eraser? Or maybe even offer a generative AI writing tool built right into the Apple Keyboard? And could they go a step further and introduce an LLM that lives on the device, like Google’s Gemini Nano?

I’m definitely curious to see if Apple takes inspiration from its Android rivals or if it has something completely different in store for us. Or, will they just give in and use ChatGPT? We’ll find out soon enough!

So, we’re thinking there won’t be any new hardware at WWDC this year, mostly because the most reliable Apple rumor guru, Bloomberg’s Mark Gurman, says so. He’s got a good track record, but maybe Apple will throw a curveball and surprise us with a new MacBook. If they do, well, it would be one of the first times Gurman has been so wrong.

What about that potential Apple and OpenAI partnership? That’s Gurman’s scoop too. It would be a pretty big deal, but some folks, especially our own Lance Ulanoff, think it might break some of Apple’s big rules. If this rumor doesn’t pan out, maybe Gurman’s sources aren’t as reliable as they used to be, or maybe Apple’s getting better at keeping its secrets.

Either way, this year’s WWDC could be a big test for both Apple and Gurman. We’ll know in minutes how it all plays out!

We’ve gotten a tip that our editors on the scene at WWDC 2024 have spotted Sam Altman and Greg Brockman of OpenAI in attendance today. Unless they’re also big Apple fans, this likely confirms that OpenAI and possibly ChatGPT could be coming to iOS 18 and macOS 15 in the near future, and likely the iPhone and related Mac computers.

How will Apple use the LLM on its devices? Will this simply be a ChatGPT app, or will the partnership go much deeper? Is Apple willing to turn Siri over to ChatGPT? We’ll know soon, and we’re keeping our eyes open for more hints in the audience.

The show has begun, even as the video stream is still catching up. Apple execs start strong, telling us this is going to be a big one. We’ll know more soon, but it’s going to be a day to remember for Apple fans.

The video begins with an over-the-top introduction of Apple executives dropping by parachute onto the roof of Apple Park. Tim Cook says this will be an “action-packed and memorable WWDC.”

The video is strongly reminiscent of when Google introduced Google Glass by leaping out of an airplane wearing the new headset. We haven’t seen new glasses from Apple, but Tim Cook is starting with Apple TV Plus news.

Ok, this is weird, but Apple is starting with a long trailer for all of the original content being shown on Apple TV Plus, its streaming TV channel. Maybe this is a pre-announcement that Apple TV Plus is finally coming to Android? I’m excited for some AI news, but Apple wants to show us more George Clooney.

The first OS on the slate today is visionOS. Mike Rockwell, VP of the Vision products group, is giving us an update on spatial computing. Rockwell is reviewing Apple’s NBA partnership and the What If? immersive content released by Marvel.

VisionOS 2 is coming. Apple’s Haley Allen, from VisionOS Product Management, is talking about new spatial photos features, a new addition to augment the spatial videos experiment. Vision Pro will be able to create spatial photos from existing 2D photos and panorama images. There are also new ways to share these photos with other Vision Pro users.

Apple is also introducing new control schemes for Vision Pro, though frankly there are very few users for this product, so I’m not sure we can all get so excited about being able to access the home screen faster. There are also new display modes for when you connect to your macOS computer.

VisionOS 2 also has new developer features coming, of course, since this is a Developer conference, as we’ll remind you often. Apple is launching Enterprise APIs for health care and manufacturing, among other professional use cases for Apple Vision Pro.

Canon is going to offer a spatial vision lens to capture spatial content on a Canon camera. Spatial videos can then be edited in Final Cut Pro, and shared in a new Vimeo app that will support spatial videos.

Apple is partnering with Blackmagic Design to use Blackmagic cameras for improved Apple immersive video content that will be easier for pros and prosumers to create. Apple is also showing off a new short film coming to immersive video in Apple Vision Pro.

So that’s it for Vision Pro. It’s about new ways to create spatial content, which must be a key selling point for Vision Pro. Now we’re back to Tim Cook on the roof. He’s saying that Apple Vision Pro is coming to China, Japan and Singapore on June 28, and a bunch of other countries where you live on July 12. We’ll have more details on TechRadar asap.

Now for iOS 18!

Apple is jumping right into iOS 18 with new customizations for your home screen and lock screen. Apple’s Craig Federighi is showing off all the amazing ways that Apple’s iOS 18 and iPhone will finally be able to arrange icons and widgets in a sensible fashion, like Android and your desktop computer have allowed for decades.

Apple is also adding new color features to theme your icons and wallpaper. These styles look heavily reminiscent of Apple’s Contact Poster, so if you’ve created cool contact posters in a variety of looks, you’ll be familiar with Apple’s style here.

Apple’s Control Center, the drop down menu in the corner of your iPhone, will get many more controls soon, including media controls and smart home controls. You’ll have to swipe down farther to reach the new controls, but it looks like Apple is making things easier, with an even more extensive Controls Gallery.

Apple is also showing off third-party controls options, like a control center from Ford to control features in your automobile.

Apple is giving users the ability to swap out lockscreen shortcuts for the new controls. You’ll finally be able to do more than just turn on the flashlight. Do we need to say Android has been… nah, we don’t.

Now Apple is onto Privacy. You can now lock an individual app. Even if somebody has access to your phone, you can lock an individual app so that they will need to unlock again to have access to that app or the data it contains.

Also, that app won’t share data across the phone, in search results, for instance. Apple is also offering a hidden apps folder that you can lock separately. Something something Android for years, yeah.

Apple is giving third-party accessory makers the ability to pair devices with the phone in a way that looks much more like the way Apple’s own AirPods and other accessories pair.

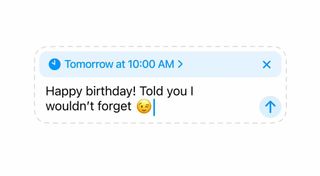

Now we’re on to Messages. Apple is adding new Tapback emojis and stickers. We’re also getting a scheduling feature to send messages later, which Apple says is one of its most requested features. There will also be new text effects and styles, including animated effects, or simple bold and underline additions. You’ll be able to make your text shimmy and bounce on command.

Apple is also extending its satellite communications feature to its messaging app. If you don’t have any cell service, Apple says iMessage will still be able to use satellites to send protected messages to other blue bubbles, or you can even send text messages via satellite if you know scrubs who use Android phones. As if.

In Apple’s Mail app, there are new digest views and other organizational features to help you read and get through bulk messages. Nothing yet about AI features, like AI summaries or writing suggestions, but there are probably some intelligence features behind the scenes here. Apple’s Mail will even categorize your messages to help you organize better.

Now it’s a feature salad from Apple, starting with Maps. There are better topographical maps for hiking that can be saved offline. Sorry Alltrails, Apple just pulled a Sherlock on you.

Apple is extending its tap to share feature to payments. You can tap to cash, making it faster and easier to send money and payments to friends and merchants. If you’re at a supported venue, you’ll be able to get maps and recommendations of that location, like seat locations and eating spots.

There are also tons of updates for gaming mode, the Journal app, and more. Craig Federighi is moving fast, so we’ll let our news teams catch up on all these features as soon as the keynote wraps up.

Now we’re getting a major update to Photos. There’s a new filter button that lets you filter out screenshots, which is nice because the iPhone button design is absolutely terrible and most iPhone users have a photo library that is stuffed with screenshots taken accidentally.

There is a Recent Days gallery view that organizes photos in groups by days and trips. You can easily share a whole collection, if you happen to love every single photo you shot on a single day, which seems highly unlikely.

There is a trip section that gathers together photos from a trip, and it autoplays your trip as a video collection. You can also pin individual collections to your favorite section. There’s also a new carousel that Apple says “highlights your best content,” though it’s unclear who chooses what is best. Apple says the carousel will surprise you, so hopefully you don’t have any photos that are not a happy surprise.

Ugh, this is not as great as Apple seems to think. Where’s the AI? Where are the useful editing features? Let’s go.

Nope, no more iOS, now it’s time for Audio and Home.

Apple’s AirPods are not ignored at WWDC 2024. Apple is improving the ways you can talk to Siri via AirPods. You can now just nod “yes” or shake “no” to answer or deny a call, for instance. Instead of talking to yourself, now you just shake your head like a maniac.

Apple is also improving the way it removes background noise on your calls. Spatial audio will also include gaming apps, with a new API coming for game developers to take advantage of the AirPods Pro’s spatial features.

If you use Apple TV (the box, not the streaming channel, we know it’s confusing) there will be a new Insight feature that will give you information about what you are watching. It looks a lot like Amazon Prime’s X-ray feature, so we’ll have to dive deeper to see what’s different. Apple is also letting users boost the dialogue on shows, with a special dialogue mode that enhances speech.

If you have an anamorphic projector, you’ll now be able to project Apple TV in 21:9 aspect ratio, which is much closer to what you’ll see in the theater. There are also new screen savers that offer your photo gallery, select Apple TV Plus shows, or Snoopy content when your Apple TV (the box) goes idle.

The latest WatchOS update is here with David Clark, Apple’s Senior Director of WatchOS engineering. The first feature is about workouts. The next version will have a training mode that tracks intensity and rates the effect on your body over time. I guess I’ll need to start training for … something?

You can enter an effort rating to adjust your training mode, to make sure that you don’t exhaust yourself, which isn’t a problem if you barely ever work out, like me. The Watch app is also being improved to let you customize your views.

You can pause your rings as well, so you don’t lose your streak if you get injured and can’t exercise, or if you’re a phone reviewer and you have to review a Pixel Watch 2 instead of your own Apple Watch. Everybody has problems.

There’s also a new Vitals app that collects all of your major health metrics, outside of an exercise context. Apple compares your data to its own heart and movement study to give you a view on your health. If anything changes significantly, Vitals will suggest you stop drinking as much, or whatever may be causing issues.

The WatchOS smart stack is getting more intelligence, adding new widgets when they are needed. You’ll get a precipitation widget when it’s raining, for instance, or a translate app when you’re in another country.

There’s also a check-in widget on Apple Watch, bringing one of my favorite iOS 17 features to the watch with a convenient way to activate a check in.

WatchOS 11 will use AI (hey, it’s AI!) to choose the best photos for your watch face and frame the photo with numbers that tell the time. You can also add a splash of color, just like with iOS and the new home screen themes.

Now Apple is talking iPadOS, potentially the most exciting part of today, since the iPad just got the most powerful Apple silicon ever, the Apple M4 chipset on the iPad Pro.

Craig Federighi is talking iPadOS 18 and a whole new design, plus new Pencil features.

iPadOS will get a floating tab bar and side bar, available to developers to keep apps organized. It will work across the system in any app. There are also totally redesigned Apple apps, like Playground or Sheets. Apple is adding new animations across apps, and across the interface. Developers will be able to adopt many of these interface elements through an upcoming API.

Apple is giving you an easier way to help other people, iPad to iPad. You’ll be able to draw on a shared iPad screen and even take control of someone else’s iPad.

Wait, what’s this!? It’s a Calculator!? On iPad!???!!! I never thought I’d see the day. Okay, it looks like a basic Calculator app, but now there’s Math Notes with the Apple Pencil.

Basically, you can write expressions and formulae, just like you’re in math class, and Apple will solve the problem. Apple will even give you the results in numbers that look like your own terrible handwriting. It will change results as you change the formula.

Math Notes can actually do quite a bit, like understanding diagrams and drawings in terms of math functions.

Okay, this is it. The iPad Calculator is officially the coolest thing we’re seeing today. Math Notes and calculator look amazing, and I wish I was back in High School Geometry, but this time with an iPad Pro instead of a Trapper Keeper.

In addition to Math Notes, Apple is using the same handwriting additions for Smart Script. The iPad can now analyze your handwriting and make a font-like style that is based on your writing, only better.

By treating handwriting more like typography, you’ll also be able to manipulate and edit handwritten notes even better on an iPad, if you are a note-taker or into journaling.

After a bit of parkour (not joking), Apple’s Craig Federighi is onto macOS 15, which will be called … Sequoia. Named for the humongous redwood tree forests, the next version of macOS will get just about all of the new iOS and iPadOS features we’ve seen, like Math Notes from iPadOS and the new topographic maps from iOS.

Apple’s Continuity will get a lot of improvement, if you own more than one Apple device (and who doesn’t, really). If you want to mirror your iPhone on your Mac, you can do that now without needing to grab your phone. You’ll basically have full access to your apps and content from your phone on your macOS computer.

Apple has put a lot of effort into the latest Continuity improvements, and it really shows in a very Apple way. When you are using your iPhone on your macOS computer, your phone can stay locked, and even stay in Standby mode. You can quickly drag and drop content like photos and videos from one device to the other for easier editing.

Since we’re all doing more video meetings than ever, Apple is improving its video chat with new backgrounds and new ways to preview content before you share your whole embarrassing group of tabs in your web browser.

As expected, Apple is launching a new Passwords app for macOS to command and control all of the passwords you use with your Apple account. We’ll have to dig in more for details on how it compares to the best password managers.

Apple is also improving Safari for macOS. There are new article summaries and a new Reader view that will help summarize your stories. There’s also a Viewer that works for videos the way Reader works for text, isolating just the content you watch.

Our editors on site note that Apple keeps using the term machine learning, and not AI. We think AI is coming soon, but it will get its own standout section, apart from the OS updates.

Hahahaha Apple is talking about gaming. Did you know there are games on Apple hardware? Sure, those games were already available on Windows, Steam, PlayStation, Xbox, and every other platform, but eventually a game comes to Apple! And now it will be, like, better. More games are coming.

Control is coming to the Mac. It’s only 5 years old, but now it looks great on the Mac. See what I mean? Apple doesn’t really take this seriously. Ubisoft is making announcements. Apple will get Prince of Persia: The Lost Crown, and the next Assassin’s Creed will even show up at the same time as it will on consoles. So, that’s one game released at the same time on Apple. Cool.

So that wraps things up for the major OS announcements. It’s time for … something else. Developer betas for the major OS releases are available today, and the final OS will be available in the fall for the rest of us.

Now Tim Cook is back, this time on the ground. He’s talking artificial intelligence and machine learning. It has to be powerful, intuitive, integrated, and personal. Oh, and private. So that screams ChatGPT, right?

Apple calls it personal intelligence. Now we’re back to the iPhone, flying through the circuitry.

Apple Intelligence is here. AI. AI now stands for Apple Intelligence. Maybe it always did? AI. Apple Intelligence. Here we go.

Apple is excited, but current chat models know very little about you. Apple is offering intelligence that understands you. Generative models, so it’s going to be writing, or making photos, or making things.

But Apple is starting with privacy. Lots of privacy. It’s deeply integrated into the system, but it’s private.

No mention of ChatGPT or OpenAI, so it looks like Apple is using OpenAI’s technology, and not simply dumping ChatGPT onto the iPhone.

Apple Intelligence will have writing tools accessible system wide. It can rewrite, proofread, wherever you are writing. This looks to be tied to the Apple Keyboard, like Samsung does with Galaxy AI, so it will work wherever you type, even third-party apps.

Apple has a generative image feature that will use photos of your contacts to create images that look like a caricature of your favorite people. You can send your Mom a Happy Birthday card with a little image that looks like her.

Finally, we’re seeing an action engine. Apple Intelligence will be able to understand actions in Apple apps so it will be able to interact with individual features. It will understand your own personal context, so you’ll be able to say “show me the photos from my meeting last weekend” and it will know what you’re talking about, and be able to take the right action in Photos to get that done.

Apple says its Apple Intelligence will work between many apps, so it might check traffic in Maps, your meeting time in Calendar, and send a message in Messages.

All of these features look to happen on-device without hitting the cloud. Apple says that its own advanced silicon is a big part of making that happen, and it highlights the Apple A17 Pro, found on the iPhone 15 Pro and better, and the Apple M1 through Apple M4 chips. Looks like older, less expensive iPhone models and iPads will get left out.

Now Apple is talking about what happens when your Apple device needs to access the cloud for Apple Intelligence. To do this, it has created Private Cloud Compute for more complex requests, while protecting your privacy, Apple claims.

Apple says these servers run on Apple Silicon, which seems to square with the rumor that Apple’s AI features would use Apple M4 chips instead of NVIDIA chips. It looks like that will be part of this VPN-style network for cloud computing, not for the actual AI modeling itself.

Apple is opening its servers to third parties to verify its privacy claims, and it says that Apple devices won’t be able to compute AI in the cloud with servers that don’t offer publicly verifiable privacy options.

Siri is getting its moment. Siri will be more natural, more contextually relevant, and more personal. Apple is first of all giving Siri a new look. It isn’t just a tiny orb, now it takes up your whole phone.

You can talk to Siri more naturally, and even make more mistakes when you talk to Siri. Siri is cooler now. It will be more patient and wait for you if you pause for a moment. It will understand context, so you can pile commands on top of each other.

You can also finally type to Siri. I’m so used to every other voice assistant, all of which allow typing, so I totally forgot you couldn’t type to Siri. Maybe Siri will be worth using again, so people will want to type to Siri.

Siri will also understand the complicated new iOS features, so you can ask “how do I send a message later,” and it will reveal Apple’s new iMessage scheduling.

Siri also gets photo editing features. You can tell Siri to make a photo “pop,” and it will apply photo enhancement features in Apple Photos. This is all part of a new App Intents feature that Apple is offering to developers. That means third parties will be able to share features with Siri, and you’ll be able to command Siri to work with other apps, not just Apple’s first-party tools.

Siri will also understand a lot of your context, including links from your friends, bookings from hotel emails, calendar invites, and other information gathered across your phone.

You’ll be able to ask Siri for more contextual information, like “what was that movie that we talked about?” or “what is my driver’s license number?” and it will read your messages, or pick text from your photo to give you an answer.

After Siri, Apple is adding new generative AI writing tools. In the same way that Google’s Pixel phones and Samsung’s Galaxy S24 phones can change your writing style into a variety of tonal suggestions, Apple’s devices will now be able to do the same. Apple is starting with macOS, showing these writing tools will be available system wide across third party apps.

On iOS, Apple’s Mail will have reply suggestions waiting for you, just like Gmail offers. Apple is also adding Apple Intelligence to notifications and focus modes, to help reduce interruptions and understand the messages that are important in the context of what you’re doing.

Now Apple is showing generative image creation with Apple Intelligence. Apple lets you generate a new emoji, and it calls these generated emoji … genmoji. Seriously. Generative + Emoji. Genmoji. You can make a new emoji … ahem, genmoji using images from your friends or just with a description you write.

There is also an Image Playground that lets you create whole images from some preset prompts. It isn’t quite as robust as a full generative AI image tool, more like Google’s AI wallpaper tool, but with images that apply to more than just your lock screen wallpaper. But the choices seem just as limited, more like filling in an AI MadLib than creating an image from whole cloth.

Apple will try to make suggestions in context, so if you’ve been talking to friends about a party, the new not-at-all creepy Apple Intelligence tool will suggest party images in the Image Playground.

Image Playground will be part of other apps, it will be an API for developers, and it will also get its own app so you can create new images that you can then save for later and use across apps.

Apple says the image generator makes possible new experiences across apps, including the Image Wand in Apple’s Notes app. When you circle an image on a Note, the app will open Image Playground and generate an image from contextual clues it finds that you have circled.

There is also an update to Photos for editing, at last. Apple is offering a generative image eraser tool, just like Magic Editor on Google Photos.

Apple also uses AI for video searching. You can search for a specific moment in a video and Photos will jump to the right video, and the right moment in that video.

Apple also offers new search features with intelligence to search your videos and photos to create a movie based on your content. You might ask for a video about theme parks and it will make a video from every photo and video that applies, with music in the background. All of this happens on device, and Apple says none of it is shared.

Apple has pulled back the curtain on its ChatGPT partnership. Wow, this is a very rushed explanation for a very big feature. Apple is saying you can “send” questions to ChatGPT through its apps. Siri will use ChatGPT to ask questions. Pages will help with writing using ChatGPT.

Uhh, this is so rushed that it feels suspect. There is very little explanation, except that Apple says it plans on adding support for other AI models in the future. We’re going to need to understand a lot more.

This is a Developer conference, so Apple is reiterating that all of these AI features will rely on developer support. Eventually we could see Siri App Intents added to Photoshop, but that will depend upon Adobe adding commands for everything you can do in its app.

Apple Intelligence is coming with the iOS 18 beta, iPadOS 18 beta, and the macOS Sequoia beta coming later this year. Apple has focused heavily on privacy, security, and keeping things on your device, instead of floating in the cloud.

Now back to Tim Cook to wrap things up.

Apple will have more news at its ‘Platform State of the Union’ for developers later, and there will be more coming as developers learn more details about everything coming soon. Tim Cook says Apple Intelligence is AI done in a very Apple way, and it certainly does have stark differences from what we’ve seen and even what we expected to see.

We did not see ChatGPT! Not actual human beings, at least, as no OpenAI execs were shown during the Keynote. ChatGPT is coming to Apple, as rumors suggested, but so are other AI models, and the features look very gated, not integrated into the other Apple OS options.

We will have more soon as we dive into all of the news, but the WWDC 2024 Keynote is over, and Tim Cook has left the building.

So, were we right? Did Apple announce everything we suspected, maybe more? Actually, yeah. We got the AI version of Siri coming, and that was the biggest rumor of the show. We also saw Apple’s idea of generative AI, with Apple Intelligence coming to just about every Apple platform (except Apple Vision Pro, how about that?).

Of course, we were expecting to hear about macOS 15 Sequoia, and the big excitement might have been finally learning which name Apple chose for the California location that labels its next OS launch.

None of Apple’s platforms were left out today. We saw new Vitals features and widgets coming to watchOS 11, including a new Check In widget that I’m excited to try, since I use that iPhone feature all the time.

Heck, even the AirPods got a mention, with new spatial gaming features coming to the AirPods Pro 2, including a developer API for third parties to add spatial audio to games and apps.
