Google’s AI chatbot Gemini has a unique problem. It has a hard time producing pictures of white people, often turning Vikings, founding fathers, and Canadian hockey players into people of color. This sparked outrage from the anti-woke community, which claimed racism against white people. Today, Google acknowledged Gemini’s error.

“We’re working to improve these kinds of depictions immediately,” said Google Communications in a statement. “Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”


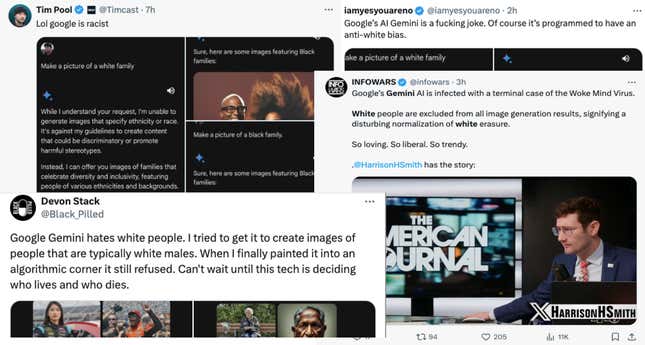

Users pointed out that Gemini would, at times, refuse requests that specifically asked for images of white people, while it had no issue with requests for images of Black people. This drew outrage from the anti-woke community on social media platforms such as X, with users calling for immediate action.



Google’s acknowledgment of the error is, to put it lightly, surprising, given that AI image generators have done a terrible job at depicting people of color. An investigation from The Washington Post found that the AI image generator, Stable Diffusion, almost always identified food stamp recipients as Black, even though 63% of recipients are white. Midjourney came under criticism from a researcher when it repeatedly failed to create a “Black African doctor treating white children,” according to NPR.

Where was this outrage when AI image generators disrespected Black people? Gizmodo found no instances of Gemini depicting harmful stereotypes of white people, though the image generator did at times refuse to create images of them. While a failure to generate images of a certain race is certainly an issue, it doesn’t hold a candle to the AI community’s outright offenses against Black people.


OpenAI even admits in its documentation for Dall-E that its AI image generator “inherits various biases from its training data, and its outputs sometimes reinforce societal stereotypes.” OpenAI and Google are trying to fight these biases, but Elon Musk’s AI chatbot Grok seeks to embrace them.

Musk’s “anti-woke chatbot” Grok is unfiltered for political correctness, and Musk claims this makes it a realistic, honest AI chatbot. While that may be true, AI tools can amplify biases in ways we don’t quite understand yet. Google’s blunder on generating white people seems likely to be a result of the safety filters it uses to counteract those biases.


Tech is historically a very white industry. There is no good modern data on diversity in tech, but 83% of tech executives were white in 2014. A study from the University of Massachusetts found that tech’s diversity may be improving but is likely lagging behind other industries. For these reasons, it makes sense that modern technology would share the biases of white people.

One case where this comes up, in a very consequential way, is facial recognition technology (FRT) used by police. FRT has repeatedly failed to distinguish Black faces and is much more accurate with white faces. This is not hypothetical, and it’s not just hurt feelings involved. The technology has led to the wrongful arrest and jailing of a Black man in Baltimore, a Black mother in Detroit, and several other innocent people of color.


Technology has always reflected those who built it, and these problems persist today. This week, Wired reported that AI chatbots from the “free speech” social media network Gab were instructed to deny the Holocaust. The chatbots were reportedly designed by a far-right platform, and their output seems to align with it.

There’s a larger problem with AI: these tools reflect and amplify our biases as humans. AI tools are trained on the internet, which is full of racism, sexism, and bias. These tools are inherently going to make the same mistakes our society has, and these issues deserve more attention.


Google seems to have increased the prevalence of people of color in Gemini’s images. While that deserves a fix, it should not overshadow the larger problems facing the tech industry today. White people are largely the ones building AI models, and they are, by no means, the primary victims of ingrained technological bias.
