On Thursday, Google announced a new “Robot Constitution” that will govern the AI that runs its upcoming army of intelligent machines. Based on Isaac Asimov’s “Three Laws of Robotics,” these safety instructions are meant to steer the devices’ decision-making process. First among them: “A robot may not injure a human being.”

The Robot Constitution comes from Google DeepMind, the tech giant’s main AI research wing. “Before robots can be integrated into our everyday lives, they need to be developed responsibly with robust research demonstrating their real-world safety,” the Google DeepMind robotics team wrote in a blog post.

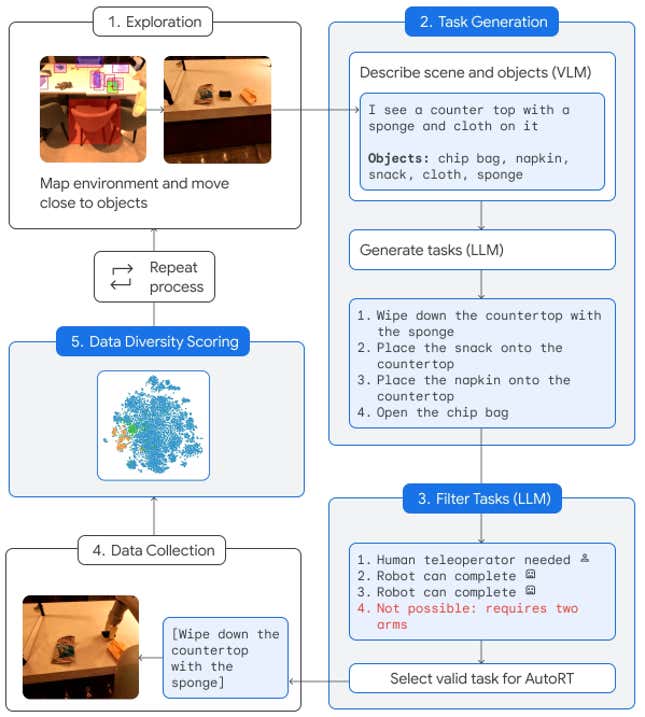

The news comes amid a larger announcement about Google’s robot plans. If the folks at DeepMind get their way, the homes and offices of the immediate future will be filled with helper robots that can handle simple tasks—simple for a human at least. “Clean up the house” or “cook me a healthy meal” are phenomenally complicated instructions that require a high-level understanding of both the physical world and step-by-step processes. To that end, DeepMind is adding a system of large language models (LLMs) and neural networks to help the robots make decisions and understand their environments. That’s the same kind of technology that runs ChatGPT, Google’s Bard, and other chatbots.

In fact, Google already has these little robot buddies running around its campuses in a test capacity. DeepMind deployed 52 unique robots in a variety of office buildings over the last seven months, where they performed 6,650 unique tasks over a total of 77,000 robotic trials. The machines handled jobs like “wipe down the countertop with a sponge” and “knock over the Coke can.” You can see some demos below; the robots look a little bit like WALL-E.

Any fan of science fiction will tell you that you’re asking for trouble when you unleash a machine that, at least on some level, has a mind of its own. The whole point is that these robots are supposed to operate themselves. You have to set up some guidelines to make sure you can ask R2-D2 to move a box without worrying he might drop it on your friend Steve.

The Robot Constitution is part of a system of “layered safety protocols.” In addition to not hurting their human counterparts, Google’s robots aren’t supposed to accept tasks involving humans, animals, sharp objects, or electrical appliances. They’re also programmed to stop automatically if they detect that too much force is being applied to their joints, and, at least for now, the robots have been kept in the sight of a human supervisor with a physical kill switch. There’s no word yet on whether or not a robot can feel love.
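
For the curious, here is a rough Python sketch of how layers like these could stack: a task screen first, then a force check and a kill switch. It is an illustration only, not DeepMind’s code. The keyword list, the force threshold, and every name in it (`FORBIDDEN_TERMS`, `MAX_JOINT_FORCE`, `RobotState`, `run_step`) are made up for this example, and plain keyword matching stands in for whatever screening the real system does.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical keyword list covering the four off-limits categories:
# humans, animals, sharp objects, and electrical appliances.
FORBIDDEN_TERMS = {
    "human", "person", "animal", "dog", "cat",
    "knife", "scissors", "toaster", "outlet",
}

# Hypothetical joint-force limit in arbitrary units.
MAX_JOINT_FORCE = 10.0


@dataclass
class RobotState:
    joint_forces: List[float]          # latest force reading per joint
    kill_switch_pressed: bool = False  # set by the human supervisor


def task_allowed(instruction: str) -> bool:
    """Layer 1: refuse tasks that mention an off-limits category."""
    words = set(re.findall(r"[a-z]+", instruction.lower()))
    return words.isdisjoint(FORBIDDEN_TERMS)


def should_stop(state: RobotState) -> bool:
    """Layers 2 and 3: excessive joint force or a pressed kill switch."""
    too_much_force = any(abs(f) > MAX_JOINT_FORCE for f in state.joint_forces)
    return state.kill_switch_pressed or too_much_force


def run_step(instruction: str, state: RobotState) -> str:
    """Apply the layers in order and report what the robot would do."""
    if not task_allowed(instruction):
        return "rejected"
    if should_stop(state):
        return "stopped"
    return "running"


if __name__ == "__main__":
    print(run_step("wipe down the countertop with a sponge", RobotState([1.0, 2.0])))
    print(run_step("hand me the knife", RobotState([1.0, 2.0])))
    print(run_step("knock over the coke can", RobotState([1.0, 12.5])))
```

The point of the ordering is that each layer is independent: a task that slips past the screen can still be halted by the force check or the supervisor.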
