Artificial intelligence is driving unprecedented demand in data centers, as the need for processing vast amounts of data continues to surge.

As tech giants race to expand their infrastructure to accommodate AI workloads, they face the growing challenge of powering these operations sustainably and affordably – a challenge that has even led companies like Oracle and Microsoft to explore nuclear energy as a potential solution.

Another critical issue is managing the heat generated by powerful AI hardware. Liquid cooling has emerged as a promising way to maintain optimal system performance while handling rising energy demands. In October 2024 alone, several tech firms announced liquid-cooled solutions, highlighting a clear industry shift in that direction.

Liquid-cooled SuperClusters

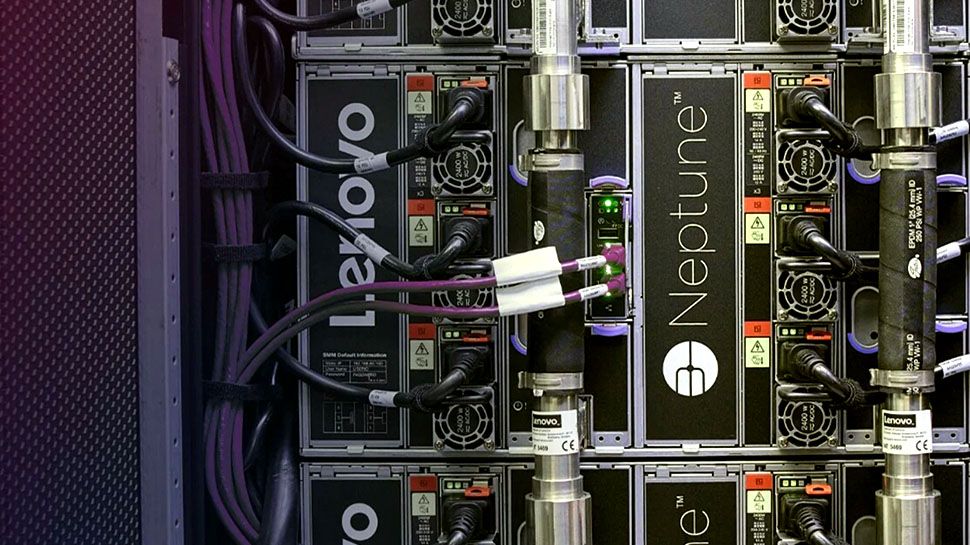

At its recent Tech World event, Lenovo showcased its next-gen Neptune liquid cooling solution for servers.

The sixth generation of Neptune, which uses open-loop, direct warm-water cooling, is now being deployed across the company’s partner ecosystem, enabling organizations to build and run accelerated computing for generative AI while reducing data center power consumption by up to 40%, the company says.

At OCP Global Summit 2024, Giga Computing, a subsidiary of Gigabyte, presented a direct liquid cooling (DLC) server designed for Nvidia HGX H200 systems. In addition to the DLC server, Giga also revealed the G593-SD1, which features a dedicated air cooling chamber for the Nvidia H200 Tensor Core GPU, aimed at those data centers not yet ready to fully embrace liquid cooling.

Dell’s new Integrated Rack 7000 (IR7000) is a scalable system designed specifically with liquid cooling in mind. It’s capable of managing future deployments of up to 480kW, while capturing nearly 100% of the generated heat.

“Today’s data centers can’t keep up with the demands of AI, requiring high-density compute and liquid cooling innovations with modular, flexible and efficient designs,” said Arthur Lewis, president of Dell’s Infrastructure Solutions Group. “These new systems deliver the performance needed for organizations to remain competitive in the fast-evolving AI landscape.”

Supermicro has also revealed liquid-cooled SuperClusters designed for AI workloads, powered by the Nvidia Blackwell platform. Supermicro’s liquid-cooling solutions, supported by the Nvidia GB200 NVL72 platform for exascale computing, have begun sampling to select customers, with full-scale production expected in late Q4.

“We’re driving the future of sustainable AI computing, and our liquid-cooled AI solutions are rapidly being adopted by some of the most ambitious AI infrastructure projects in the world with over 2,000 liquid-cooled racks shipped since June 2024,” said Charles Liang, president and CEO of Supermicro.

The liquid-cooled SuperClusters feature advanced in-rack or in-row coolant distribution units (CDUs) and custom cold plates for housing two Nvidia GB200 Grace Blackwell Superchips in a 1U form factor.

It seems clear that liquid cooling is going to be at the heart of data center operations as workloads continue to grow. This technology will be critical for managing the heat and energy demands of the next generation of AI computing, and I think we’re only just starting to see the potential impact it will have on efficiency, scalability, and sustainability in the years to come.
