Powerful software and talented visual effects artists are usually needed to convincingly pull off the effect where an actor filmed against a green screen is inserted into another shot. But researchers at Netflix say they have come up with a much easier, and potentially more accurate, green screen technique that takes advantage of how digital cameras work—with a dash of AI.

Replacing the background of footage where actors have been filmed against a brightly colored backdrop is a technique that Hollywood, and even your local news, has used for decades, because it's much faster than having an artist painstakingly rotoscope an actor out of a clip by hand. Bright green or bright blue backdrops are typically used because those colors don't naturally appear in human skin tones, so when the software algorithms do their thing, the actors don't end up getting automatically removed along with the background.

The typical green screen technique does come with some challenges, however. The on-screen talent can't wear makeup or clothing that matches the color of the backdrop, and oftentimes additional cleanup is needed in post-production to remove a brightly colored glow around the talent. Extracting fine details, like wispy hair, from green screen footage can also be a challenge, as can dealing with transparent objects like windows, which shouldn't disappear entirely even though the green backdrop shows through them.

Spending more time in post-production to fix these types of issues also increases the cost of completing a shot, so it's easy to see why a company like Netflix would want to make the green screen process easier and faster. But the alternative technique the company's researchers have come up with almost seems counterintuitive when you see what the captured green screen footage actually looks like.

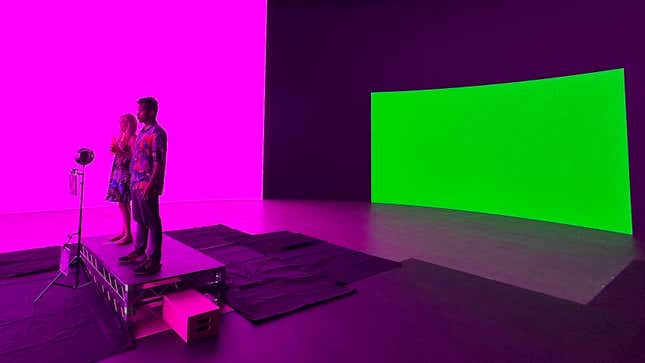

A bright green backdrop is still employed, but the foreground lighting (which in testing came from a giant wraparound LED screen) bathes the actors, props, and set pieces in a mix of only blue and red light so they all appear to have a heavy magenta tint. To the human eye, the footage looks all wrong, but to a digital camera whose sensor’s pixels record the level of red, green, and blue light detected in a scene, the bizarre lighting ends up producing a green channel where actors and objects in the foreground are perfectly silhouetted against a bright, even background.

The green channel of the captured digital footage can easily be used to create what's known as an alpha channel, a black-and-white image that defines which parts of each frame should stay and which should be removed and replaced with other footage. The new technique even works with transparent objects, like the glass bottle held by one of the performers in the test footage, ensuring the replacement background shows through the bottle as it would in real life.
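To make the idea concrete, here is a minimal sketch of how a green channel could be turned into an alpha matte. It is not Netflix's actual pipeline; it assumes pixel values are floats between 0 and 1, that the magenta-lit foreground reflects no green light at all, and that the backdrop's green level (the hypothetical `backdrop_green` parameter) is known, for example from an empty shot of the screen. Under those assumptions, a pixel's observed green is simply the backdrop's green scaled by how much of the backdrop shows through, so a half-transparent bottle lands halfway between "keep" and "replace."

```python
"""Minimal sketch: derive an alpha matte from the green channel.

Assumptions (not from the source): pixel values are floats in [0, 1],
the foreground is lit only by red and blue light so it contributes no
green, and the backdrop's green level (backdrop_green) is known.
"""

Pixel = tuple[float, float, float]  # (red, green, blue)


def alpha_from_green(frame: list[list[Pixel]], backdrop_green: float) -> list[list[float]]:
    """Return a per-pixel alpha: 1.0 = keep foreground, 0.0 = replace it."""
    if backdrop_green <= 0:
        raise ValueError("backdrop_green must be positive")
    matte = []
    for row in frame:
        matte_row = []
        for _red, green, _blue in row:
            # Observed green = (1 - alpha) * backdrop_green, because the
            # magenta-lit foreground adds no green light of its own.
            alpha = 1.0 - green / backdrop_green
            # Clamp to [0, 1] so sensor noise can't push alpha out of range.
            matte_row.append(min(1.0, max(0.0, alpha)))
        matte.append(matte_row)
    return matte


# One row: pure backdrop, magenta-lit actor, half-transparent glass.
frame = [[(0.0, 0.9, 0.0), (0.6, 0.0, 0.7), (0.3, 0.45, 0.35)]]
print(alpha_from_green(frame, backdrop_green=0.9))  # -> [[0.0, 1.0, 0.5]]
```

A real system would also have to handle uneven backdrop lighting and green light bouncing onto the performers; the sketch ignores both.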

There's, of course, one very obvious problem with this technique: everything in front of the green screen has a bright magenta tint. To deal with it, the Netflix researchers employed a "machine learning colorization technique" trained on additional footage of the foreground subject filmed under traditional white lighting, so the AI model knows how everything is supposed to look and can remove the tint after the fact.

Besides potentially being faster and producing more accurate results, the new technique has another big advantage: it doesn't limit what the on-screen talent can wear. In the test footage, a performer is seen wearing a green dress and holding a green glass bottle, and neither disappears when the green backdrop is replaced.

But the technique introduces some new challenges, too. Having everything on set tinted magenta can make it harder for the crew to properly visualize and preview footage while on set, and the technique doesn't work in real time, unlike the systems used by weather forecasters who often appear in front of a forecast map. The researchers are working on ways to color-correct the footage in real time to facilitate on-set previews, and even if the results aren't perfect for the crew, the compromises could be worth it if the final product is even more convincing to audiences.