We take our sense of touch for granted. Simple tasks like opening a jar or tying our shoelaces would be a whole lot more complex if we couldn’t feel the object with our hands.

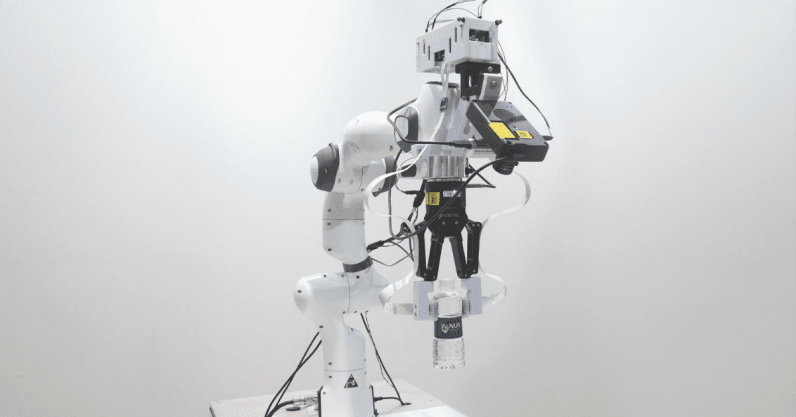

Robots typically struggle to replicate this sense, restricting their ability to manipulate objects. But researchers from the National University of Singapore (NUS) might have found a solution: pairing artificial skin with a neuromorphic “brain.”

The system was developed by a team led by Assistant Professors Benjamin Tee, an electronic skin expert, and Harold Soh, an AI specialist. Together, the duo has created a robotic perception system that combines touch and sight.

They told TNW it’s 1,000 times more responsive than the human nervous system, and capable of identifying the shape, texture, and hardness of objects 10 times faster than the blink of an eye. Tee calls it “the most intelligent skin ever.”


However, the artificial skin only solves half the puzzle of giving robots a sense of touch.

“They also need an artificial brain that can ultimately achieve perception and learning to complete the puzzle,” said Soh.

An artificial nervous system

The researchers built their artificial brain with Intel's neuromorphic research chip, Loihi. The chip processes data from an artificial "spiking" neural network inspired by human neurons, which communicate by sending electrical spikes to one another.
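To make the idea of spiking neurons more concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron. This is a common textbook model in which a neuron accumulates input, slowly leaks it away, and emits a spike once a threshold is crossed. It is an illustrative assumption, not the NUS team's network or the neuron model Loihi actually implements. The `LIFNeuron` class, its threshold and leak values, and the sensor readings are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Toy leaky integrate-and-fire neuron (hypothetical; not Loihi's neuron model)."""
    threshold: float = 1.0  # membrane potential at which the neuron fires
    leak: float = 0.9       # fraction of the potential retained each time step
    potential: float = 0.0  # current membrane potential

    def step(self, input_current: float) -> int:
        """Advance one time step; return 1 if the neuron spikes, else 0."""
        # Let some of the stored potential leak away, then add the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return 1
        return 0


def run(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Feed a sequence of inputs to the neuron and collect its spike train."""
    return [neuron.step(x) for x in inputs]


if __name__ == "__main__":
    # Hypothetical readings from a single tactile sensor over ten time steps.
    light_touch = [0.2] * 10
    firm_press = [0.6] * 10
    print(run(LIFNeuron(), light_touch))
    print(run(LIFNeuron(), firm_press))
```

With these made-up values, the light touch produces a single spike in ten steps, while the firm press fires every other step. The strength of a touch is therefore carried by how often the neuron spikes rather than by a continuously transmitted value.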

“The ability to transmit a large amount of information very quickly and efficiently — and do the computation as well — is something humans are very good at,” said Tee.

“To grab an object so that it’s not going to slip out of your grasp — and if it is slipping out, you’re reflexively quickly able to give it a stronger grab — these are things that humans do at a millisecond level.”

In their initial test of the system, the researchers applied the artificial skin to a robotic hand and tasked it with reading Braille. The system then sent the signals collected by the skin to the Loihi chip, which converted the data into semantic meaning. It classified the Braille letters with over 92 percent accuracy, using 20 times less power than a standard von Neumann processor.

The researchers then tested the benefits of combining vision and touch data. They added a camera to the system and trained the robot to classify containers filled with differing amounts of liquid. They say the Loihi chip processed this data 21 percent faster than the best-performing GPU, while using 45 times less power.

The researchers envision the system being used to handle objects in factories and warehouses. Eventually, they believe it could improve human-robot interactions in caregiving and surgery.

“In 50 years, I really think we will have surgical robots that can perform certain surgeries autonomously because they have a sense of touch,” said Tee.

Soh was more reluctant to make predictions.

“This is really an enabling technology, I think. I’m looking forward to the innovative ways that people are going to make use of this intelligent skin,” he said. “I think there are a lot of things that we haven’t even come up with yet.”

Published July 29, 2020 — 15:46 UTC