Google is usually reserved when it takes shots at competitors, but this time the company didn’t mince words: according to Google, Gemini has OpenAI beat on almost every measure.


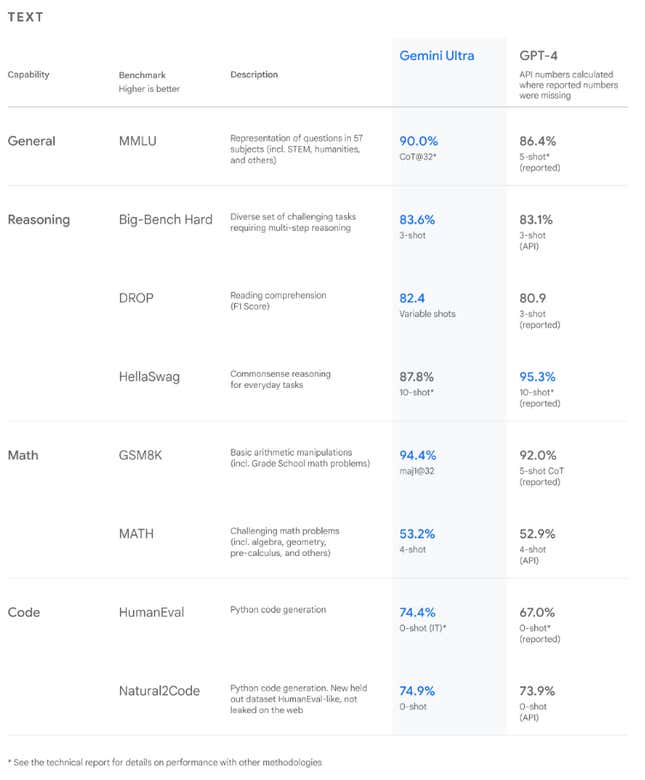

“With a score of over 90%, Gemini is the first AI model to outperform human experts on the industry-standard benchmark MMLU,” Eli Collins, vice president of product at Google DeepMind, said at a press conference. “It’s our largest and most capable AI model.” MMLU, short for Massive Multitask Language Understanding, measures AI capabilities using standard tests across 57 subjects, including math, physics, history, law, medicine, and ethics.

“Gemini’s performance also exceeds current state-of-the-art results on 30 out of 32 widely used industry benchmarks,” Collins said.
