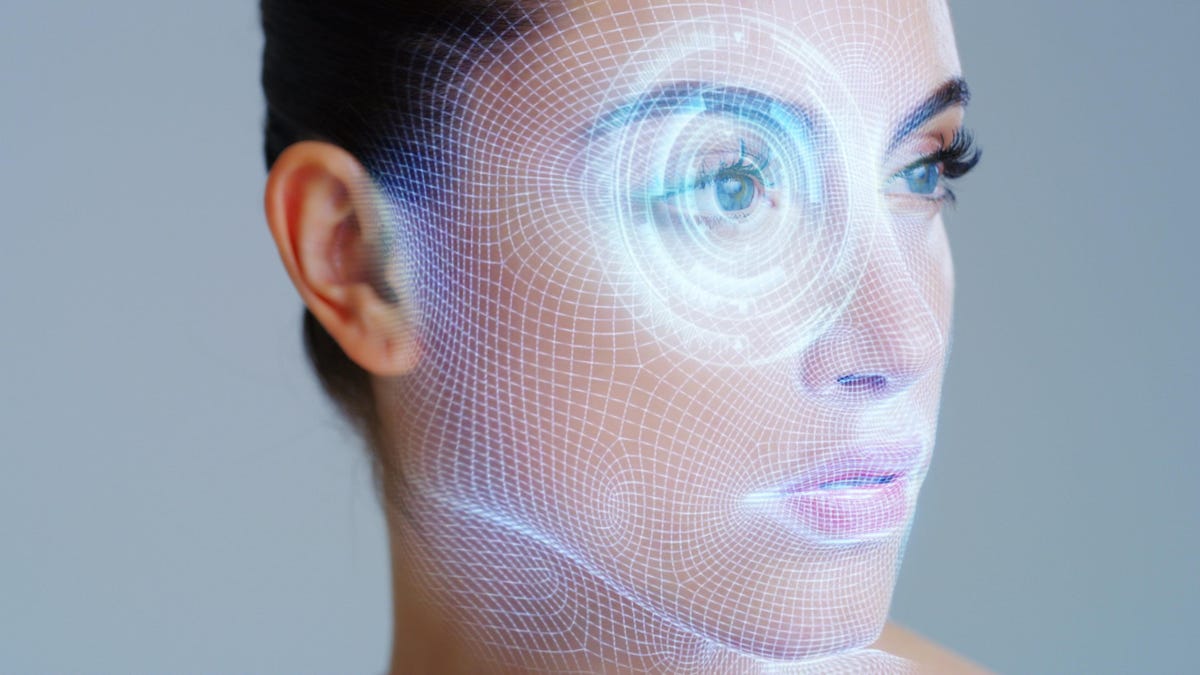

The world is being ripped apart by AI-generated deepfakes, and the latest half-assed attempts to stop them aren't doing a thing. On Thursday, federal regulators outlawed deepfake robocalls, such as the ones that impersonated President Biden ahead of New Hampshire's primary election. Meanwhile, OpenAI and Google released watermarks this week to label images as AI-generated. But these measures lack the teeth needed to stop AI deepfakes.

“They’re here to stay,” said Vijay Balasubramaniyan, CEO of Pindrop, which identified ElevenLabs as the service used to create the fake Biden robocall. “Deepfake detection technologies need to be adopted at the source, at the transmission point, and at the destination. It just needs to happen across the board.”

Deepfake Prevention Efforts Are Only Skin Deep

The Federal Communications Commission (FCC) outlawing deepfake robocalls is a step in the right direction, according to Balasubramaniyan, but there's little clarity on how the ban will be enforced. Right now, we catch deepfakes only after the damage is done, and we rarely punish the bad actors responsible. That's far too slow, and it doesn't address the actual problem.

OpenAI added watermarks to DALL-E's images this week, both as a visible mark and embedded in an image's metadata. However, the company simultaneously acknowledged that the watermark can be easily stripped just by taking a screenshot. This felt less like a solution and more like the company saying, "Oh well, at least we tried!"

Meanwhile, a finance worker in Hong Kong was duped into sending $25 million after deepfakes of his boss appeared on a video call. It was a shocking case that showed how deepfake technology is blurring the lines of reality.

The Deepfake Problem Is Only Going to Get Worse

These solutions are simply not enough. The issue is that deepfake detection technology is new, and it isn't catching on as quickly as generative AI. Platforms like Meta and X, and even your phone company, need to embrace deepfake detection. These companies make headlines with all their new AI features, but what about AI-detection features?

If you're watching a deepfake video on Facebook, Facebook should warn you about it. If you're getting a deepfaked phone call, your service provider should have software to catch it. These companies can't just throw their hands in the air, but they certainly seem to be trying.

Deepfake detection technology also needs to get a lot better and become much more widespread. Right now, no deepfake detection is 100% accurate for any type of content, according to Copyleaks CEO Alon Yamin. His company has one of the better tools for detecting AI-generated text, but detecting AI speech and video is another challenge altogether. Deepfake detection is lagging behind generative AI, and it needs to ramp up, fast.

Deepfakes are truly the new misinformation, but they're far more convincing. There is some hope that technology and regulators are catching up to this problem, but experts agree that deepfakes are going to get worse before they get better.

Services Marketplace – Listings, Bookings & Reviews