No, you’re not imagining things: More YouTube videos have been getting pulled down since the start of the coronavirus pandemic.

The Google-owned company confirmed as much Tuesday, making clear in its second quarter Community Guidelines Enforcement Report that it had removed scores of videos that did not, in fact, violate community standards.

At issue, the report explains, is an increased reliance on automated systems, the result of a decision to keep its more sophisticated human moderators at home. (“Some teams just can’t work from home if their work is sensitive, or if they live in areas that don’t have the right technical infrastructure in place,” Matt Koval, YouTube’s creator liaison, has explained.)

While potentially upsetting to the untold number of people who mistakenly had their innocent videos booted from the platform, this should not have come as a surprise. Back in March, YouTube warned that, going forward, it would err on the side of a particular type of caution when it came to having its automated systems remove content.

“When reckoning with greatly reduced human review capacity due to COVID-19, we were forced to make a choice between potential under-enforcement or potential over-enforcement,” reads Tuesday’s report in part. “Because responsibility is our top priority, we chose the latter—using technology to help with some of the work normally done by reviewers.”

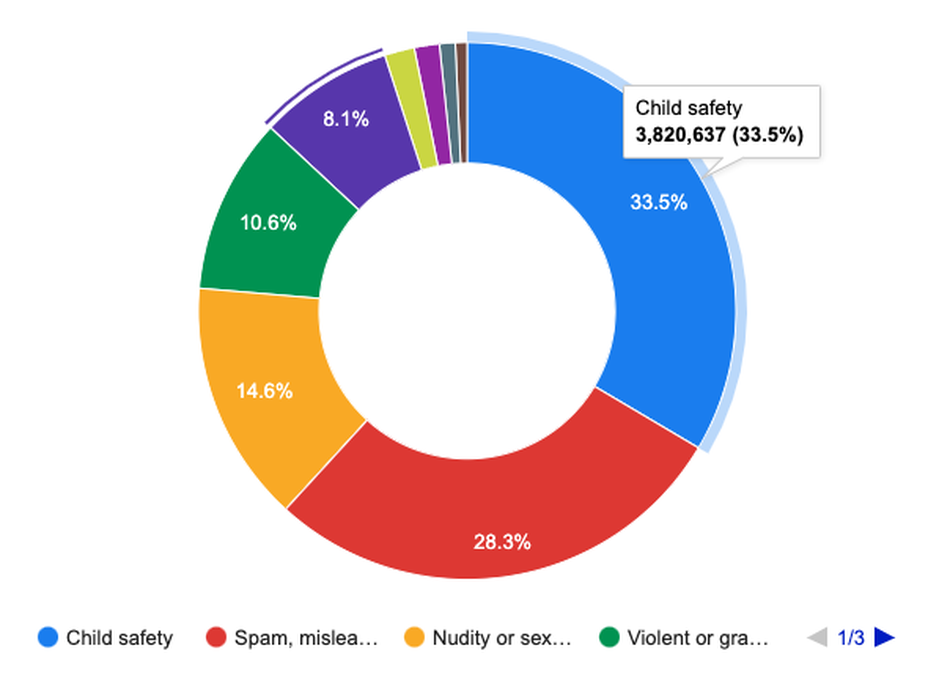

This increased reliance on machine learning had a noticeable and real effect. YouTube says it removed more than 11 million videos from April to June 2020, “more than double the number of videos we removed in the previous quarter.”

And, to be clear, YouTube now admits many of those removed videos were in fact harmless.

“Though the number of appeals remains a small fraction of total removals — less than 3% of video removals — we saw both the number of appeals and the reinstatement rate double from the previous quarter,” explained the report. “Notably, the number of videos reinstated on appeal increased from 25% of appeals in Q1 to 50% of appeals in Q2.”

Image: screenshot / youtube

In other words, when called out for making an alleged mistake, YouTube was much more likely to side with the video creator in the second quarter of this year than in the first.

YouTube’s second-quarter push toward automation also reverses at least some previous trends at the company. In 2017, YouTube CEO Susan Wojcicki announced a plan to bring the total number of people across Google working to address potentially violating content to more than 10,000 in 2018.

“Human reviewers remain essential to both removing content and training machine learning systems because human judgment is critical to making contextualized decisions on content,” Wojcicki wrote at the time.


It is possible, of course, that those human moderators did their job all too well, training YouTube’s automated systems well enough that the company felt comfortable giving its humans the boot.

We asked YouTube what effect, if any, this push to automation has had on its machine learning and automated enforcement systems. A company spokesperson replied that YouTube had no comment beyond what is in Tuesday’s report.

Perhaps if we asked one of its automated systems we’d get a more detailed reply.