From medical imaging and language translation to facial recognition and self-driving cars, examples of artificial intelligence (AI) are everywhere. And let’s face it: although not perfect, AI’s capabilities are pretty impressive.

Even something as seemingly simple and routine as a Google search represents one of AI's biggest successes: it can search far more information, far faster, than any human could, and at least most of the time it returns exactly what you were looking for.

The problem with all of these examples, though, is that the artificial intelligence on display is not really all that intelligent. While today's AI can do some extraordinary things, it works by analyzing massive data sets and looking for patterns and correlations without understanding the data it is processing. As a result, a system built on today's algorithms, which needs thousands of labeled samples to learn, only gives the appearance of intelligence. It lacks any real, common-sense understanding. If you don't believe me, just ask a customer service bot an off-script question.

AI's fundamental shortcoming can be traced back to the principal assumption at the heart of most AI development over the past 50 years: that if the difficult intelligence problems could be solved, the simple ones would fall into place. This turned out to be false.

In 1988, Carnegie Mellon roboticist Hans Moravec wrote, "It is comparatively easy to make computers exhibit adult-level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility." In other words, the problems that look difficult turn out to be relatively easy to solve, while the problems that look simple can be prohibitively difficult.

Two other assumptions that played a prominent role in AI development have also proven false:

– First, it was assumed that if enough narrow AI applications (i.e., applications that solve a specific problem using AI techniques) were built, they would grow together into a form of general intelligence. Narrow AI applications, however, don't store information in a generalized form, and other narrow AI applications can't draw on it to expand their breadth. So while stitching together applications for, say, language processing and image processing might be possible, those apps cannot be integrated the way a child integrates hearing and vision.

– Second, some AI researchers assumed that if a big enough machine learning system with enough computing power could be built, it would spontaneously exhibit general intelligence. As expert systems that attempted to capture the knowledge of a specific field have clearly demonstrated, it is simply impossible to create enough cases and example data to overcome a system's underlying lack of understanding.

If the AI industry knows that the key assumptions it made in development have turned out to be false, why hasn't anyone taken the necessary steps to move past them in a way that advances true thinking in AI? The answer is likely found in AI's principal competitor: let's call her Sally. She's about three years old, and she already knows lots of things no AI knows and can solve problems no AI can. When you stop to think about it, many of the things AI struggles with today are things any three-year-old can do.

Think of the knowledge Sally needs to stack a group of blocks. At a fundamental level, Sally understands that blocks (or any other physical objects) exist in a 3D world. She knows they persist even when she can't see them. She knows innately that they have a set of physical properties like weight, shape, and color. She knows she can't stack more blocks on top of a round, rolly one. She understands causality and the passage of time. She knows she has to build a tower of blocks before she can knock it over.

What does Sally have to do with the AI industry? Sally has what today’s AI lacks. She possesses situational awareness and contextual understanding. Sally’s biological brain is capable of interpreting everything it encounters in the context of everything else it has previously learned. More importantly, three-year-old Sally will grow to become four years old, and five years old, and 10 years old, and so on. In short, three-year-old Sally innately possesses the capabilities to grow into a fully functioning, intelligent adult.

In stark contrast, AI analyzes massive data sets looking for patterns and correlations without understanding any of the data it is processing. Even the recent “neuromorphic” chips rely on capabilities absent in biology.

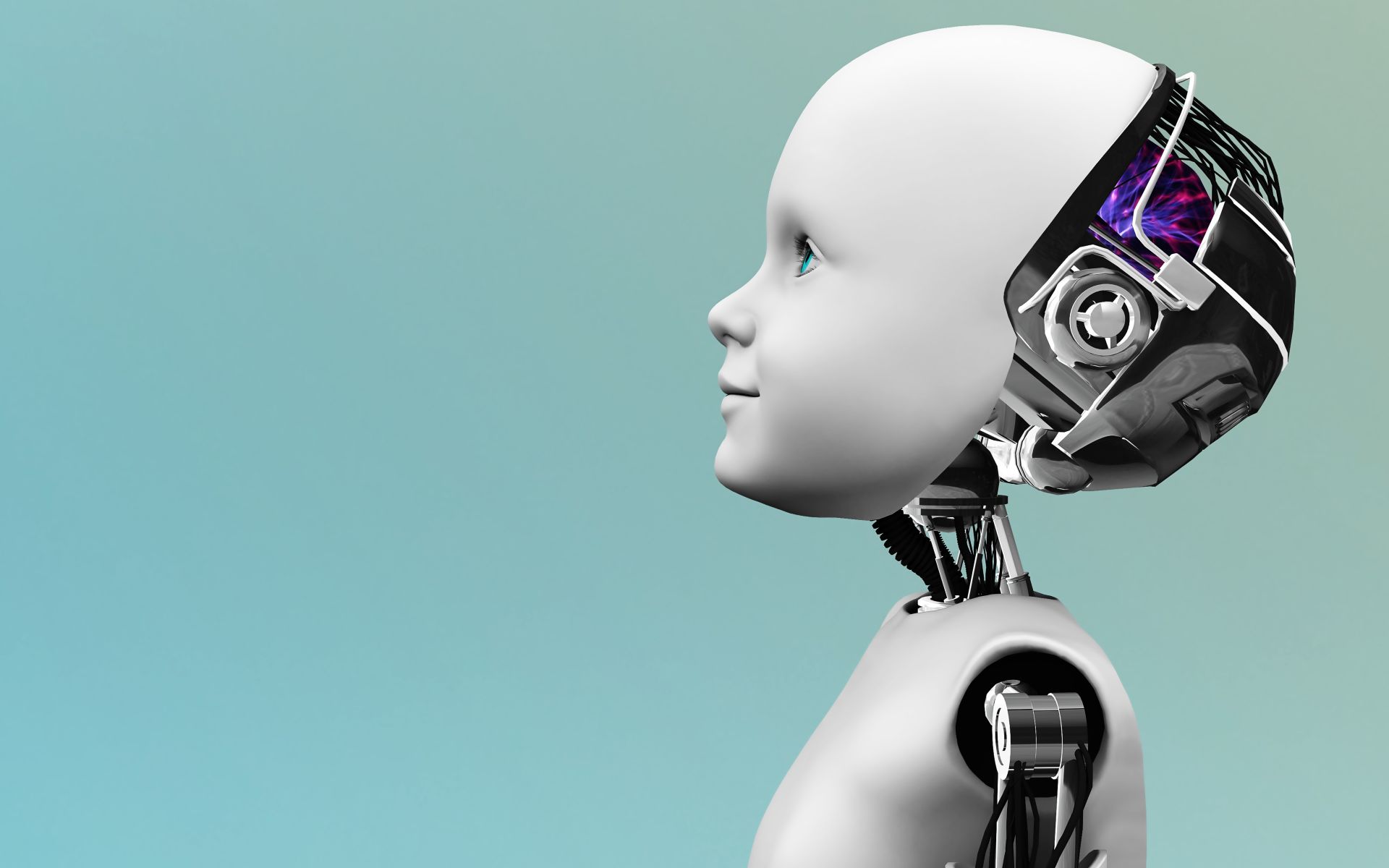

For today’s AI to overcome its inherent limitations and evolve into its next phase – defined as artificial general intelligence (AGI) – it must be able to understand or learn any intellectual task that a human can. It must attain consciousness. Doing so will enable it to consistently grow its intelligence and abilities in the same way that a human three-year-old grows to possess the intelligence of a four-year-old, and eventually a 10-year-old, a 20-year-old, and so on.

Sadly, the research needed to understand what it will take to replicate the contextual understanding of the human brain, and so enable AI to attain true consciousness, is highly unlikely to receive funding. Why not? Quite simply, no one (at least no one to date) has been willing to invest millions of dollars and years of development in an AI application that can do what any three-year-old can do.

And that inevitably brings us back to the conclusion that today's artificial intelligence really isn't all that intelligent. Of course, that won't stop numerous AI companies from bragging that their AI applications "work just like your brain." But they would be closer to the mark if they admitted that their apps are based on a single algorithm – backpropagation – and amount to a powerful statistical method. Unfortunately, the truth is just not as interesting as "works like your brain."

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. It also discusses the evil side of technology, the darker implications of new tech, and what we need to look out for.