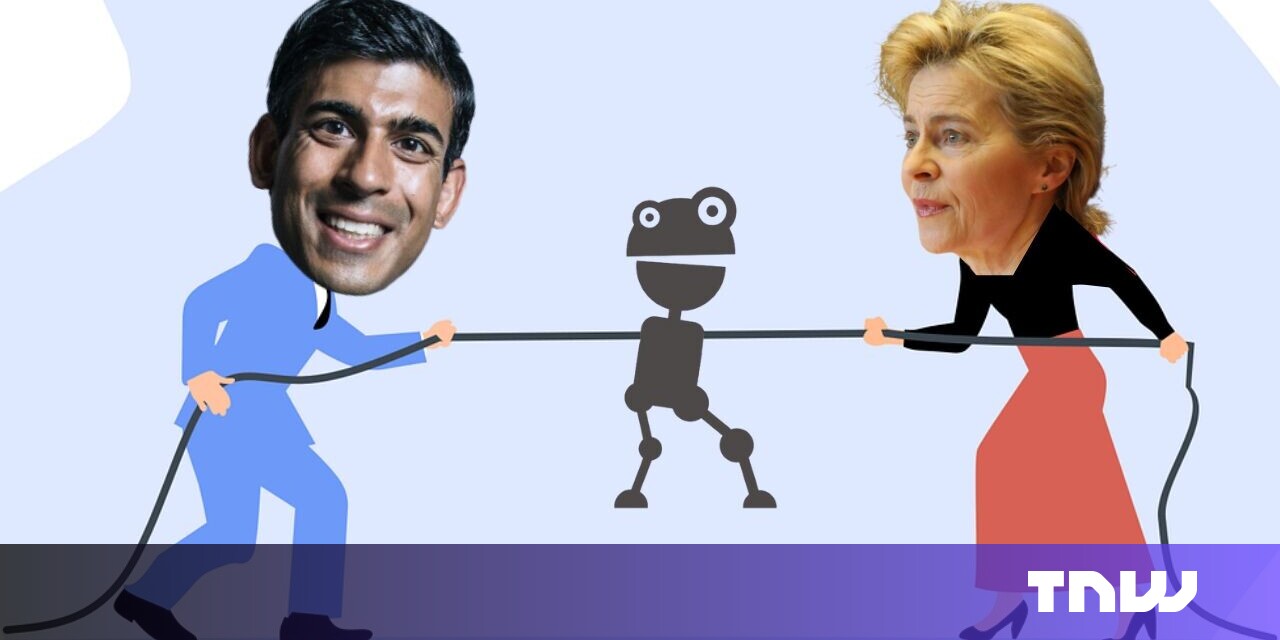

Britain has expanded its principles-based approach to AI regulation, which aims to create a pro-innovation edge over the EU.

In an announcement yesterday, the UK government unveiled seven new principles for so-called foundation models (FMs), which underpin applications such as ChatGPT, Google’s Bard, and Midjourney. Trained on immense datasets and adaptable to various applications, these systems are at the epicentre of the AI boom.

Their power has also sparked alarm. Critics warn that FMs can amplify inequalities, spread inaccurate information, and leave huge carbon footprints. The new principles are designed to mitigate these risks. Yet they also aim to spur innovation, competition, and economic growth.

“There is real potential for this technology to turbocharge productivity and make millions of everyday tasks easier — but we can’t take a positive future for granted,” said Sarah Cardell, CEO of Britain’s anti-trust regulator, the Competition and Markets Authority (CMA).

To safeguard that future, the CMA set out the following principles:

- Accountability – FM developers and deployers are accountable for outputs provided to consumers.

- Access – ongoing ready access to key inputs, without unnecessary restrictions.

- Diversity – sustained diversity of business models, including both open and closed.

- Choice – sufficient choice for businesses so they can decide how to use FMs.

- Flexibility – having the flexibility to switch and/or use multiple FMs according to need.

- Fair dealing – no anti-competitive conduct, including anti-competitive self-preferencing, tying, or bundling.

- Transparency – consumers and businesses are given information about the risks and limitations of FM-generated content so they can make informed choices.

Broad in nature, the principles reflect British plans to gain a global foothold in AI.

Across the Channel, the EU has taken a more centralised approach to regulation, with a stricter emphasis on safety. The cornerstone of the bloc’s vision is the landmark AI Act, the first-ever comprehensive legislation for artificial intelligence. The rulebook has won praise from safety experts, but criticism from businesses. In a recent open letter, a group of executives at some of Europe’s biggest companies warned that the proposals “would jeopardise Europe’s competitiveness and technological sovereignty.”

Notably, the UK government has also publicly criticised the EU’s AI regulation. When reports emerged that Britain’s tech sector is the most valuable in Europe, the country’s “less centralised” and “pro-innovation” programme was spotlighted for praise.

At the foundation of this approach is a principles-based framework. Rather than relying on rules that are overseen by a new body, the framework emphasises adaptable guidance and delegates responsibility to existing regulators.

It’s an approach that has drawn suspicion from AI ethicists, but praise from businesses. Gareth Mills, a partner at law firm Charles Russell Speechlys who represents clients in the tech sector, described the new principles as “necessarily broad.”

“The principles themselves are clearly aimed at facilitating a dynamic sector with low entry requirements that allows smaller players to compete effectively with more established names, whilst at the same time mitigating against the potential for AI technologies to have adverse consequences for consumers,” he said.

Mills also praised the CMA’s collaboration with the tech sector. In the coming months, the regulator aims to further consult leading FM developers such as Google, Meta, OpenAI, Microsoft, NVIDIA, and Anthropic. It will also speak to consumer groups, civil society, government experts, and other regulators.

“The CMA has shown a laudable willingness to engage proactively with the rapidly growing AI sector, to ensure that its competition and consumer protection agendas are engaged [at] as early a juncture as possible,” said Mills.

However, not everyone is so impressed by the approach. In recent years, a flood of AI principles and codes of ethics has been released, but critics argue that they’re “useless.”

“AI ethical principles are useless, failing to mitigate the racial, social, and environmental damages of AI technologies in any meaningful sense,” Luke Munn, a researcher at Western Sydney University, said in a recent paper. “The result is a gap between high-minded principles and technological practice.”
