My editor-in-chief dared me to pull a bunch of random topics out of a hat and write a compelling tech story about them. Here’s what I got: Jay-Z, neural networks, the US Navy SEALs, and jazz music.

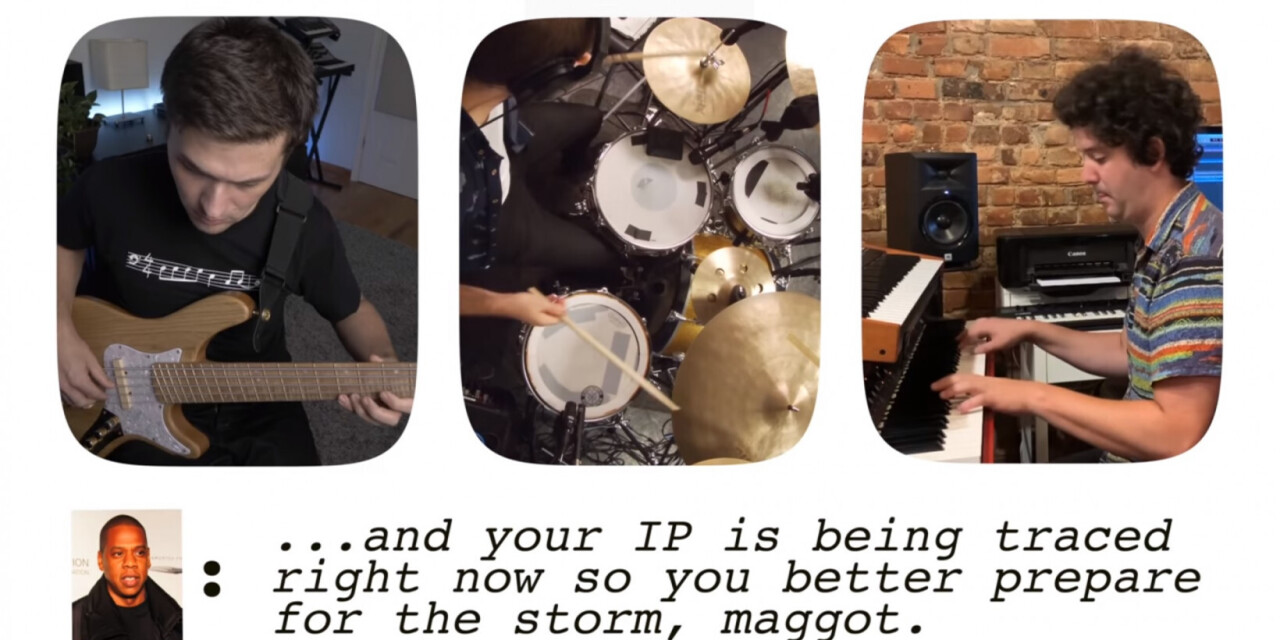

Luckily for me, I happened across this video featuring a group of talented jazz musicians interpreting a Deepfake AI-generated version of Jay-Z rapping the infamous Navy SEAL copypasta meme. Natch.

[embedded content]

Adam Neely, a popular music YouTuber with over a million subscribers, released the video as part of a CuriosityStream documentary. It features a very human quest to interpret a computer-generated rap acapella.

Jay-Z is a fantastic target for a Deepfakes AI because, unlike most rappers, he’s released a lot of official, studio-quality acapella tracks. This allows AI developers to train a neural network on his voice and rapping style without worrying about removing music or extracting vocals.

In theory, this should mean we can “style transfer” Jay’s flow, but that’s not quite how things work out. As Neely explains in the video, there’s an “uncanny valley” for music just like there is for visual imagery. The way this works for music is that, at some point, computer-generated vocals start getting kind of cute. Think “Still Alive” by GLaDOS:

[embedded content]

But the closer you get to dialing in a “real” human voice, the weirder it starts to get. It’s not quite so noticeable when you’re watching a Deepfake where President Barack Obama gives a speech, but when you really dig into the way things sound – like, say, when you’re trying to make novel music – the flaws in the system start to show.

For starters, Neely had to call on professional jazz musicians to help him make heads or tails of the faux-Z vocal tracks. In the video, he says:

“It didn’t have any of the musical aspects that we would normally find in hip hop music, namely a steady pulse and any kind of rhyme or rhythmic schemes. But there is something kind of musical there or rhythmic about it. At least that’s how I’m hearing it.”

Part of the issue is that the fake Jay-Z is using a copypasta as lyrics instead of something written to a beat. This means that it doesn’t follow any normal pattern of speaking, and that makes it incredibly hard to transpose into music. In other words: the current generation of Deepfakes is unlikely to produce a new Tupac or Biggie Smalls album anytime soon. They can copy the voice well enough for parody, but the musicianship isn’t quite there yet.

Neely wasn’t going to be derailed by the fact that robots suck at rapping. When he turned to his friends in the jazz music business, they were able to turn the scat-singing-like Deepfake utterances into as compelling a jazz song featuring an AI-generated Jay-Z as is probably humanly possible.

Check out the full video above for details.

Published August 4, 2020 — 18:08 UTC