How useful is your AI? It’s not a simple question to answer. If you’re trying to decide between Google Translate and Microsoft Translator, how do you know which one is better?

If you’re an AI developer, there’s a good chance you think the answer is: benchmarks. But that’s not the whole story.

The big idea

Benchmarks are necessary, important, and useful within the context of their own domain. If you’re trying to train an AI model to distinguish between cats and dogs in images, for example, it’s pretty useful to know how well it performs.

But since we can’t literally take our model out and use it to scan every single image of a cat and dog that’s ever existed or ever will exist, we have to sort of guess how good it will be at its job.

To do that, we use a benchmark. Basically, we grab a bunch of pictures of cats and dogs and we label them correctly. Then we hide the labels from the AI and ask it to tell us what’s in each image.

If it scores 9 out of 10, it’s 90% accurate. If we think 90% accurate is good enough, we can call our model successful. If not, we keep training and tweaking.
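The scoring procedure described above fits in a few lines. What follows is a minimal sketch, not any particular lab's evaluation harness. It assumes images are stood in for by filenames and that `predict` is a hypothetical function mapping an image to a label. The 0.9 "good enough" threshold is also just an illustrative assumption.

```python
from typing import Callable, Optional, Sequence


def benchmark_accuracy(
    predict: Callable[[str], Optional[str]],
    images: Sequence[str],
    labels: Sequence[str],
) -> float:
    """Fraction of benchmark items where the model's answer matches the hidden label."""
    if len(images) != len(labels):
        raise ValueError("every image needs exactly one label")
    if not images:
        raise ValueError("benchmark is empty")
    # The model is only shown the image; the label stays hidden until scoring.
    correct = sum(1 for img, label in zip(images, labels) if predict(img) == label)
    return correct / len(images)


# Hypothetical toy benchmark: 10 "images" (filenames) labeled cat/dog.
images = [f"img_{i}.jpg" for i in range(10)]
labels = ["cat", "dog"] * 5

# Hypothetical stand-in "model": a lookup table that gets exactly one item wrong.
answers = dict(zip(images, labels))
answers["img_3.jpg"] = "cat"  # the true label for img_3.jpg is "dog"

accuracy = benchmark_accuracy(answers.get, images, labels)
print(f"{accuracy:.0%}")  # 90%

# Illustrative threshold: is 90% good enough, or do we keep training?
print("good enough" if accuracy >= 0.9 else "keep training and tweaking")
```

Note that the resulting number only describes performance on these ten labeled items; nothing in the calculation says how the model will do on images outside the benchmark.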

The big problem

How much money would you pay for an AI capable of telling a cat from a dog? A billion dollars? Half a nickel? Nothing? Probably nothing. It wouldn’t be very useful outside of the benchmark leaderboards.

However, an AI capable of labeling all the objects in any given image would be very useful.

But there’s no “universal benchmark” for labeling objects. We can only guess how good such an AI would be at its job. Just as we don’t have access to every image of a cat and dog in existence, we also cannot label everything that could possibly exist in image form.

And that means any benchmark measuring how good an AI is at labeling images is an arbitrary one.

Is an AI that’s 43% accurate at labeling images from a billion categories better or worse than one that’s 71% accurate at labeling images from 28 million categories? Does it matter what the categories are?

BD Tech Talks’ Ben Dickson put it best in a recent article:

The focus on benchmark performance has brought a lot of attention to machine learning at the expense of other promising directions of research. Thanks to the growing availability of data and computational resources, many researchers find it easier to train very large neural networks on huge datasets to push the needle on a well-known benchmark rather than to experiment with alternative approaches.

We’re developing AI systems that are very good at passing tests, but they often fail to perform well in the real world.

The big solution

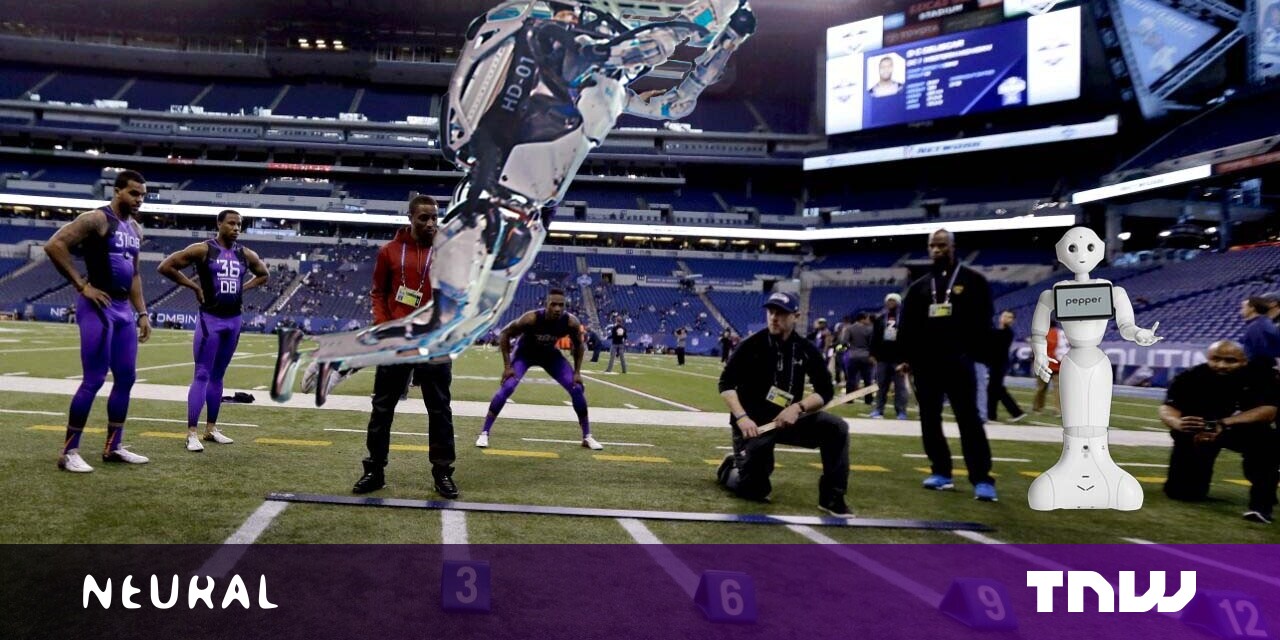

It turns out that guessing performance at scale isn’t a problem isolated to the world of AI. In 1982, National Football Scouting Inc. held the first “NFL Combine” to address the problem of busts: players who don’t perform as well as they were predicted to.

In the pre-internet era, the only way to evaluate players was in person, and the travel expenses involved in scouting hundreds or thousands of players throughout the year were becoming too great. The Combine gave NFL scouts a single place to gather and evaluate players side by side.

Not only did this save time and money, but it also established a universal benchmark. When a team wanted to trade or release a player, other teams could refer to their “benchmark” performance at the combine.

Of course, there are no guarantees in sports. But the Combine at least puts every player through the same series of drills, each specifically relevant to the sport of football.

However, the Combine is just a small part of the scouting process. In the modern era, teams hold private player workouts so they can determine whether a prospect appears to be a good fit in an organization’s specific system.

To put it in AI terms: NFL teams use a model’s benchmark results as a general performance predictor, but they also conduct rigorous external checks to determine that model’s usefulness in a specific domain.

A player may knock your socks off at the Combine, but if they fail to impress in individual workouts there’s a pretty good chance they won’t make the team.

Ideally, benchmarking in the AI world would simply represent the first round of rigor.

As a team of researchers from UC Berkeley, the University of Washington, and Google Research recently wrote:

Benchmarking, appropriately deployed, is not about winning a contest but more about surveying a landscape— the more we can re-frame, contextualize and appropriately scope these datasets, the more useful they will become as an informative dimension to more impactful algorithmic development and alternative evaluation methods.