In this installment of You Asked: What is HDR, really? Is streaming in 4K HDR10+ better than a 4K Blu-ray disc with just HDR10? What’s the difference between 4:2:0, 4:2:2, and 4:4:4 color? And why do some OLED TVs look terrible in the store?

Which streaming device is the best for image quality

We’re going to start with two commenters asking more or less the same question, and I’m sure there are more like it in the You Asked inbox.

Freddy writes: I already know that quality can change among the different streaming services, but can quality also depend on your streaming device? In other words, can an Apple TV 4K or Nvidia Shield produce a better picture on the same app compared to the cheaper Chromecast with Google TV or a Roku 4K device?

And Raphael asked: Will an Apple TV 4K provide better image and sound quality than the LG C1 built-in apps? I know people say the ATV4k has a better system, but the LG OS doesn’t bother me at all. My main concern is with image quality. And what about a PS5?

The short answer is that the hardware you are using to stream your content, whether that’s the apps built into your TV, inexpensive streaming sticks, or premium streaming boxes, can have an impact on the quality of your video and audio. But in many cases, it’s the platform that makes the difference.

The Amazon Prime Video app, for instance, doesn’t output the same quality video across every platform. It’s no surprise that the best Amazon Prime Video experience comes from an Amazon Fire TV device. That’s an instance of Amazon keeping the best for itself, which I think is just rude. Other cases are less nefarious. The Max or Disney+ app may look different streamed on a TCL Google TV versus a Hisense Google TV versus a Sony Google TV. The apps on the Roku platform may work differently than the same apps on the LG webOS platform or the Samsung Tizen platform. Digging into the details of which platform has the best versions of a given app can get super messy, as you’d imagine.

Then there’s the hardware consideration, which is also messy. I haven’t seen much of a difference in video quality between, say, the Roku Streaming Stick 4K and the Roku Ultra, unless the Roku Ultra’s Ethernet connection overcame a Wi-Fi connection limitation. However, I have noticed that the Chromecast with Google TV is consistent across TVs and sometimes ends up working better than the built-in Google TV apps on some TVs. The same goes for the Fire TV Stick versus the Cube on the Amazon platform. Most of the differences in the hardware aren’t related to the quality of the video they deliver, though. They have to do with how fast apps load and other frilly features.

As you can see, it’s a bit of a mess if you start drilling down too deeply. In general, I think most folks are going to be very happy with a high-quality external streamer in the long term. Keeping the streaming device separate from the TV means it can be updated independently and replaced inexpensively as needed to stay up to date. Those considerations alone make getting a decent streaming stick or dongle worth it. Plus, streaming sticks and dongles are portable. You can take them with you on vacations, visits to relatives, business trips, etc. I carry a Chromecast with Google TV in my backpack for just such reasons.

But for home use, on my main TVs? I tend to use Apple TV 4K boxes. The Apple TV 4K is not perfect, though it still sits atop our list of the best streaming hardware you can buy. For instance, it doesn’t support DTS:X right now, which Disney+ will soon support. But overall it is the most stable, reliable, and consistently high-quality experience out there. And then there’s all the Apple-specific stuff, if you’re a heavy Apple user. I also like the control that the Apple TV gives you. It lets you make sure that you’re watching 24 frames per second content at 24 fps instead of having 30 fps or 60 fps forced on you, which some streaming services and hardware will do.

I fought the Apple TV tooth and nail in the early days because it was comically expensive compared to streaming sticks — and even streaming boxes from competing brands. But now, in its more modern version, it has become my go-to for daily use and TV testing.

As a side note, game consoles generally leave a lot to be desired as media streamers. Convenient? Sure, if you’re a gamer first and a streamer a distant second. But I will never use a game console as a streamer for any long-term use.

Frame rate control

Felix from Germany says: I use an Alienware AW3423DW. I’m surprised I can use HDR even with the color depth set to 8-bit. I always thought 10-bit is necessary. In a previous You Asked, you said some panels are using “frame rate control” to achieve HDR even with the limits. But I also found the tech called “dithering.”

Frame rate control — or FRC — and dithering, within the context of what we’re talking about, are the same thing.
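To make the idea concrete, here is a minimal Python sketch of temporal dithering, assuming a simple four-frame cycle. The function names and the fixed pattern are illustrative assumptions, not any manufacturer's method; real panels use more elaborate patterns that also vary from pixel to pixel.

```python
def frc_sequence(level_10bit: int, frames: int = 4) -> list[int]:
    """Approximate one 10-bit level on an 8-bit panel over several refreshes.

    Hypothetical helper for illustration only; not any vendor's algorithm.
    """
    if not 0 <= level_10bit <= 1023:
        raise ValueError("10-bit levels run from 0 to 1023")
    # Each 8-bit step spans four 10-bit steps.
    base, remainder = divmod(level_10bit, 4)
    sequence = []
    for frame in range(frames):
        # Show the next-brighter 8-bit level on `remainder` out of every 4 frames.
        bump = 1 if frame % 4 < remainder else 0
        sequence.append(min(base + bump, 255))  # clip at the panel's top level
    return sequence


def perceived_level(sequence: list[int]) -> float:
    """Average the 8-bit frames and express the result on the 10-bit scale."""
    return 4 * sum(sequence) / len(sequence)


print(frc_sequence(514))                   # [129, 129, 128, 128]
print(perceived_level(frc_sequence(514)))  # 514.0
```

The panel never shows level 514 directly. It flips between 8-bit levels 128 and 129 quickly enough that the eye averages them into the in-between shade.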

What you’re asking is how HDR is possible with 8-bit color when I said previously that HDR required 10-bit color. And that is because some segments of the industry have decided that expanded luminance contrast — the increase in the range between black and white and the greater number of steps in the brightness — is enough to qualify as HDR. I do not agree, and I’m not alone. There are lots of other industry professionals and an increasing number of consumers who believe 10-bit color should be part and parcel of the HDR designation. However, since the HDR police aren’t out there slapping the cuffs on anyone who breaks the law — because there really isn’t a law — you will see some monitors and TVs that claim to be HDR-capable, even though they barely pull off HDR in any meaningful way.

By the way: That Alienware QD-OLED monitor is amazing, and you should feed it the best HDR signal you can because it deserves it. And so do you.

4K Blu-ray vs. HDR

Hasher asks: I recently bought a Samsung S95C and was wondering what would be a better option for me to watch my movies: movies on Prime Video that are in HDR10+ and UHD versus a 4K Blu-ray that is UHD, but just HDR and not HDR10+? There’s no Dolby Vision on the TV, so is it even possible to get a 4K Blu-ray that has HDR10+?

I’ll answer the second part of your question first: Yes, there is a list of 50+ 4K Blu-rays with HDR10+ on them. That isn’t a lot, though, so there’s a good chance that your decision is going to come down to whether you suffer the lower bit rate of streaming in favor of dynamic HDR10+ metadata or sacrifice HDR10+ dynamic metadata in favor of a high bit rate from a disc.

I really want to do a full comparison on this. But for now, I will say 4K Blu-ray is my preference. I think the higher bit rate will offer a much cleaner look — less color banding, more detail, less noise — and the HDR will be pretty dang good, even without the dynamic metadata. That’s where I stand. However, there’s no arguing that the streaming option is probably quite a bit cheaper and still pretty freaking great, so that could move the needle for you. But, if cost were no object, or if you really like owning your content, I’d buy the Blu-ray before the “digital copy” on Amazon.

Chroma subsampling

Thales asks: Can you explain a bit of the difference between RGB, YCbCr 4:2:2, and YCbCr 4:4:4, and which one would be the best to use on a desktop monitor? Also, do these also affect TVs?

First, let’s talk about bit depth, and then let’s talk about chroma subsampling because the two are often mentioned in the same breath, especially on PCs and cameras. Color bit depth refers to the number of colors that can be captured by a camera or expressed on a display. The number of colors is simple math. We have three color primaries: red, green, and blue. Those make up RGB.

In 8-bit color, there are 256 shades each of red, green, and blue. Multiply 256 times 256 times 256, and you get roughly 16.7 million colors. That seems like a lot. But then there’s 10-bit color, in which there are 1,024 shades each of red, green, and blue. Do that math, and you get 1.07 billion colors, which is a whole lot more. And to really boggle your brain: In 12-bit color, there are 4,096 shades each of red, green, and blue, working out to a dizzying 68.7 billion colors.
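If you want to check that math yourself, a few lines of Python will do it. This is just a sketch of the arithmetic above; the function name `color_count` is made up for the example.

```python
def color_count(bits_per_channel: int) -> int:
    """Total colors when red, green, and blue each get the same bit depth."""
    shades = 2 ** bits_per_channel  # e.g. 2**8 = 256 shades per primary
    return shades ** 3              # every red x green x blue combination


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} shades per channel, {color_count(bits):,} colors")
```

Each extra bit doubles the shades per primary, so each step of two bits multiplies the total color count by 64.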

Now, understand that color information — Chrominance — is big, fat-cat information. It takes a lot of space or bandwidth. It takes way more than luminance, or black-and-white information. This actually works out just fine for us because we are way more sensitive to brightness changes (that’s contrast) than we are to color.

In a video signal, luminance information — the black-and-white or grayscale — is uncompressed because it is super important and only takes up about one-third of the total bandwidth of that video signal.

Color being the space hog that it is, it usually gets compressed. If we compress nothing, we get 4:4:4 chroma. That’s the best, and there are very few sources of uncompressed color. The next step down is 4:2:2, which has half the color information but is still a bit of a space hog. Then we have 4:2:0, which is one-quarter of the color information and still a space hog, but it is more manageable. See, 4:2:0 chroma subsampling is most of what we get on TV. It’s the spec for streaming and for 4K Blu-ray Discs, and we’re all quite happy with it. Game consoles can support 4:2:2 chroma because they are basically mini-PCs. But remember that all that color information takes up a lot of space. So if you boost up to 4:2:2 chroma, you’ll likely have to sacrifice somewhere else, like frame rate, for example.
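Here is a rough Python sketch of where those fractions come from. It assumes the usual reading of the J:a:b notation: a block J pixels wide and two rows tall, with `a` color samples in the first row and `b` more in the second. It counts raw samples only and ignores bit depth and any codec compression; the helper name is hypothetical.

```python
from fractions import Fraction


def samples_per_block(j: int, a: int, b: int) -> tuple[int, int]:
    """Count luma and chroma samples in a J-wide, 2-row block for J:a:b."""
    luma = 2 * j          # every pixel keeps its own brightness sample
    chroma = 2 * (a + b)  # two color-difference channels (Cb and Cr)
    return luma, chroma


full_luma, full_chroma = samples_per_block(4, 4, 4)

for scheme in ((4, 4, 4), (4, 2, 2), (4, 2, 0)):
    luma, chroma = samples_per_block(*scheme)
    color_kept = Fraction(chroma, full_chroma)
    total = Fraction(luma + chroma, full_luma + full_chroma)
    label = ":".join(str(n) for n in scheme)
    print(f"{label}  color kept: {color_kept}  total data: {total}")
```

Under those assumptions, 4:2:2 keeps half the color samples and 4:2:0 keeps a quarter, which trims the raw signal to two-thirds and one-half of the 4:4:4 size, respectively.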

On a PC, you may want to opt for 4:2:2 or 4:4:4 if you can because you can see a difference with text over uniform colors. But, again, depending on your setup and your use case, pumping up the chroma subsampling may require trade-offs to other video performance that you don’t want to make.

Showroom OLEDs

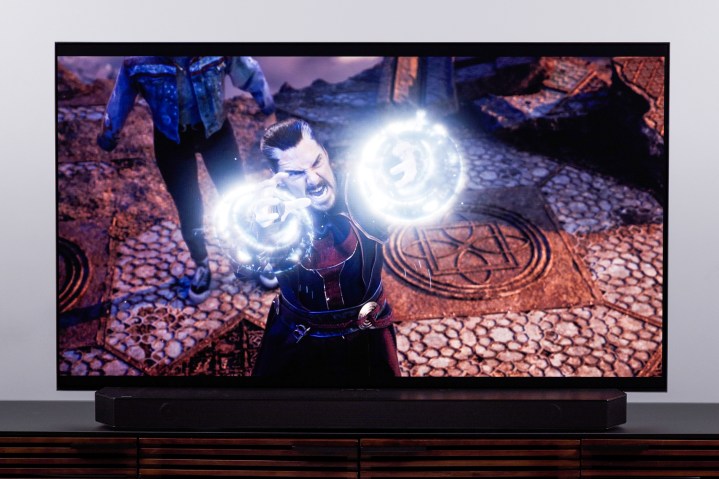

Jason Wuster writes that they are holding on to their 12-year-old Pioneer Kuro plasma: OLED TVs look terrible in the store. The displays with lizards on branches or honey dripping look astounding. But when they show films, they look like hot garbage. I was recently in my local store and they were showing Doctor Strange in the Multiverse of Madness on an LG C3, and it just looked mushy, smeary, and gross. Is this because of poor signal quality, or are they just poorly calibrated in-store? If I had spent $3,000 on one and it looked like that at home, I would have buyer’s remorse.

It’s hard for me to know exactly what’s going on at your local Best Buy, so I can only speculate. I suppose it is possible that the matrix switch they use to distribute the video signal to multiple TVs could be a limiting factor. But what’s most likely is that the TVs are in showroom mode, which means they’ll basically be in Vivid mode. And with Vivid mode, which over-brightens everything, blows out color, and applies a very cold color temperature, comes motion smoothing.

My guess is that what you saw was an extreme version of what we often call “soap opera effect,” which has a jarring look to anyone who is used to a 24-frame-per-second film cadence. Soap opera effect, the by-product of motion smoothing or motion interpolation, is not the same as watching high frame-rate video that was recorded at 60 fps or higher. It isn’t the same because the smoothing involves faked video: the TV is literally making up frames and inserting them. And the result is … well, it’s rarely good. It’s less annoying when the TV just has to double a 30-frame-per-second TV signal because that job is much easier than taking 24 frames per second, which doesn’t divide evenly into 60, and stretching it to 60, 120, or 240 frames per second.
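The arithmetic behind that cadence problem is easy to sketch in Python. This assumes a simplified model in which the TV shows every real frame once and fills the remaining refreshes with invented ones; actual motion processing is more involved, and the function name is hypothetical.

```python
from fractions import Fraction


def interpolation_load(source_fps: int, display_fps: int) -> tuple[Fraction, bool]:
    """Return (invented frames per real frame, whether the ratio is a whole number)."""
    ratio = Fraction(display_fps, source_fps)
    invented_per_real = ratio - 1  # refreshes left over after each real frame
    return invented_per_real, ratio.denominator == 1


for source, display in ((30, 60), (24, 60), (24, 120), (24, 240)):
    invented, even = interpolation_load(source, display)
    print(f"{source} -> {display} fps: {invented} invented per real frame, even ratio: {even}")
```

Doubling 30 fps means one invented frame per real one. Going from 24 to 60 fps works out to an awkward one and a half, and 24 to 120 or 240 fps divides evenly but asks the TV to invent four or nine frames for every real one.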

My best guess is that’s why you hate the look of OLED TVs at the store you’re visiting. You can always ask an associate to take the TV out of showroom mode and let you see what it looks like when it is in Filmmaker Mode or Cinema Mode (not Cinema Home, though, as that leaves some motion smoothing on). Anyway, many of us out here, myself included, moved from plasma to OLED and are pleased as punch about it. Even though OLED introduces some new issues that plasma didn’t have, its benefits far outweigh its faults. I made a video about plasma vs. OLED.

Blu-ray players

Roderick L Strong writes: I have the Panasonic UB820, and I love it — especially the HDR optimizer function it has. I’m on the fence about getting the Magnetar UDP800, but I don’t know if it’s something that I should invest in, considering how well my Panasonic performs and that I don’t have any physical audio media that the Magnetar could take advantage of. I would like your input: Should I stay with my Panasonic or make the leap with the Magnetar?

The simple answer: Stick with the Panasonic. Unless it is showing signs of age or causing you some other issues of concern, it is doing exactly the job you need it to do. I would not buy a new player expecting a noticeable upgrade in video fidelity. If you had a lot of physical audio media, that would be a different story. But for playing 4K Blu-rays, stick with what you have and what you already very much enjoy.
