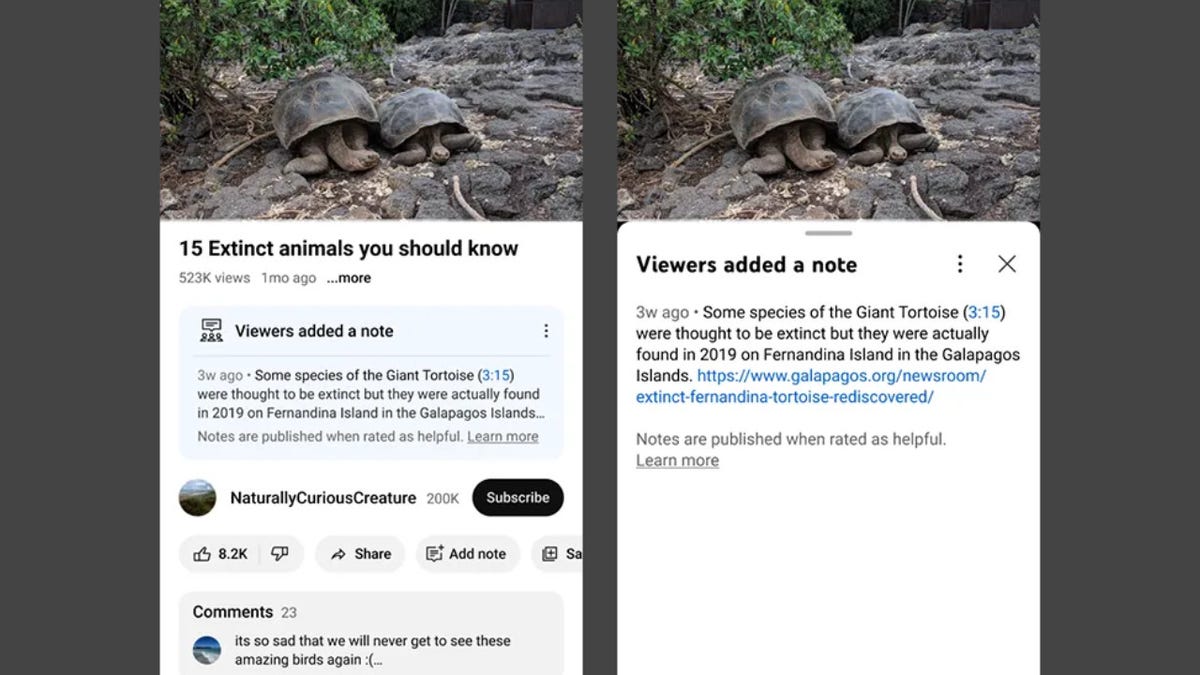

YouTube will soon be testing a feature that allows users to add notes under videos to correct inaccurate or misleading information, according to an announcement from the video-sharing platform on Monday. And while it sounds strikingly similar to X’s Community Notes program, there are still a lot of questions about how it will work.

The new program, which doesn’t appear to have an official name yet, will be rolled out to eligible contributors, who will be invited by email or through a notification in their Creator Studio portal. Only creators with an active channel in good standing will be invited, according to YouTube.

“Viewers in the U.S. will start to see notes on videos in the coming weeks and months,” YouTube said in a blog post. “In this initial pilot, third-party evaluators will rate the helpfulness of notes, which will help train our systems. These third-party evaluators are the same people who provide feedback on YouTube’s search results and recommendations. As the pilot moves forward, we’ll look to have contributors themselves rate notes as well.”

YouTube stressed that the effort is still experimental and that the company expects problems that will need to be ironed out along the way.

“The pilot will be available on mobile in the U.S. and in English to start,” YouTube said in a blog post on Monday. “During this test phase, we anticipate that there will be mistakes—notes that aren’t a great match for the video, or potentially incorrect information—and that’s part of how we’ll learn from the experiment.”

YouTube will also allow users to rate whether a note is helpful, which will theoretically help improve the system:

> From there, we’ll use a bridging-based algorithm to consider these ratings and determine what notes are published.
>
> A bridging-based algorithm helps identify notes that are helpful to a broad audience across perspectives. If many people who have rated notes differently in the past now rate the same note as helpful, then our system is more likely to show that note under a video. These systems will continuously improve as more notes are written and rated across a broad range of topics.
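
YouTube hasn’t published how its bridging algorithm actually works, so the Python below is only a rough, deliberately simplified illustration of the idea, not YouTube’s method. It assumes each rater has already been sorted into a viewpoint cluster (the hypothetical `rater_group` mapping, standing in for clusters a real system would infer from past rating behavior) and scores a note by the *lowest* helpful rate it earns in any cluster, so a note only scores well when every cluster finds it helpful. The `min_per_group` and `threshold` values are made up for illustration. X’s open-source Community Notes ranker uses a different technique, matrix factorization over the full rating history.

```python
from collections import defaultdict


def bridging_score(ratings, rater_group, min_per_group=2):
    """Simplified bridging score for one note (illustrative sketch only).

    ratings: list of (rater_id, is_helpful) pairs for the note.
    rater_group: dict mapping every rater_id to a viewpoint cluster label
        (hypothetical; assumed to come from past rating behavior).
    Returns the lowest per-cluster helpful rate, or None if any cluster
    has fewer than min_per_group ratings for this note.
    """
    counts = defaultdict(lambda: [0, 0])  # cluster -> [helpful, total]
    for rater, helpful in ratings:
        cluster = rater_group[rater]
        counts[cluster][1] += 1
        if helpful:
            counts[cluster][0] += 1

    clusters = set(rater_group.values())
    # Require some evidence from every perspective before scoring.
    if any(counts[c][1] < min_per_group for c in clusters):
        return None
    # The note is only as helpful as its least-convinced cluster finds it.
    return min(counts[c][0] / counts[c][1] for c in clusters)


def should_publish(score, threshold=0.5):
    # threshold is a hypothetical cutoff chosen for this example
    return score is not None and score >= threshold


rater_group = {"a": "A", "b": "A", "e": "A", "f": "A", "c": "B", "d": "B"}
note_1 = [("a", True), ("b", False), ("c", True), ("d", False)]
note_2 = [("a", True), ("b", True), ("e", True), ("f", True),
          ("c", False), ("d", False)]

print(bridging_score(note_1, rater_group))  # 0.5
print(bridging_score(note_2, rater_group))  # 0.0
```

In this toy example, `note_2` collects more helpful votes overall (four of six) than `note_1` (two of four), yet it scores 0.0 because cluster B rated it unhelpful across the board. That is the behavior the blog post describes in broad terms: agreement across perspectives counts for more than a raw vote tally.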

How to tackle misinformation and disinformation has long been a problem on the internet. But the stakes have grown much higher over the past decade, with the 2016 U.S. presidential election and the 2020 covid-19 pandemic making it clear that bad information can have very real consequences for the world. When lies spread quickly online, you can wind up with someone like Trump as president, as well as lots of people who are convinced that basic public health measures like vaccines will actually make them sick.

Complicating things even further, you’ve got nation-state actors spreading their own disinformation, as countries like Russia, China, and the U.S. all jockey for dominance in the post-Cold War world order. As Reuters revealed just last week, the U.S. military ran hundreds of Twitter accounts in 2020 and 2021 that spread propaganda questioning the safety of China’s covid-19 vaccine.

There are still a lot of unanswered questions about how YouTube’s new fact-checking system will work, including how notes might appear when a false claim is made in a very long video. Will there be timestamps to help identify where something in the video is wrong? And how many notes will be allowed on a given video? YouTube didn’t respond to questions about those details on Monday. We’ll update this post if we hear back.
