AI may not replace your doctor anytime soon, but it will probably be answering their emails. A study published Friday in JAMA Internal Medicine examined questions from patients and found that ChatGPT provided better answers than human doctors four out of five times. A panel of medical professionals evaluated the exchanges and preferred the AI’s response in 79% of cases. ChatGPT didn’t just provide higher-quality answers; the panel concluded the AI was more empathetic, too. It’s a finding that could have major implications for the future of healthcare.

“There’s one area in public health where there’s more need than ever before, and that is people seeking medical advice. Doctors’ inboxes are filled to the brim after this transition to virtual care because of COVID-19,” said the study’s lead author, John W. Ayers, PhD, MA, vice chief of innovation in the UC San Diego School of Medicine Division of Infectious Diseases and Global Public Health.

“Patient emails go unanswered or get poor responses, and providers get burnout and leave their jobs. With that in mind, I thought ‘How can I help in this scenario?’” Ayers said. “So we got this basket of real patient questions and real physician responses, and compared them with ChatGPT. When we did, ChatGPT won in a landslide.”

Real patient questions are hard to come by, but Ayers’s team found a novel solution. The study pulled from Reddit’s r/AskDocs, a forum where doctors with verified credentials answer users’ medical questions. The researchers randomly collected 195 questions and answers, then had ChatGPT answer the same questions. A panel of licensed healthcare professionals with backgrounds in internal medicine evaluated the exchanges. The panel first chose which response they thought was better, then rated both the quality of each answer and the empathy, or bedside manner, it conveyed.

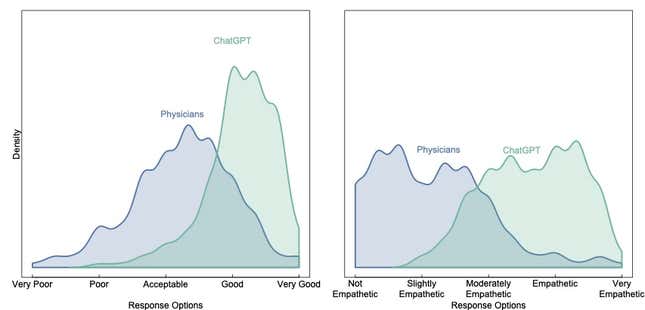

The results were dramatic. ChatGPT’s answers were rated “good” or “very good” more than three times as often as doctors’ responses, and the AI was rated “empathetic” or “very empathetic” almost 10 times as often.

The study’s authors say their work isn’t an argument for ChatGPT over other AI tools, and they caution that not enough is known about the risks and benefits for doctors to start using chatbots just yet.

“For some patients, this could save their lives,” Ayers said. For example, if you’re diagnosed with heart failure, it’s likely you’ll die within five years. “But we also know your likelihood of survival is higher if you have a high degree of compliance to clinical advice, such as restricting salt intake and taking your prescriptions. In that scenario, messages could help ensure compliance to that advice.”

The study says the medical community needs to move with caution. AI is progressing at an astonishing rate, and as the technology advances, so do the potential harms.

“The results are fascinating, if not all that surprising, and will certainly spur further much-needed research,” said Steven Lin, MD, executive director of the Stanford Healthcare AI Applied Research Team. However, Lin stressed that the JAMA study is far from definitive. For example, exchanges on Reddit don’t reflect the typical doctor-patient relationship in a clinical setting, and doctors with no therapeutic relationship with a patient have no particular reason to be empathetic or personalized in their responses. The results may also be skewed because the methodology for judging quality and empathy was simplistic, among other caveats.

Still, Lin said the study is encouraging and highlights the enormous opportunity that chatbots present for public health.

“There is tremendous potential for chatbots to assist clinicians when messaging with patients, by drafting a message based on a patient’s query for physicians or other clinical team members to edit,” Lin said. “The silent tsunami of patient messages flooding physicians’ inboxes is a very real, devastating problem.”

Doctors started playing around with ChatGPT almost as soon as it was released, and the chatbot shows a lot of potential for use in healthcare. But it’s hard to trust the AI’s responses, because in some cases, it lies. In another recent study, researchers had ChatGPT answer questions about preventing cardiovascular disease. The AI gave appropriate responses for 21 out of 25 questions. But ChatGPT made some serious mistakes, such as “firmly recommending” cardio and weightlifting, which can be unsafe for some people. In another example, a physician posted a TikTok about a conversation with ChatGPT where it made up a medical study. These problems could have deadly consequences if patients take a robot’s advice without input from a real doctor. OpenAI, the maker of ChatGPT, did not respond to a request for comment.

“We’re not saying we should just flip the switch and implement this. We need to pause and do the phase one studies, to evaluate the benefits and discover and mitigate the potential harms,” Ayers said. “That doesn’t mean we have to delay implementation for years. You could do the next phase and the next required study in a matter of months.”

There is no question that tools like ChatGPT will make their way into the world of medicine. It’s already started. In January, a mental health service called Koko tested GPT-3 on 4,000 people. The platform connects users with one another for peer-to-peer support, and it briefly let people use an OpenAI-powered chatbot to help write their responses. Koko said the AI messages got an overwhelmingly positive response, but the company shut the experiment down after a few days because it “felt kind of sterile.”

That’s not to say tools like ChatGPT don’t have potential in this context. Designed properly, a system that uses AI chatbots in medicine “may even be a tool to combat the epidemic of misinformation and disinformation that is probably the single biggest threat to public health today,” Lin said. “Applied poorly, it may make misinformation and disinformation even more rampant.”

Reading through the data from the JAMA study, the results seem clear even to a layperson. For example, one man said he gets arm pain when he sneezes, and asked if that’s cause for alarm. The doctor answered “Basically, no.” ChatGPT gave a detailed, five-paragraph response with possible causes for the pain and several recommendations, including:

“It is not uncommon for people to experience muscle aches or pains in various parts of their body after sneezing. Sneezing is a sudden, forceful reflex that can cause muscle contractions throughout the body. In most cases, muscle pain or discomfort after sneezing is temporary and not a cause for concern. However, if the pain is severe or persists for a long time, it is a good idea to consult a healthcare professional for further evaluation.”

Unsurprisingly, all of the panelists in the study preferred ChatGPT’s answer. (Gizmodo lightly edited the details in the doctor’s example above to protect privacy.)

“This doesn’t mean we should throw doctors out of the picture,” Ayers said. “But the ideal message generating candidate may be a doctor using an AI system.”

If (and, inevitably, when) ChatGPT starts helping doctors with their emails, it could be useful even if it isn’t providing medical advice. With ChatGPT, doctors could work faster, giving patients the information they need without having to worry about grammar and spelling. Ayers said a chatbot could also help doctors reach out to patients proactively with health care recommendations, as many doctors did in the early stages of the pandemic, rather than waiting for patients to get in touch when they have a problem.

“This isn’t just going to change medicine and help physicians, it’s going to have a huge value for public health, we’re talking en masse, across the population,” Ayers said.
